- December 1, 2025

- Hema Kadia

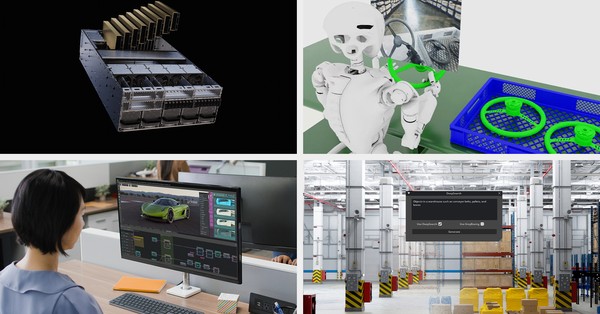

Nvidia used NeurIPS to expand an open toolkit for digital and physical AI, led by a flagship reasoning model for autonomous driving and backed by a broader stack that targets speech, safety, and reinforcement learning. The company introduced DRIVE Alpamayo-R1 (AR1), an open vision-language-action model that fuses multimodal perception with chain-of-thought reasoning and path