Why SK Telecom and VAST Data Matter for Sovereign AI in Korea

This collaboration establishes a national-scale GPU-as-a-Service platform that aligns telco infrastructure with sovereign AI requirements, accelerating time-to-model while keeping data and control in-country.

Partnership Overview: Petasus GPUaaS for Korea

SK Telecom is partnering with VAST Data to power the Petasus AI Cloud, a sovereign GPUaaS built on NVIDIA accelerated computing and Supermicro systems, designed to support both training and inference at scale for government, research, and enterprise users in South Korea.

The rollout centers on the Haein Cluster, selected for Korea’s AI Computing Resource Utilization Enhancement program, signaling policy-level support for elastic, in-country access to advanced GPUs and shared AI infrastructure.

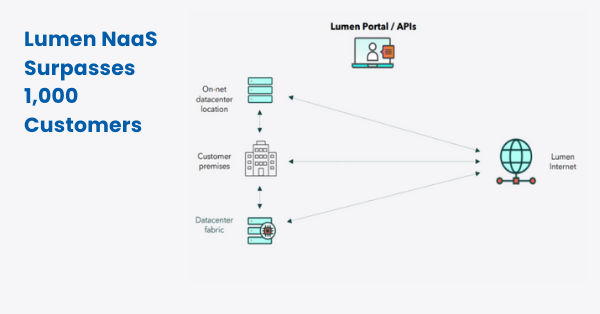

By placing VAST Data’s AI Operating System at the heart of Petasus, SKT is unifying data and compute services into a single control plane, turning legacy bare-metal workflows that took days or weeks into virtualized environments that can be provisioned in minutes and operated with carrier-grade resilience.

Why Now: Speed, Sovereignty, and GPU Supply Constraints

Demand for foundation models and enterprise-grade inference is outpacing on-prem capacity, while regulatory and competitive pressures make data residency, governance, and cost control non-negotiable.

Telecom operators are uniquely positioned to deliver sovereign AI utilities because they already run highly available networks and data centers, and the SKT-VAST design shows how virtualization can deliver near bare-metal performance without sacrificing isolation or uptime.

Inside Korea’s Haein Cluster and Petasus AI Cloud

The platform integrates modern GPUs, disaggregated storage, and secure multi-tenancy to deliver elastic AI services within national borders.

Architecture: VAST DASE with NVIDIA HGX and Supermicro

The Petasus AI Cloud pairs VAST Data’s disaggregated, shared-everything architecture with NVIDIA HGX-based servers built by Supermicro, creating a high-throughput data and compute fabric designed for parallelism, scale, and resilience.

Next-generation NVIDIA Blackwell GPUs anchor training and inference capacity, while VAST’s AI OS consolidates data services, compute orchestration, and workflow execution into a unified platform capable of servicing multiple tenants without client-side gateways or proprietary shims.

This combination reduces data movement bottlenecks, improves GPU utilization, and provides a consistent data plane for model development, fine-tuning, and production inference.

Virtualization Without Penalty: GPUaaS in Minutes

Where provisioning AI jobs on bare metal can stall projects for days or weeks, Petasus uses virtualization to stand up GPU environments in roughly ten minutes while preserving performance that closely tracks bare-metal baselines.

VAST’s software automates resource allocation across GPUs, storage, and the associated networking fabrics, carving out dedicated pools per tenant and per workload to match policy, performance, and security requirements.
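The per-tenant carve-out described above can be illustrated with a minimal sketch. It is not VAST's API or SKT's implementation: `GpuPool`, `allocate`, `release`, and the tenant names are hypothetical, and the model tracks only GPU counts, ignoring storage and networking.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class GpuPool:
    """Hypothetical cluster-wide pool of identical GPUs (illustration only)."""

    total_gpus: int
    # Maps tenant name -> number of GPUs currently dedicated to that tenant.
    allocations: Dict[str, int] = field(default_factory=dict)

    @property
    def free_gpus(self) -> int:
        """GPUs not yet dedicated to any tenant."""
        return self.total_gpus - sum(self.allocations.values())

    def allocate(self, tenant: str, gpus: int) -> bool:
        """Reserve a dedicated slice for a tenant; refuse if capacity is short."""
        if gpus <= 0 or gpus > self.free_gpus:
            return False
        self.allocations[tenant] = self.allocations.get(tenant, 0) + gpus
        return True

    def release(self, tenant: str) -> int:
        """Return a tenant's GPUs to the shared pool and report how many were freed."""
        return self.allocations.pop(tenant, 0)


if __name__ == "__main__":
    pool = GpuPool(total_gpus=16)
    print(pool.allocate("research-lab", 8))  # request fits within free capacity
    print(pool.allocate("ministry", 12))     # request exceeds remaining capacity
    print(pool.free_gpus)
```

A real scheduler would also weigh policy, topology, and quality-of-service targets; the point here is only that each tenant's slice is tracked separately and a request is rejected rather than oversubscribed.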

Secure Multi‑Tenancy and Simplified Lifecycle

The platform enforces workload isolation and data privacy with quality-of-service guarantees, which is essential for mixed government, research, and enterprise tenants sharing national resources.

By providing a single, unified pipeline for training and inference, teams can move models from experimentation to production with fewer data copies and operational touchpoints, improving time-to-value and reducing operational risk.

Carrier-grade uptime and lean operations are baked into the design, aligning with telco reliability expectations and enabling consistent SLAs for AI services.

Business Impact for Telcos and Enterprises

The design offers a blueprint for telcos to monetize AI infrastructure while giving enterprises sovereign, elastic access to state-of-the-art GPUs.

For Telcos: Toward a National AI Utility

Operators can extend beyond connectivity to deliver GPUaaS, data services, and model lifecycle operations, priced as a utility and governed to national standards.

Selection for the Ministry of Science and ICT's GPU rental support program underscores the public-private alignment needed to scale capacity, de-risk capital investment, and ensure equitable access to advanced compute.

By virtualizing GPUs with near-native performance, telcos can drive higher utilization, shorten provisioning cycles, and expand addressable markets across research institutions, startups, and regulated industries.

For Enterprises and Public Sector: Elastic, In‑Country AI

Organizations gain access to modern NVIDIA platforms without navigating supply constraints or building bespoke AI stacks, while keeping data, models, and operations within South Korea’s borders.

Unified data and compute services simplify compliance, reduce data gravity challenges, and streamline MLOps, from pretraining and fine-tuning to real-time inference at scale.

What to Watch Next

Execution details will determine whether this sovereign AI model becomes a repeatable pattern for other markets and operators.

Performance and Operational KPIs

Track GPU utilization rates, time-to-provision, job queue times, training throughput, inference latency, and SLA adherence, along with failure domain containment and recovery times tied to carrier-grade targets.
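As a rough illustration of how a few of these KPIs might be computed from raw measurements, here is a small sketch. The function names, inputs, and sample figures are assumptions made for the example, not metrics published by SK Telecom or VAST Data.

```python
from statistics import mean
from typing import Sequence


def gpu_utilization(busy_gpu_hours: float, available_gpu_hours: float) -> float:
    """Fraction of available GPU-hours that were actually spent running jobs."""
    if available_gpu_hours <= 0:
        raise ValueError("available_gpu_hours must be positive")
    return busy_gpu_hours / available_gpu_hours


def mean_time_to_provision(minutes: Sequence[float]) -> float:
    """Average time, in minutes, from request to a usable GPU environment."""
    if not minutes:
        raise ValueError("need at least one provisioning sample")
    return mean(minutes)


def sla_adherence(latencies_ms: Sequence[float], target_ms: float) -> float:
    """Share of inference requests whose latency met the SLA target."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    met = sum(1 for latency in latencies_ms if latency <= target_ms)
    return met / len(latencies_ms)


if __name__ == "__main__":
    # Made-up sample values, used only to exercise the functions.
    print(gpu_utilization(busy_gpu_hours=750, available_gpu_hours=1000))
    print(mean_time_to_provision([8, 10, 12]))
    print(sla_adherence([10, 20, 30, 40], target_ms=30))
```

Averages hide tail behavior, so in practice percentiles (for example p95 provisioning time or p99 latency) are usually reported alongside these figures.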

Ecosystem Integration and Developer Experience

Watch how quickly the platform exposes frictionless, multi-protocol access for data scientists and MLOps teams, and how it integrates with common AI frameworks, data pipelines, and enterprise security controls.

Capacity Scaling and Cost Efficiency

Monitor the cadence of NVIDIA Blackwell capacity additions, power and cooling efficiency, and the impact of disaggregation on TCO, including the balance between virtualization flexibility and performance for large training runs.

Leadership Takeaways

Technology leaders should use this deployment as a template for building a compliant, elastic AI infrastructure that balances speed, control, and cost.

Design for Sovereignty and Speed

Define data residency, access control, and audit requirements up front, and pair them with a provisioning target measured in minutes, not weeks, to keep model development cycles on track.

Adopt a Unified Data and Compute Plane

Consolidate training and inference pipelines on a shared, high-throughput fabric to cut data copies, improve GPU utilization, and simplify operations across tenants.

Prioritize Isolation with Carrier‑Grade Reliability

Engineer for strict workload separation, predictable performance, and automated recovery, treating AI services with the same rigor as critical network functions.

Align Funding and Ecosystem Partnerships

Leverage public programs, hardware partners such as Supermicro, and GPU roadmaps from NVIDIA to secure capacity, manage TCO, and accelerate time-to-service for national AI initiatives.

Pilot, Measure, and Iterate

Start with high-impact workloads, instrument end-to-end KPIs, and use data to refine resource allocation, scheduling, and cost models as adoption scales across research, government, and enterprise tenants.