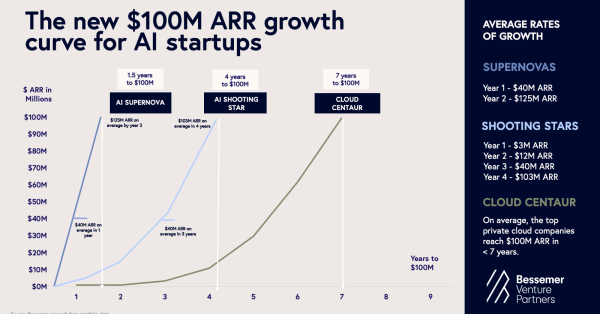

AI startups are scaling revenue faster than the cloud era

New data shows AI-native companies are scaling revenue at speeds the cloud era never reached, and the implications span software, telecom, and the broader digital economy.

Evidence: venture benchmarks and Stripe telemetry on ARR speed

Bessemer Venture Partners’ latest State of AI analysis describes two breakout archetypes: supernovas that sprint from near-zero to meaningful ARR in their first year, and shooting stars that scale like elite SaaS companies with healthier margins and retention. On the ground, Stripe’s 2024 processing data reinforces the trend: the top AI startups are hitting early revenue milestones materially faster than prior SaaS cohorts. Named examples stand out: Cursor reportedly crossed nine-figure revenue, while Lovable and Bolt reached eight figures within months, underscoring how AI-native distribution and usage patterns compress time-to-scale.
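Comparisons of "ARR speed" usually reduce to time-to-milestone on a run-rate basis. The sketch below assumes ARR is approximated as monthly recurring revenue × 12, a common convention that is not necessarily how Bessemer or Stripe compute their figures; the function name is hypothetical.

```python
from typing import Optional, Sequence


def months_to_arr(monthly_revenue: Sequence[float], milestone: float) -> Optional[int]:
    """Return the 1-based month in which annualized run-rate (MRR x 12)
    first reaches `milestone`, or None if it never does.

    Assumption: ARR is approximated as MRR * 12 (hypothetical helper).
    """
    for month, mrr in enumerate(monthly_revenue, start=1):
        if mrr * 12 >= milestone:
            return month
    return None
```

Running the same function over two cohorts' monthly revenue series gives a like-for-like comparison of how many months each took to reach, say, $1M or $10M in run-rate.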

Drivers: falling transaction costs, LLM interfaces, and faster scale

Classic growth levers matter (subsidization and insatiable demand), but the deeper driver is structural. The modern internet stack has converted fixed costs into variable services: cloud compute (AWS, GPUs), payments (Stripe), customer service (Intercom), growth engines (Google and Meta ad platforms), and viral distribution surfaces (Discord, app stores). Layer in LLMs that automate the four primary external interfaces (voice, text, UI, and APIs), and the transaction costs Ronald Coase wrote about are falling again. Coase argued that firms grow to internalize work that is too costly to contract for on the open market; as those costs fall, a small company can rent most of what it once had to build and staff. That’s why lean teams can reach scale quickly; Midjourney’s ascent with a tiny headcount is emblematic. The generative AI market tailwind then amplifies otherwise familiar growth playbooks.

Enterprise disruption and why telecom operators must act

AI is not a feature war; it’s a workflow rewrite that erodes switching costs and threatens the deepest moats in enterprise software and operations.

AI systems of action erode CRM/ERP/ITSM lock-in

AI-native apps structure unstructured data, auto-generate integration code, and ingest multi-source telemetry, collapsing migrations from years to days. That weakens decades of lock-in around CRM, ERP, and ITSM from incumbents like Salesforce, SAP, Oracle, and ServiceNow. For telecom, the parallels are direct: BSS/OSS, CRM, CPQ, and knowledge systems can be displaced by systems of action that capture data passively and execute agentic workflows across provisioning, field service, and care. Expect buyers to reward tools that deliver hard ROI on day one: reduced truck rolls, faster order-to-activate, lower AHT, and fewer escalations.

Agentic browsers and MCP standards enable safe automation

The browser is becoming the operating layer for agents. Products like Perplexity’s Comet and The Browser Company’s Dia preview how AI will observe and act across the web. Under the hood, Anthropic’s Model Context Protocol (now embraced by OpenAI, Google DeepMind, and Microsoft) is emerging as a USB-C for AI, standardizing how agents access tools, APIs, and data. Telco vendors and integrators should plan MCP-compatible plug-ins for provisioning, billing, network telemetry, and identity to enable safe, controllable automation across silos.
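MCP messages are JSON-RPC 2.0: a server advertises its tools in response to `tools/list` and executes one in response to `tools/call`. The stdlib-only sketch below mimics that message shape for a hypothetical telco plug-in; the `get_order_status` tool and the order IDs are invented for illustration. It is not the official MCP SDK and omits the transport, the initialization handshake, and authentication.

```python
import json
from typing import Any, Dict


def get_order_status(order_id: str) -> str:
    # Hypothetical tool: a real adapter would call the BSS/OSS API here.
    fake_orders = {"ORD-1": "activated", "ORD-2": "pending"}
    return fake_orders.get(order_id, "unknown")


# Tool registry: name -> description, JSON Schema for arguments, and handler.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_order_status": {
        "description": "Look up provisioning status for a service order.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        "handler": get_order_status,
    }
}


def handle(request_json: str) -> str:
    """Dispatch one JSON-RPC request shaped like MCP's tools/list and tools/call."""
    req = json.loads(request_json)
    method = req.get("method")
    if method == "tools/list":
        result: Dict[str, Any] = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # Tool-level failures are reported inside the result, flagged with isError.
            result = {"content": [{"type": "text", "text": "unknown tool"}], "isError": True}
        else:
            text = tool["handler"](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # Standard JSON-RPC "method not found" error.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})
```

Keeping each tool's input schema explicit is what lets an agent discover and call provisioning or billing functions without bespoke glue per integration.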

Private eval pipelines and data lineage for regulated AI

Public benchmarks are too coarse for regulated, decision-critical use. The next wave is private, use-case-specific evaluation pipelines tied to business metrics (accuracy, latency, hallucination risk, compliance outcomes) and airtight lineage. A new tooling ecosystem (e.g., Braintrust, LangChain, Bigspin.ai, Judgment Labs) is forming to operationalize this. For operators, evals need to span CX agents, AIOps, fraud, and credit decisions, with defensible audit trails.
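A private eval pipeline can start very small: run the model over an in-house case set, score it, and stamp the report with enough lineage (model version plus a hash of the exact cases) to reproduce it in an audit. The sketch below assumes exact-match scoring and a plain callable as the model; `run_eval` and `EvalCase` are hypothetical names, not the API of any tool named above, and real hallucination or compliance checks would need custom graders.

```python
import hashlib
import json
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class EvalCase:
    prompt: str
    expected: str


def run_eval(model_fn: Callable[[str], str], cases: List[EvalCase],
             model_version: str) -> Dict[str, Any]:
    """Score a model on private cases and record lineage for audit (hypothetical)."""
    if not cases:
        raise ValueError("eval set is empty")
    correct = 0
    latencies = []
    for case in cases:
        start = time.perf_counter()
        output = model_fn(case.prompt)
        latencies.append(time.perf_counter() - start)
        # Simplification: case-insensitive exact match stands in for a real grader.
        if output.strip().lower() == case.expected.strip().lower():
            correct += 1
    # Hash the exact eval set so every score traces back to the data that produced it.
    dataset_hash = hashlib.sha256(
        json.dumps([[c.prompt, c.expected] for c in cases]).encode("utf-8")
    ).hexdigest()
    return {
        "model_version": model_version,
        "dataset_sha256": dataset_hash,
        "n_cases": len(cases),
        "accuracy": correct / len(cases),
        "max_latency_s": max(latencies),
    }
```

Storing each report alongside the model version and dataset hash gives the defensible audit trail the paragraph calls for: any score can be re-derived from the same inputs.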

Where value concentrates in 2025–2026

The stack is crystallizing around compound systems, vertical depth, and new consumer surfaces, with second-order effects on networks and edge.

Infrastructure 2.0: compound AI, retrieval, tools, and durable memory

Foundational models keep improving, but the advantage is shifting to systems that fuse retrieval, planning, tool use, and inference optimization, plus durable memory that goes beyond first-generation RAG. Startups like mem0, Zep, SuperMemory, and LangMem, alongside model vendors, are racing to make memory persistent and personalized. This favors telcos that can unify customer, device, and network state across time and expose it safely to agents executing service changes or resolving incidents.
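At its simplest, durable memory means agent-relevant facts persist across sessions and can be recalled per entity. The sketch below uses an SQLite table keyed by a hypothetical entity ID (customer, device, or site) and returns the most recent entries; it is an assumption-laden illustration, not how mem0, Zep, SuperMemory, or LangMem work, and production systems add embeddings, summarization, and access control.

```python
import sqlite3
import time
from typing import List, Optional


class MemoryStore:
    """Minimal persistent memory keyed by entity (hypothetical sketch)."""

    def __init__(self, path: str = ":memory:") -> None:
        # Pass a file path instead of ":memory:" to persist across restarts.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memory ("
            " entity_id TEXT, kind TEXT, content TEXT, ts REAL)"
        )

    def remember(self, entity_id: str, kind: str, content: str,
                 ts: Optional[float] = None) -> None:
        """Store one fact (e.g. network, billing, or device state) for an entity."""
        self.conn.execute(
            "INSERT INTO memory VALUES (?, ?, ?, ?)",
            (entity_id, kind, content, ts if ts is not None else time.time()),
        )
        self.conn.commit()

    def recall(self, entity_id: str, limit: int = 5) -> List[str]:
        """Return the most recent facts for an entity, newest first."""
        rows = self.conn.execute(
            "SELECT kind, content FROM memory WHERE entity_id = ? "
            "ORDER BY ts DESC LIMIT ?",
            (entity_id, limit),
        ).fetchall()
        return [f"[{kind}] {content}" for kind, content in rows]
```

Because customer, device, and network facts share one timeline per entity, an agent resolving an incident can pull billing and network history in a single recall instead of querying separate silos.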

Vertical AI with immediate ROI replaces traditional SaaS playbooks

Category winners are solving language-heavy, multimodal workflows in complex domains with immediate ROI. Healthcare exemplars (Abridge, Nabla, DeepScribe) show how documentation automation unlocks throughput and quality. Similar wedges exist in telecom: AI copilots for field techs, contract intelligence, dispute resolution, and spectrum planning. In consumer, voice-first interfaces are normalizing with platforms like Vapi, while AI-native search and browsing via Perplexity signal a shift in how users discover, shop, and book, opening new surfaces that CSPs and MVPDs can integrate for commerce and support.

Generative video will stress networks, CDNs, and ad models

Model quality across Google’s Veo 3, OpenAI’s Sora, Moonvalley’s stack, and early open entrants like Qwen is improving fast. 2026 looks like the commercialization window, from cinematic tools to real-time streaming and personalized content. Expect bursts of upstream and CDN traffic from synthetic media generation, new latency-sensitive workloads, and novel ad formats. Operators should model GPU-as-a-service at the metro edge, expand peering with media platforms, and refresh traffic engineering and QoE analytics for AI-generated video.

Strategy guide for operators, vendors, and investors

Speed is now a capability, not a metric; use it to pick where to build, buy, or partner.

Build, buy, or partner—decide fast for advantage

Assume an aggressive M&A cycle as incumbents buy AI capabilities. Identify targets with technical and data moats, embedded workflows, and MCP-ready integrations. For in-house builds, start with high-friction wedges in CX, assurance, or billing exceptions and expand from there. Partner where distribution beats invention, especially in vertical copilots that already show product-market fit.

2025 technical priorities: evals, MCP adapters, edge GPUs

Stand up private eval and lineage pipelines early. Normalize data for memory-aware agents and design guardrails for tool use. Prioritize MCP-based tool adapters for BSS/OSS and network APIs. Pilot agentic browsers for internal ops. Prepare for generative video by extending GPU capacity at edge locations and refining low-latency observability. Invest in security for agent actions, including RBAC, policy-as-code, and continuous approval flows.
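Guardrails for agent actions can be expressed as policy-as-code: the rules live in versioned data, and every tool call passes an authorization check before it runs. The sketch below combines role-based access control with a human-approval gate for high-risk actions; the roles, tool names, and risk tier are hypothetical examples, and a production setup would more likely use a dedicated policy engine.

```python
from dataclasses import dataclass
from typing import Dict, Set

# Policy-as-code: declared as data so it can be reviewed and versioned like code.
# Roles, tools, and the approval tier below are hypothetical examples.
POLICY: Dict[str, Set[str]] = {
    "care_agent": {"get_order_status", "issue_credit"},
    "noc_agent": {"get_order_status", "restart_cell_site"},
}
REQUIRES_APPROVAL: Set[str] = {"issue_credit", "restart_cell_site"}


@dataclass
class Decision:
    allowed: bool
    needs_approval: bool
    reason: str


def authorize(role: str, tool: str, approved_by: str = "") -> Decision:
    """Check RBAC first, then require a named human approver for high-risk tools."""
    if tool not in POLICY.get(role, set()):
        return Decision(False, False, f"role {role!r} may not call {tool!r}")
    if tool in REQUIRES_APPROVAL and not approved_by:
        return Decision(False, True, f"{tool!r} requires human approval")
    return Decision(True, False, "ok")
```

Returning `needs_approval` separately from a hard denial lets the orchestrator route the action into an approval flow instead of failing it outright.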

Operating model and metrics for AI-era velocity

Calibrate for AI-era velocity: shorter implementation cycles, faster migrations, and more iterative releases. Track business-grounded outcomes (containment rates, net revenue lift, time-to-value) over proxy model scores. Aim to collapse switching costs for your customers before competitors do, and build context and memory as durable moats. The winners will blend agentic automation with human judgment and move before the M&A wave sets the market structure.
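The business-grounded outcomes above are simple ratios once the events are logged. The sketch below assumes common working definitions: containment as the share of interactions closed without a human handoff, net revenue lift as the relative difference between a treated and a control group, and time-to-value as days from signature to first measurable outcome. These definitions and function names are illustrative, not a standard.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Interaction:
    resolved_by_ai: bool  # True if closed without a human handoff


def containment_rate(interactions: List[Interaction]) -> float:
    """Share of interactions resolved end-to-end by automation."""
    if not interactions:
        return 0.0
    return sum(i.resolved_by_ai for i in interactions) / len(interactions)


def net_revenue_lift(treated: float, control: float) -> float:
    """Relative revenue difference of the AI-assisted group versus a control group."""
    if control == 0:
        raise ValueError("control revenue must be non-zero")
    return (treated - control) / control


def time_to_value_days(contract_signed: date, first_value: date) -> int:
    """Days from signature to first measurable outcome (e.g. first automated resolution)."""
    return (first_value - contract_signed).days
```

Comparing a containment rate with and without the agent, or a treated and control cohort, keeps attention on outcomes rather than on proxy model scores.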