AI Traffic Growth and Network Readiness: Key Findings

A new Ciena and Heavy Reading study signals that AI will become a primary source of metro and long-haul traffic within three years, while most optical networks remain only partially prepared.

Why Now: AI Workflows Stress Transport, Latency, Automation

AI training and inference are shifting from contained data center domains to distributed, edge-to-core workflows that stress transport capacity, latency, and automation end-to-end. The survey of 77 global CSPs, fielded in February 2025, shows rising near-term demand: 18% expect AI to represent more than half of metro traffic, and 49% expect AI to exceed 30% of metro traffic within three years. Expectations are even higher for long-haul: 52% see AI surpassing 30% of traffic, and 29% expect AI to account for more than half. Yet only 16% of respondents rate their optical networks as very ready for AI workloads, underscoring an execution gap that will shape capex priorities, service roadmaps, and partnership models through 2027.

Strategy: Product + Photonics + Automation to Lower Cost per Bit

CSPs view AI connectivity as a growth vector centered on managed high-bandwidth wavelengths (100G/400G/800G), with enterprise demand outpacing hyperscalers. The winners will align product, photonics, and operations to deliver predictable capacity at lower cost per bit, while using automation and APIs to make transport consumable by AI application owners.

AI Traffic Patterns: Metro Demand and Long‑Haul Surge

AI traffic growth will be uneven across the network, with distinct drivers in metro and core domains.

Metro: Fast‑Rising AI Share; DWDM, IPoDWDM, Low‑Latency Routing

In metro networks, AI is additive to existing heavyweights like video, web, and IoT, yet its share is set to climb quickly as enterprises operationalize generative AI, computer vision, and real-time analytics. Edge inference, data preprocessing, and retrieval-augmented generation create short-hop and east-west flows within and between metro data centers. This pattern favors dense wavelength-division multiplexing (DWDM) in metro DCI, coherent pluggables in routers (IPoDWDM), and latency-aware routing to hit SLA targets without exploding costs.

Long‑Haul: Training, Replication Drive 400G/800G and C+L Expansion

Across the core, AI training, data replication, and model synchronization drive large, sustained flows between regions and availability zones. This is where 400G and 800G wavelengths, C- and L-band expansion, and ROADMs capable of flexible grid operation matter most. Expect more alien-wavelength scenarios as clouds, wholesale carriers, and cable operators stitch together capacity with multi-vendor coherent optics. The result is a premium on photonic line system modernization and automation to maximize spectrum utilization and operational agility.

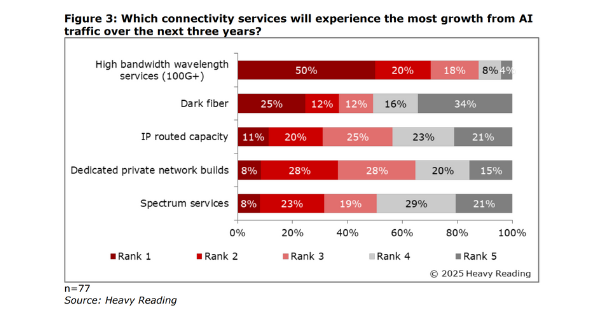

Monetization: Managed 100G/400G/800G Wavelengths vs Dark Fiber

CSPs expect AI to favor service-based consumption models over raw infrastructure leasing.

High-bandwidth wavelength services lead growth

Half of respondents rank managed high-bandwidth wavelengths as the top service segment tied to AI over the next three years, far ahead of dark fiber, which only a quarter expect to grow the most. This aligns with enterprise needs for guaranteed throughput, latency, and availability without managing optics, amplifiers, or photonic impairments. Offers at 100G, 400G, and 800G with clear SLAs, dynamic bandwidth options, and predictable pricing will resonate as enterprises scale AI pipelines.

Enterprise demand edges out hyperscalers

Seventy-four percent of CSPs expect enterprise customers to drive the largest traffic growth on their networks, ahead of hyperscalers and cloud providers. That tilt reflects the uptake of AI in sectors like financial services, healthcare, manufacturing, and media, where compliance, data gravity, and deterministic performance requirements favor private connectivity. CSPs should bundle wavelength services with cloud on-ramps (e.g., for AWS, Microsoft Azure, and Google Cloud), hosted colocation, and managed encryption to capture more of the enterprise AI stack.

Readiness Gap: Capex, Strategy, and Operations

Investment constraints and operating complexity are slowing AI-era optical upgrades, even as demand accelerates.

Top hurdles: capex, go-to-market, and network management

Capex constraints (38%) and go-to-market/business strategy challenges (38%) are tied as the most common barriers, followed by network management complexity (32%). On readiness itself, 39% of operators say they are ready in parts of the network but need further upgrades, 40% are somewhat ready, and 5% are not ready at all. The takeaway: readiness is uneven and is often blocked by unclear portfolios and immature operational tooling, not just by optics.

Technology priorities to close the gap

Execution should focus on scaling capacity and simplifying operations. Key actions include upgrading to modern ROADMs with flexible grid and C+L band support; adopting 400G/800G coherent across metro DCI and long-haul; leveraging 400ZR/OpenZR+ pluggables to collapse the boundary between routers and optical transport; and embracing IPoDWDM where it reduces cost and power. Operators should also plan for the step to 1.2T–1.6T-class coherent line cards as they become economic, aligned with vendor roadmaps from Ciena, Nokia, Infinera, Cisco/Acacia, and others. Automation is essential: streaming telemetry, optical performance analytics, and closed-loop control across L0–L3 will mitigate operational risk as channel counts and service velocity increase.

Operator Playbook: 12–24 Month Actions for AI Connectivity

CSPs can monetize AI-driven demand while de-risking spend through targeted network and portfolio moves.

Prioritize metro DCI and AI corridors

Target the top ten metro clusters and inter-regional corridors where AI workloads concentrate, and pre-provision spectrum and 400G/800G wavelengths tied to enterprise and cloud demand signals. Use flexible term plans and usage-based tiers to hedge traffic uncertainty, and design for fast turn-up using standardized service templates.
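As a rough illustration of the pre-provisioning arithmetic, the sketch below estimates how many 400G or 800G wavelengths a forecast would need if no wavelength is planned above a chosen fill level. The function name, the 70% fill target, and the corridor forecasts are hypothetical values chosen for the example; they do not come from the survey.

```python
import math

def wavelengths_needed(demand_gbps: float, rate_gbps: int, max_fill: float = 0.7) -> int:
    """Wavelengths of one line rate needed so that planned utilization
    per wavelength stays at or below max_fill (an assumed planning target)."""
    if demand_gbps < 0 or rate_gbps <= 0 or not 0 < max_fill <= 1:
        raise ValueError("invalid planning input")
    return math.ceil(demand_gbps / (rate_gbps * max_fill))

# Hypothetical three-year forecasts in Gb/s (illustrative numbers only).
forecast = {"metro-cluster-A": 1500, "corridor-A-B": 4000}

# Wavelength count per corridor for each candidate line rate.
plan = {
    name: {rate: wavelengths_needed(gbps, rate) for rate in (400, 800)}
    for name, gbps in forecast.items()
}

for name, options in plan.items():
    print(name, options)
```

Comparing the 400G and 800G counts side by side gives a first view of how much spectrum and how many ports each option would consume before pricing or term plans are layered on top.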

Automate for SLA assurance

Deploy multi-layer path computation and traffic engineering (e.g., SR-MPLS/SRv6 TE) integrated with optical controllers and a PCE for deterministic latency and protection. Implement service automation via MEF LSO APIs and align with TM Forum Autonomous Networks practices for closed-loop assurance. Expose performance telemetry via portals and APIs so enterprises and cloud partners can embed transport metrics into AI workload orchestration.
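The core idea behind latency-aware path computation can be sketched in a few lines: prune links that cannot carry the requested bandwidth, then run a shortest-path search on latency. This is a simplified stand-in for what a PCE does, not a description of any vendor's controller; the topology, node names, and latency and capacity figures are hypothetical.

```python
import heapq

def lowest_latency_path(links, src, dst, min_free_gbps):
    """Dijkstra on latency over links with enough free capacity.

    links: iterable of (node_a, node_b, latency_us, free_gbps), bidirectional.
    Returns (total_latency_us, [nodes]) or None if no feasible path exists.
    """
    graph = {}
    for a, b, latency_us, free_gbps in links:
        if free_gbps >= min_free_gbps:  # capacity constraint: prune thin links
            graph.setdefault(a, []).append((b, latency_us))
            graph.setdefault(b, []).append((a, latency_us))

    best = {src: 0}
    heap = [(0, src, [src])]
    while heap:
        latency, node, path = heapq.heappop(heap)
        if node == dst:
            return latency, path
        if latency > best.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, hop_us in graph.get(node, []):
            cand = latency + hop_us
            if cand < best.get(nxt, float("inf")):
                best[nxt] = cand
                heapq.heappush(heap, (cand, nxt, path + [nxt]))
    return None

# Hypothetical metro topology: (a, b, one-way latency in microseconds, free Gb/s).
links = [
    ("DC1", "POP1", 400, 800),
    ("POP1", "DC2", 500, 100),
    ("DC1", "POP2", 700, 800),
    ("POP2", "DC2", 600, 800),
]

print(lowest_latency_path(links, "DC1", "DC2", min_free_gbps=100))
print(lowest_latency_path(links, "DC1", "DC2", min_free_gbps=400))
```

In this toy topology a 100G request can take the shorter route through POP1, while a 400G request is steered through POP2 because the POP1 to DC2 link lacks free capacity. A production system would add protection paths, shared-risk groups, and optical-layer constraints on top of this.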

Align the go-to-market with vertical needs

Package managed wavelengths with cloud interconnect, encryption, and edge colocation as AI-ready connectivity, tailored to regulated verticals. Offer rapid trial-to-production pathways, including short-duration high-capacity bursts for training runs, and co-sell with integrators and GPU/AI infrastructure partners. Consider NaaS commercial models to reduce upfront commitments while protecting margins with clear SLA tiers.

What to Watch: Optics Roadmap, Standards, and Constraints

Technology roadmaps and policy headwinds will influence timing and economics for AI-era transport.

Coherent optics and pluggable evolution

Expect broader availability of 800G coherent solutions and early steps toward 1.2T–1.6T wavelengths, alongside ongoing work in OIF and OpenZR+ communities on higher-rate pluggables. Supply chain and power envelopes for high-speed optics will be critical to plan cycles and operational budgets.

Data center architectures spilling into transport

As AI fabrics push to 800G and beyond inside data centers, DCI requirements for loss, jitter, and latency will tighten, increasing the value of integrated IP-optical planning and segment routing. Look for closer alignment between cloud routing policies and carrier transport SLAs, including traffic class differentiation for training versus inference.

Sustainability, spectrum, and permitting

Energy per bit targets and site power constraints will shape platform choices, while fiber scarcity and permitting lead times may limit dark fiber options in some markets. Maximizing existing spectrum with better modulation, C+L band, and automation will often deliver faster ROI than greenfield builds.
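The spectrum argument comes down to simple arithmetic: per-fiber capacity is roughly the number of channels that fit in the usable spectrum multiplied by the rate each channel carries. The sketch below uses round, assumed figures (about 4,800 GHz of usable spectrum per band, 150 GHz per channel) purely for illustration; actual values depend on the line system, baud rate, modulation, and reach.

```python
def fiber_capacity_tbps(spectrum_ghz: int, channel_ghz: int, rate_gbps: int) -> float:
    """Approximate per-fiber capacity: whole channels that fit, times rate per channel."""
    channels = spectrum_ghz // channel_ghz
    return channels * rate_gbps / 1000

# Illustrative assumptions, not vendor specifications.
C_BAND_GHZ = 4800
L_BAND_GHZ = 4800
CHANNEL_GHZ = 150

c_only_400g = fiber_capacity_tbps(C_BAND_GHZ, CHANNEL_GHZ, 400)
c_only_800g = fiber_capacity_tbps(C_BAND_GHZ, CHANNEL_GHZ, 800)  # higher rate, same slot
c_plus_l_800g = fiber_capacity_tbps(C_BAND_GHZ + L_BAND_GHZ, CHANNEL_GHZ, 800)

print(c_only_400g, c_only_800g, c_plus_l_800g)
```

Under these assumptions, carrying more bits in the same channel width and adding the L band each double capacity on fiber that is already in the ground, which is the basis for the ROI comparison against new builds.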

The bottom line: AI traffic is arriving faster than many transport networks can accommodate, and CSPs that pair coherent upgrades with automation and enterprise-focused offers will be best positioned to capture growth while keeping unit economics in check.