US AI concerns over jobs, politics, and social costs

Fresh polling signals rising public concern that AI could upend employment, destabilize politics, and strain social and energy systems.

AI job displacement ranks among top public fears

A recent Reuters/Ipsos survey of 4,446 U.S. adults found that 71% worry AI will permanently displace too many workers. The sentiment aligns with Microsoft research that flags information-processing and communication roles, such as translation and customer service, as especially exposed to automation.

Tech leaders have not downplayed the risk. Executives at Anthropic (Dario Amodei), OpenAI (Sam Altman), and Amazon (Andy Jassy) have acknowledged that next-generation AI tools could replace significant portions of repetitive, digital work. Near-term labor effects remain uneven, but early signals include a tougher hiring market for some computer science graduates as employers recalibrate skill needs toward applied AI, MLOps, and AI governance.

AI-driven political manipulation and info integrity risks

Seventy-seven percent of respondents fear AI will fuel political instability if hostile actors exploit the technology. That concern is grounded in the rapid spread of deepfakes, synthetic voices, and AI-written narratives that can erode trust and amplify divisive content. OpenAI's recent threat reports have detailed state-linked operations using AI to generate persuasive posts around contentious policy topics, illustrating the low-cost, high-scale dynamics of influence campaigns.

This is now an operational risk for telecom and cloud providers that carry communications traffic. Voice cloning has already been weaponized in robocalls, prompting the FCC to clarify that AI-generated voices in unsolicited calls violate the Telephone Consumer Protection Act. Carriers and CPaaS providers will face mounting expectations to authenticate content, filter abuse, and support provenance signals end to end.

AI social impacts and data center energy footprint

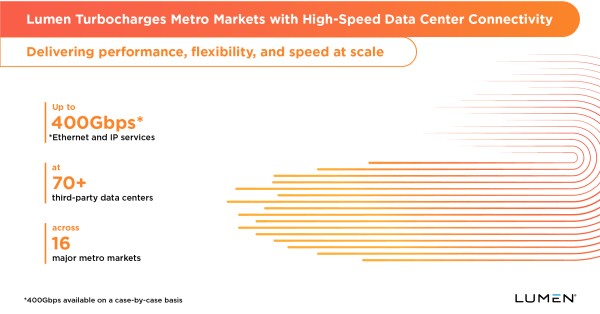

The poll also shows broad worry about AI's indirect costs: 66% are concerned about AI companions displacing human relationships, and 61% are concerned about the technology's energy footprint. Large-scale model training and inference are increasing data center power demand, with implications for siting, cooling, grid stability, and corporate climate targets. For telecom operators building edge compute and private AI services, power and sustainability constraints will shape capacity planning as much as spectrum or fiber.

Why AI risk perceptions matter for telecom, 5G, and enterprise

These perceptions will influence buying, regulation, and the social license for AI deployment across networks and customer touchpoints.

Contact center AI: augmentation, compliance, and CX gains

Customer service is squarely in AI's path. Generative AI copilots, virtual agents, and agent-assist tools can automate call summarization, next-best-action guidance, and routine interactions. That raises the productivity ceiling, but it also raises the stakes for workforce transition, quality control, and compliance. Operators and B2B providers that frame AI as augmentation (fewer transfers, better first-contact resolution, shorter handle times) rather than wholesale replacement will mitigate backlash and protect brand trust.

Telecom AIOps and intent-based, closed-loop automation

Across RAN, transport, and core, AIOps is moving from dashboards to actions. ETSI's ENI work, TM Forum's AIOps/OA initiatives, and intent-based frameworks point toward closed-loop change with human oversight. The workforce implication is job redesign, not just headcount reduction: NOC engineers shift toward policy curation, anomaly triage, and model evaluation. Reliability, safety, and auditability should be designed in from day one.
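
To make "closed-loop change with human oversight" concrete, here is a minimal Python sketch of an approval gate. It assumes a hypothetical `ProposedAction` record carrying a risk tier and a model confidence score; the tier names, the 0.9 threshold, and the action names are illustrative assumptions, not drawn from ETSI ENI or any TM Forum specification. Low-risk, high-confidence changes execute automatically, everything else is routed to an engineer, and every outcome is appended to an audit log.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProposedAction:
    """A change proposed by an AIOps model (hypothetical structure)."""
    name: str
    risk: str          # assumed tiers: "low", "medium", "high"
    confidence: float  # model confidence in [0, 1]


def closed_loop_step(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    audit_log: List[str],
    min_auto_confidence: float = 0.9,  # illustrative threshold, tune per network
) -> str:
    """Auto-execute, escalate for human approval, or reject one proposed action."""
    if action.risk == "low" and action.confidence >= min_auto_confidence:
        outcome = f"auto-executed: {action.name}"
    elif approve(action):  # human-in-the-loop decision, e.g. by a NOC engineer
        outcome = f"executed after approval: {action.name}"
    else:
        outcome = f"rejected by operator: {action.name}"
    audit_log.append(outcome)  # every decision is recorded for auditability
    return outcome


if __name__ == "__main__":
    log: List[str] = []
    actions = [
        ProposedAction("scale-out-upf", "low", 0.95),
        ProposedAction("reroute-core-traffic", "high", 0.97),
    ]
    for a in actions:
        # Stand-in for a real approval workflow: this operator rejects everything.
        closed_loop_step(a, approve=lambda act: False, audit_log=log)
    print(log)
```

The gate keys on both risk and confidence by design: a highly confident model still cannot push a high-risk change without a human, which is the "oversight" half of closed-loop automation.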

AI talent strategy and reskilling as a competitive edge

Reskilling pathways are essential. High-impact roles include conversation designers, knowledge managers, AI product owners, data stewards, red-teamers, and model risk specialists. Partnerships with vendors and universities can accelerate curricula on prompt engineering, RAG architectures, and AI evaluation. Tie these investments to measurable KPIs (customer satisfaction, MTTR, SLA adherence) to secure executive and board support.
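
Tying investments to KPIs only works if the KPIs are computed consistently. As a minimal sketch, the Python below derives MTTR (read here as mean time to restore, one common expansion) and SLA adherence from incident timestamps. The incident data and the one-hour target are made-up assumptions for illustration.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Incident = Tuple[datetime, datetime]  # (detected_at, restored_at)


def mttr(incidents: List[Incident]) -> timedelta:
    """Mean time to restore: average of (restored_at - detected_at)."""
    total = sum((end - start for start, end in incidents), timedelta())
    return total / len(incidents)


def sla_adherence(incidents: List[Incident], target: timedelta) -> float:
    """Fraction of incidents restored within the SLA target."""
    met = sum(1 for start, end in incidents if end - start <= target)
    return met / len(incidents)


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    # Hypothetical incidents lasting 30, 90, and 60 minutes.
    incidents = [
        (t0, t0 + timedelta(minutes=30)),
        (t0, t0 + timedelta(minutes=90)),
        (t0, t0 + timedelta(minutes=60)),
    ]
    print(mttr(incidents))  # 1:00:00
    print(sla_adherence(incidents, timedelta(hours=1)))
```

Tracking the same two numbers before and after a reskilling or tooling change gives the board a like-for-like comparison rather than anecdotes.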

AI strategy: actionable steps for the next two quarters

Address public concerns head-on while capturing AI's productivity gains in a controlled, governed way.

Lead with AI augmentation and measurable business outcomes

Start with agent-assist, knowledge search, and summarization before full automation. Run time-bound pilots with control groups, quantify impact, and publish internal scorecards. Conduct job impact assessments and craft transition plans that include redeployment, training, and incentives. Communicate early and often to employees and unions to maintain trust.
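
Quantifying a pilot against its control group can start simply. The Python sketch below compares one metric, such as average handle time in minutes, between a control group and an AI-assisted pilot group, reporting the difference in means, the relative change, and Welch's t-statistic as a rough check that the gap exceeds noise. The sample numbers are invented; a real evaluation would also report a p-value or confidence interval and control for confounders such as call mix.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Dict, List


def pilot_effect(control: List[float], pilot: List[float]) -> Dict[str, float]:
    """Compare a per-interaction metric between control and pilot groups.

    Assumes at least two observations per group and nonzero variance,
    so the sample standard deviations and standard error are defined.
    """
    m_c, m_p = mean(control), mean(pilot)
    # Welch's standard error: does not assume the two groups share a variance.
    se = sqrt(stdev(control) ** 2 / len(control) + stdev(pilot) ** 2 / len(pilot))
    return {
        "diff": m_p - m_c,                     # negative = lower in the pilot
        "relative_change": (m_p - m_c) / m_c,  # as a fraction of the control mean
        "welch_t": (m_p - m_c) / se,
    }


if __name__ == "__main__":
    # Hypothetical handle times (minutes) from a time-bound pilot.
    control_aht = [10, 12, 11, 13, 9]
    pilot_aht = [8, 9, 10, 8, 10]
    print(pilot_effect(control_aht, pilot_aht))
```

Publishing the relative change alongside the t-statistic in an internal scorecard keeps the headline number honest about how much of it could be chance.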

Strengthen AI governance, provenance (C2PA), and security

Adopt the NIST AI Risk Management Framework and align with ISO/IEC 23894 for risk controls. Standardize model evaluation, safety testing, and red-teaming; document lineage and change management. Implement content authenticity and provenance via the C2PA standard for outbound media, and instrument inbound verification where feasible. For voice and messaging, strengthen STIR/SHAKEN, spam filtering, LLM-powered fraud detection, and incident playbooks. Track regulatory exposure across the EU AI Act, FCC and FTC actions, and state AG enforcement.
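
For voice, one way to combine STIR/SHAKEN with fraud detection is a scoring policy that treats weaker attestation as extra risk. STIR/SHAKEN signs calls with attestation level A (full), B (partial), or C (gateway); everything else below is an assumption for illustration. The `fraud_score` is presumed to come from some upstream classifier (LLM-powered or otherwise), and the penalties and thresholds are hypothetical values an operator would tune, not figures from the FCC or any standard.

```python
from typing import Optional

# Hypothetical risk penalties by STIR/SHAKEN attestation level.
# A missing or unrecognized attestation is treated as the riskiest case.
ATTESTATION_PENALTY = {"A": 0.0, "B": 0.1, "C": 0.2}
UNVERIFIED_PENALTY = 0.3


def route_call(
    attestation: Optional[str],
    fraud_score: float,
    block_threshold: float = 0.9,
    label_threshold: float = 0.6,
) -> str:
    """Return "block", "label", or "deliver" for an inbound call.

    fraud_score is assumed to be in [0, 1] from an upstream fraud model.
    """
    penalty = ATTESTATION_PENALTY.get(attestation, UNVERIFIED_PENALTY)
    risk = min(1.0, fraud_score + penalty)
    if risk >= block_threshold:
        return "block"
    if risk >= label_threshold:
        return "label"  # e.g. deliver with a "spam likely" caller-ID tag
    return "deliver"


if __name__ == "__main__":
    for att, score in [("A", 0.2), ("C", 0.5), (None, 0.65)]:
        print(att, score, route_call(att, score))
```

Labeling the middle band rather than blocking it is a deliberate choice here: it limits the cost of false positives on legitimate but weakly attested calls, such as those entering through gateways.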

Plan AI power, placement, and total cost of ownership

Model AI workloads' power draw across training and inference. Improve PUE with advanced cooling, and match scheduling and hardware choices (GPUs, other accelerators, or CPUs) to each workload's latency and cost profile. Use edge inference selectively to reduce backhaul and improve customer experience, while consolidating heavy training in efficient regions or through hyperscale partnerships. Lock in renewable PPAs where possible, quantify scope 2 impacts, and explore heat reuse with municipal partners.
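
Power modeling can start from the definition of PUE: total facility energy divided by IT equipment energy, so facility energy equals IT energy times PUE. The Python sketch below turns an average IT load into annual facility energy and a location-based scope 2 estimate (energy times a grid emission factor). The 500 kW load, the two PUE values, and the 0.4 kg CO2e/kWh grid factor are placeholder assumptions, not measured figures.

```python
HOURS_PER_YEAR = 8760


def annual_facility_mwh(avg_it_load_kw: float, pue: float) -> float:
    """Annual facility energy (MWh) = average IT load x hours x PUE."""
    it_kwh = avg_it_load_kw * HOURS_PER_YEAR
    return it_kwh * pue / 1000  # kWh -> MWh


def scope2_tonnes(facility_mwh: float, grid_kg_per_kwh: float) -> float:
    """Location-based scope 2 emissions in tonnes CO2e.

    kg/kWh is numerically equal to tonnes/MWh, so a direct product suffices.
    """
    return facility_mwh * grid_kg_per_kwh


if __name__ == "__main__":
    it_load_kw = 500   # hypothetical average draw of an AI inference cluster
    grid_factor = 0.4  # hypothetical grid emission factor, kg CO2e/kWh
    for pue in (1.5, 1.2):  # two hypothetical cooling efficiency levels
        mwh = annual_facility_mwh(it_load_kw, pue)
        print(f"PUE {pue}: {mwh:,.0f} MWh/yr, {scope2_tonnes(mwh, grid_factor):,.0f} t CO2e")
```

Under these assumed inputs, cutting PUE from 1.5 to 1.2 saves about 1,314 MWh per year without touching the IT load itself, which is why cooling upgrades belong in the same TCO model as hardware choices.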

AI watchlist: 12-month risks, adoption, and competition

Several inflection points will shape risk, adoption, and competitive positioning.

AI regulation and standards: deepfakes, labeling, telecom rules

Expect more guidance and enforcement around deepfakes, AI-labeled content, and robocalls. The EU AI Act's implementing rules will clarify obligations by risk class; in the U.S., regulators will lean on existing authorities while NIST refines testing and reporting practices. Provenance adoption via C2PA across major software, device, and cloud platforms will be a bellwether for trust infrastructure.

GenAI vendor roadmaps, alliances, and telecom stack choices

Watch how operators scale genAI with hyperscalers and ISVs, including contact center platforms from Genesys, NICE, and Cisco, and LLM services from OpenAI, Anthropic, Google, and open-model ecosystems. Evaluate integrations with telecom OSS/BSS (TM Forum ODA), data governance tools, and on-prem or edge accelerators. Favor vendors with transparent evals, clear TCO, and a strong safety posture.

Real-time AI threats will test defenses across channels

Election cycles and major events will spur synthetic media and voice fraud across channels. Monitor threat intel from cloud AI providers and national cybersecurity agencies, and run live-fire exercises across voice, messaging, and social entry points. Measure detection precision and response times, not just model accuracy, to ensure operational resilience.
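
Measuring detection precision and response time, rather than model accuracy alone, can be as simple as scoring each exercise event. In the Python sketch below, each event is assumed to record whether the system flagged it and whether it was actually malicious (known in a live-fire exercise because the red team injected it); precision, recall, and median response time are derived from those records. The event data are invented for illustration.

```python
from statistics import median
from typing import Dict, List, Tuple

Event = Tuple[bool, bool]  # (flagged_by_detector, actually_malicious)


def detection_metrics(events: List[Event], response_minutes: List[float]) -> Dict[str, float]:
    """Precision, recall, and median time-to-respond for one exercise."""
    tp = sum(1 for flagged, bad in events if flagged and bad)
    fp = sum(1 for flagged, bad in events if flagged and not bad)
    fn = sum(1 for flagged, bad in events if not flagged and bad)
    return {
        # Precision: share of alerts that were real; low precision burns analyst time.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Recall: share of injected attacks that were caught.
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Median resists skew from a single very slow response.
        "median_response_min": median(response_minutes) if response_minutes else float("nan"),
    }


if __name__ == "__main__":
    events = [(True, True), (True, False), (True, True), (False, True), (False, False)]
    print(detection_metrics(events, response_minutes=[5, 12, 30]))
```

Running the same scoring across voice, messaging, and social entry points makes it easy to see which channel is the weak link.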

Bottom line: Public concern is high, and that increases the cost of missteps. Telecom and enterprise tech leaders who combine augmentation-first deployments, rigorous governance, and credible energy strategies will capture AI's upside while maintaining trust with customers, employees, and regulators.