As the global economy accelerates into the AI-driven era, the massive scale of data generated, processed, and exchanged by AI platforms is introducing new demands, and new opportunities, for telecom providers. From generative AI models producing dynamic content to agentic AI systems orchestrating complex decisions autonomously, the connective tissue holding this ecosystem together is the network. Telecom service providers now stand at a crossroads: evolve and monetize this new AI-driven traffic, or risk being left behind as digital intermediaries without a stake in the growing value chain.

This article explores how communications infrastructure operators can embrace AI connectivity as a core business model, shift from legacy paradigms, and capture value through innovative services, dynamic network architectures, and modern monetization strategies.

Monetizing AI Connectivity: Why High-Performance Networks Are Foundational

AI is fundamentally a data business. Whether in training large models or delivering real-time inference, AI workloads require constant, efficient movement of vast datasets. That means connectivity—especially high-performance, secure, low-latency connectivity—is central to AI’s success.

The AI boom is evident in the unprecedented capital expenditures of hyperscale cloud providers. In 2025 alone, companies like Alphabet, Amazon, Microsoft, Meta, and Oracle are collectively investing over $335 billion in AI infrastructure, with year-over-year increases ranging from 20% to 100%. These investments signal rapidly growing demand for compute, storage, and, critically, connectivity.

Telecom operators have historically focused on voice and broadband, but AI workloads open a new domain: high-value, application-specific data transport. This domain includes direct enterprise-to-cloud AI connections, inter-cloud model-to-model traffic, and localized AI interactions at the edge.

The challenge is that most existing networks were not built with these use cases in mind.

Understanding AI Workloads and Their Demands

AI workloads differ substantially from conventional digital traffic. They encompass training, inference, generative, and agentic processes—all requiring varying bandwidth, latency, and resource orchestration. While training tasks are typically data-intensive and centralized, inference is distributed, dynamic, and increasingly real-time.

For example:

- Inference workloads include chatbot responses, fraud detection, and video surveillance interpretation.

- Generative AI creates synthetic content and drives customer interactions.

- Agentic AI, an emerging class, manages tasks independently and can multiply the volume of required network interactions.

Crucially, agentic AI could produce three to five times more workloads than generative AI, making it a potentially explosive driver of network demand. As AI applications evolve from static chatbots to autonomous agents managing infrastructure or customer journeys, the implications for telecoms are profound.

Creating Infrastructure for AI Connectivity

Delivering connectivity for AI workloads requires an architectural shift from static provisioning to autonomous, intelligent networks. Modern networks must become dynamic platforms that support:

- Real-time traffic routing and optimization

- Service chaining and orchestration for AI applications

- Low-latency, high-throughput performance

- Edge compute integration for localized AI processing

Autonomous networks (ANs) are key enablers here. At Level 4 autonomy, networks can self-optimize across domains, allocate resources based on AI-driven intent, and respond dynamically to changing workload demands. This is a significant evolution from the traditionally over-provisioned, single-purpose networks used today.

Enterprises are also showing interest in hybrid architectures where local AI models work alongside cloud-based platforms. This helps mitigate latency and cost concerns while improving data sovereignty and resilience.

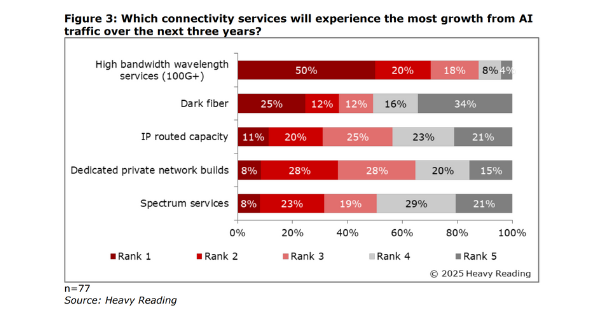

Addressing the Market Opportunity

According to forecasts, AI-enriched interactions will drive an estimated 1,226 exabytes of network traffic per month by 2030, almost 20 times the 2023 volume. This traffic is expected to account for over 60% of global data traffic.
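
To put those forecast figures in perspective, the short calculation below works backward from them. It is a rough sketch that treats "almost 20 times" as exactly 20 and "over 60%" as exactly 60%, so the results are approximations, not additional forecast data.

```python
# Figures quoted in the forecast above
traffic_2030_eb = 1226.0   # exabytes per month of AI-enriched traffic in 2030
growth_multiple = 20.0     # "almost 20 times" 2023, treated as exactly 20 (assumption)
share_of_total = 0.60      # "over 60%" of global traffic, treated as 60% (assumption)
years = 2030 - 2023

# Work backward to the 2023 baseline and the implied yearly growth rate
implied_2023_eb = traffic_2030_eb / growth_multiple
implied_cagr = growth_multiple ** (1 / years) - 1

# If AI traffic is at least 60% of the total, the total is at most this value
implied_total_2030_eb = traffic_2030_eb / share_of_total

print(f"Implied 2023 AI traffic: ~{implied_2023_eb:.0f} EB/month")
print(f"Implied compound annual growth: ~{implied_cagr:.0%}")
print(f"Implied ceiling on total 2030 traffic: ~{implied_total_2030_eb:.0f} EB/month")
```

Under these assumptions, the 2023 baseline comes to roughly 61 exabytes per month, and reaching the 2030 figure implies traffic growing by a little over 50% every year for seven years.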

Initially, much of this traffic will come from video and image-based applications—AI’s most data-intensive activities today. Over time, more sophisticated use cases will emerge, including real-time collaboration between autonomous systems and smart infrastructure.

Telecom operators thus have a vast addressable market, both in transporting this data and in offering value-added services. But to access this revenue, operators must provide more than connectivity: they must build quality of service, redundancy, determinism, and assured performance into their offers.

Strategies for Monetizing AI Connectivity: From Tokens to Outcomes

Traditional telecom billing models won’t suffice for AI. Enterprises expect cloud-like transparency, elasticity, and control. To thrive, operators must adopt new strategies:

1. Metering AI Workloads

Metering must evolve to include AI-relevant metrics such as:

- Tokens per bit (tokens are the units in which AI models process data)

- Power consumption per workload

- Data latency and jitter metrics

A new model might look like “bits per token per watt per dollar,” reflecting the full cost of transmitting and processing AI traffic, including energy use.
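
One literal reading of "bits per token per watt per dollar" is sketched below. The article does not define the formula, so the choice to divide bits by tokens, watts, and dollars in turn is an assumption, as are the `WorkloadSample` fields and the sample values.

```python
from dataclasses import dataclass


@dataclass
class WorkloadSample:
    """One metered AI workload. All field names are hypothetical."""
    bits_transferred: float   # bits moved across the network
    tokens_processed: float   # tokens consumed or produced by the model
    avg_power_watts: float    # average power attributed to the workload
    cost_dollars: float       # total cost attributed to the workload


def bits_per_token_per_watt_per_dollar(s: WorkloadSample) -> float:
    """Divide bits by tokens, watts, and dollars (a literal reading of the phrase)."""
    denominator = s.tokens_processed * s.avg_power_watts * s.cost_dollars
    if denominator <= 0:
        raise ValueError("tokens, watts, and dollars must all be positive")
    return s.bits_transferred / denominator


# Illustrative values only, not measurements
sample = WorkloadSample(
    bits_transferred=8e9,     # 1 GB of traffic
    tokens_processed=2e6,
    avg_power_watts=400.0,
    cost_dollars=5.0,
)
metric = bits_per_token_per_watt_per_dollar(sample)
print(metric)
```

A single composite number like this is easy to compare across workloads, but it hides which factor changed, so an operator would likely report the component metrics alongside it.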

2. Usage-Based Billing

AI application developers and enterprises are accustomed to usage-based billing for compute and storage. Telecom services must follow suit with:

- Real-time visibility into usage

- API-based access to metering data

- Self-service dashboards for cost control
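
As a minimal sketch of what usage-based billing could look like in practice, the example below turns metering records into per-customer charges. The `UsageRecord` fields, service class names, and per-gigabyte rates are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One metering record. Field names are hypothetical."""
    customer_id: str
    gigabytes: float
    service_class: str  # "best_effort" or "low_latency" in this sketch


# Hypothetical price list in dollars per gigabyte
RATES_PER_GB = {"best_effort": 0.02, "low_latency": 0.08}


def bill_by_customer(records):
    """Sum usage charges per customer, rounded to cents."""
    totals = defaultdict(float)
    for r in records:
        totals[r.customer_id] += r.gigabytes * RATES_PER_GB[r.service_class]
    return {cid: round(amount, 2) for cid, amount in totals.items()}


records = [
    UsageRecord("acme", 1000.0, "best_effort"),
    UsageRecord("acme", 250.0, "low_latency"),
    UsageRecord("globex", 100.0, "low_latency"),
]
invoices = bill_by_customer(records)
print(invoices)
```

The same aggregation could back an API or dashboard, since it works on whatever records have arrived so far instead of waiting for a monthly billing run.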

3. Outcome-Based Pricing

Instead of charging per connection, some customers may prefer pricing tied to business outcomes (e.g., ensuring real-time object detection in surveillance or accurate real-time translation). Operators can bundle SLA-driven connectivity with AI model integration for specific verticals such as finance, manufacturing, and healthcare.
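
One way to tie price to an outcome is to link the monthly fee to a measured service-level target. The sketch below assumes a 95th-percentile latency target and a flat service credit when it is missed. The target, fee, credit rate, and latency samples are invented for illustration and are not drawn from any operator's contract.

```python
import math


def p95(samples_ms):
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples_ms)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def monthly_charge(samples_ms, target_ms, base_fee, credit_rate):
    """Full fee when the p95 latency target is met, otherwise a discounted fee."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    met = p95(samples_ms) <= target_ms
    return base_fee if met else base_fee * (1 - credit_rate)


# Hypothetical latency measurements in milliseconds
good_month = [12, 14, 15, 13, 16, 14, 15, 13, 12, 18]
bad_month = [12, 14, 15, 13, 16, 14, 45, 13, 12, 60]

charge_good = monthly_charge(good_month, target_ms=20, base_fee=10_000, credit_rate=0.25)
charge_bad = monthly_charge(bad_month, target_ms=20, base_fee=10_000, credit_rate=0.25)
print(charge_good, charge_bad)
```

A real outcome-based contract would probably measure the business result itself, such as detection accuracy or translation quality. Latency stands in here because it is something the network can measure directly.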

4. Security and Compliance as a Premium

AI workloads often involve sensitive data. Telecoms can offer premium services with enhanced security, token-aware content filtering, and compliance features such as data residency enforcement or regulated routing.

5. AI Firewalls and Egress Points

As enterprises shift to local-plus-cloud hybrid models, the network edge becomes critical. Telecoms can monetize control points like ingress/egress filters that:

- Monitor and meter AI token usage

- Enforce traffic policies

- Manage hybrid AI integration across platforms
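
The control-point idea can be sketched as a small gateway that checks each outbound AI request against a tenant policy and meters the tokens it lets through. Everything here is hypothetical: the `EgressGateway` and `EgressPolicy` classes, the decision strings, and the example hostnames are illustrative and do not describe a real product interface.

```python
from dataclasses import dataclass, field


@dataclass
class EgressPolicy:
    """Per-tenant traffic policy (hypothetical fields)."""
    allowed_destinations: set
    monthly_token_budget: int


@dataclass
class EgressGateway:
    """Toy egress point that enforces policy and meters token usage."""
    policies: dict                                   # tenant -> EgressPolicy
    tokens_used: dict = field(default_factory=dict)  # tenant -> tokens metered

    def handle(self, tenant, destination, token_count):
        policy = self.policies.get(tenant)
        if policy is None:
            return "deny: unknown tenant"
        if destination not in policy.allowed_destinations:
            return "deny: destination not allowed"
        used = self.tokens_used.get(tenant, 0)
        if used + token_count > policy.monthly_token_budget:
            return "deny: token budget exceeded"
        # Only allowed requests count toward the metered total
        self.tokens_used[tenant] = used + token_count
        return "allow"


gateway = EgressGateway(
    policies={"acme": EgressPolicy({"cloud-llm.example"}, 1000)}
)
decisions = [
    gateway.handle("acme", "cloud-llm.example", 600),   # within budget
    gateway.handle("acme", "other-llm.example", 10),    # not on the allow list
    gateway.handle("acme", "cloud-llm.example", 500),   # would exceed the budget
]
print(decisions, gateway.tokens_used)
```

Because the gateway sees every request, the same counters that enforce the budget can also feed the metering and billing described earlier.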

The Role of Local Models and Edge Infrastructure

Cost and resilience concerns are driving interest in localized AI processing. Running smaller models on-premises or at the network edge allows businesses to:

- Reduce dependency on expensive cloud tokens

- Maintain functionality even during connectivity issues

- Limit exposure of proprietary data

Telecom operators are well-positioned to offer edge AI hosting as a managed service, bundling connectivity with local model inferencing, maintenance, and integration. This can also reduce the overall data transport volume and cost.
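
The three motivations listed above map naturally onto a routing rule for a hybrid deployment. The function below is a simplified sketch: the rule order, the `local_token_limit` threshold, and the parameter names are assumptions made for illustration, and a production router would weigh many more factors.

```python
def choose_target(contains_sensitive_data, estimated_tokens, link_up,
                  local_token_limit=2000):
    """Pick "local" or "cloud" for one inference request (illustrative rules)."""
    if not link_up:
        return "local"   # keep working during connectivity issues
    if contains_sensitive_data:
        return "local"   # limit exposure of proprietary data
    if estimated_tokens <= local_token_limit:
        return "local"   # avoid paying for cloud tokens on small jobs
    return "cloud"       # large, non-sensitive jobs go to the bigger model


routes = [
    choose_target(False, 500, True),     # small job
    choose_target(True, 50_000, True),   # sensitive data
    choose_target(False, 50_000, False), # link is down
    choose_target(False, 50_000, True),  # large, non-sensitive, link is up
]
print(routes)
```

Only the last request leaves the premises, which is the sense in which local inferencing can reduce transport volume and cost.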

Challenges in Monetizing AI Connectivity: Overcoming Legacy Systems and Silos

Despite the clear opportunity, many telecom providers face structural hurdles:

- Legacy systems often lack the agility to support API-based, granular metering and billing.

- Organizational inertia may hinder transformation toward developer-centric, cloud-native operations.

- Vendor silos in automation can limit the interoperability required for dynamic AI network management.

To overcome these barriers, operators need to modernize business support systems (BSS), consolidate automation platforms, and adopt open architectures that support cross-domain orchestration.

Strategic Playbook for Monetizing AI Connectivity in Telecom

To monetize the AI connectivity opportunity, telecom operators must:

- Elevate Network Value – Market connectivity not just as a utility but as a critical enabler of AI success.

- Modernize BSS and OSS – Build support for real-time, usage-based metering and outcome-based billing across business and operations support systems.

- Invest in Autonomous Networking – Achieve Level 4 autonomy for dynamic provisioning and closed-loop optimization.

- Create Edge and Local AI Solutions – Offer localized inferencing, hybrid model hosting, and integration services.

- Develop AI-centric SLAs – Tailor offerings to verticals with guarantees on performance, latency, and security.

- Support Developer Experience – Provide APIs, portals, and tools that attract AI application developers.

- Ensure Compliance and Control – Embed features that support data sovereignty, auditing, and model observability.

Conclusion

AI is redefining the digital value chain, and telecom networks are its circulatory system. The AI connectivity opportunity represents a major growth lever for telecom providers, capable of reversing flat revenue curves and positioning them as key players in the emerging AI economy.

However, capturing this opportunity requires a decisive pivot—from fixed connectivity provisioning to intelligent, programmable, and monetizable network platforms tailored for AI workloads. Those who move early and decisively will not only enable AI innovation but also carve out a lucrative role in the global data economy.

The AI revolution needs a network that’s as smart, dynamic, and responsive as the models it serves. The time to build it—and monetize it—is now.