Qubrid AI, a leader in enterprise AI solutions, today announced a major update to its AI GPU Cloud Platform (V3), along with a robust roadmap featuring App Studio and the forthcoming Agentic Workbench, a transformative toolkit designed to simplify the creation and management of intelligent AI agents.

These updates reinforce Qubrid AI’s mission to democratize AI by enabling faster, smarter, and more cost-effective AI development for enterprises, researchers, and developers. Users can access the new platform at https://platform.qubrid.com.

New Capabilities in AI GPU Cloud Platform V3:

- New UI: A redesigned interface delivers an intuitive and seamless user experience, simplifying navigation and improving workflow management.

- Model Tuning Page Optimization: Now directly accessible, this page lets users select base models, upload datasets (CSV), configure parameters, and fine-tune models, all in a few clicks.

- Chat History in RAG UI: Enhances the Retrieval-Augmented Generation (RAG) experience by displaying past chat interactions, which is critical for debugging and context-aware improvements.

- Auto Stop for Hugging Face Deployments: Enables users to automatically shut down deployed model containers after a defined duration, improving GPU utilization and reducing cost.

What’s Coming Next:

- App Studio: A powerful, user-friendly workspace to design, prototype, and launch AI-powered applications within the Qubrid AI ecosystem.

- Agentic Workbench: A toolkit purpose-built for developing and scaling AI agents that can autonomously perform tasks, make decisions, and adapt over time.

- Data Provider Integration: Native integration with popular industry data provider solutions, allowing easy access to proprietary data from GPU compute resources.

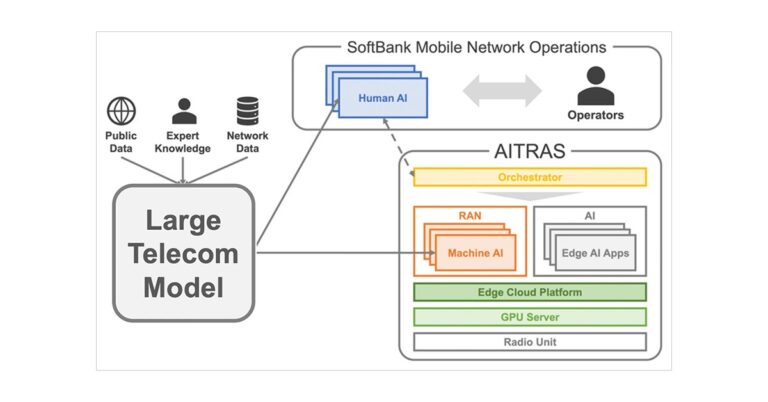

Empowering AI Innovation with Open Cloud Architecture

Qubrid AI’s Open Cloud architecture provides unmatched flexibility for business users, product managers, AI researchers, and data scientists. By supporting popular open-source AI models and offering compatibility with Jupyter notebooks, the platform allows users to bring their own models, tools, and workflows while still benefiting from Qubrid’s high-performance GPU infrastructure.

No-Code Platform for Rapid AI Development

Qubrid AI’s no-code environment empowers both technical and non-technical users to build, train, and deploy models without writing a single line of code. With drag-and-drop interfaces, pre-configured templates, and guided workflows, users can go from idea to production-ready AI solutions in record time, dramatically shortening the AI innovation cycle.

A Message from Qubrid AI’s CTO

“Our mission is to make advanced AI accessible, scalable, and enterprise-ready,” said Ujjwal Rajbhandari, Chief Technology Officer at Qubrid AI. “With this latest cloud release, we’re not just improving the user experience; we’re giving businesses the power to operationalize AI faster and more intelligently. From smarter resource management to frictionless model tuning and future-ready agentic tooling, we’re building the foundation for enterprise AI at scale.”

About Qubrid AI

Qubrid AI is a leading enterprise artificial intelligence (AI) company that empowers AI developers and engineers to solve complex real-world problems through its advanced AI cloud platform and turnkey on-prem appliances. For more information, visit http://www.qubrid.com/

Media Contact: Crystal Bellin

Email: digital@qubrid.com