South Korea’s Sovereign AI Strategy: Why It Matters Now

South Korea is funding a national AI stack to reduce dependence on foreign models, protect data, and tune AI to its language and industries.

The government has committed ₩530 billion (about $390 million) to five companies building large-scale foundation models: LG AI Research, SK Telecom, Naver Cloud, NC AI, and Upstage. Progress will be reviewed every six months, with underperformers cut and resources concentrated on the strongest until two leaders remain. The policy goal is clear: build world-class, Korean-first AI capability that supports national security, economic competitiveness, and data sovereignty.

For telecoms and enterprise IT, this is a shift from “consume global models” to “operate domestic AI platforms” integrated with local data, compliance, and services. The winners will not necessarily be the largest models, but the ones that best fit real workloads, run inference efficiently, and deliver sector-specific outcomes.

The 5 Korean AI Contenders and Their Competitive Edge

Each company brings a different route to competitive parity: vertical data, full-stack control, network distribution, or cost-performance optimization.

LG Exaone 4.0: Data-Centric, Efficient, Industry-Tuned

LG’s Exaone 4.0 (32B) blends language understanding with stronger reasoning, advancing from the earlier Exaone Deep lineage. Its strategy hinges on curated industrial data—biotech, advanced materials, and manufacturing—paired with rigorous data refinement before training. Rather than chase ever-larger GPU clusters, LG is emphasizing efficiency and industry-specific variants. The team distributes models via APIs and uses real usage data to improve performance, creating a flywheel that prioritizes practical outcomes over headline parameter counts.

SK Telecom A.X and A. Agent: Korean-Tuned AI at Network Scale

SK Telecom’s A.X 4.0, derived from Alibaba Cloud’s open-source Qwen 2.5, ships in 72B and 7B versions and is tuned for Korean. The company reports roughly one-third better Korean input efficiency versus prior-generation global models. SKT has also open-sourced A.X 3.1 and is scaling its consumer-facing personal agent, branded “A.”, which offers multimodal features such as AI call summaries and notes and has a reported base of millions of subscribers. Its edge is distribution and data: the telco can draw on services across mobility, navigation, and customer support. It is also investing in GPUaaS and a new hyperscale AI data center with AWS, and it is pursuing partnerships with domestic chipmaker Rebellions and research collaborations such as MIT’s MGAIC program.
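A claim like “one-third better input efficiency” can be read two ways, and the difference matters for cost estimates. The sketch below assumes that “input efficiency” means the number of tokens needed to encode the same Korean text, which the reporting does not spell out. The token counts are made-up illustrative numbers, not measurements of A.X or any other model.

```python
def efficiency_gain(baseline_tokens: int, tuned_tokens: int) -> float:
    """Extra text carried per token by the tuned tokenizer, as a fraction.

    Example: 0.33 means each token carries about one-third more text.
    """
    if baseline_tokens <= 0 or tuned_tokens <= 0:
        raise ValueError("token counts must be positive")
    return baseline_tokens / tuned_tokens - 1.0


def token_savings(baseline_tokens: int, tuned_tokens: int) -> float:
    """Fraction of tokens saved for the same input text."""
    if baseline_tokens <= 0 or tuned_tokens <= 0:
        raise ValueError("token counts must be positive")
    return (baseline_tokens - tuned_tokens) / baseline_tokens


if __name__ == "__main__":
    # Hypothetical counts for one Korean document encoded by two tokenizers.
    baseline, tuned = 400, 300
    print(f"text per token: +{efficiency_gain(baseline, tuned):.1%}")
    print(f"tokens saved:   {token_savings(baseline, tuned):.1%}")
```

Under that assumption, a one-third gain in text per token corresponds to 25% fewer tokens for the same input, not 33%, so it is worth checking which figure a vendor is quoting before projecting savings.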

Naver HyperCLOVA X: End-to-End AI Full Stack

Naver built HyperCLOVA X from the ground up and runs the data centers, cloud, platforms, and consumer apps that monetize it. The company is infusing AI into core services—search, shopping, maps, and finance—while rolling out products like CLOVA X (chatbot), Cue (AI-enhanced search), and a multimodal reasoning model, HyperCLOVA X Think. For enterprises, Naver offers CLOVA Studio to build custom generative AI and vertical solutions such as CLOVA Carecall for elder care. Naver argues that recipe, sophistication, and capital discipline—not just model size—will set the bar for global competitiveness at comparable scales.

Upstage Solar Pro 2: Compact Model, Frontier Performance

Upstage, the only startup in the cohort, fields Solar Pro 2, a 31B-parameter model recognized by independent benchmarking as a frontier-class model. The company claims leading results on Korean-language benchmarks and targets performance exceeding global averages for Korean use cases. It is leaning into industry-specialized models in finance, law, and medicine, with an “AI-native” startup ecosystem approach focused on measurable business impact rather than leaderboard vanity.

NC AI: Gaming-Driven Data and Multimodal Potential

NC AI has not disclosed details of its model, but the company’s lineage in gaming suggests access to rich user behavior data, latency-sensitive workloads, and real-time content pipelines that could translate into differentiated multimodal and agent capabilities.

What Korea’s AI Push Means for Telcos and Enterprise IT

This wave blends sovereign control with practical deployment paths—from networks and clouds to vertical apps—shaping how Korean enterprises will source and run AI.

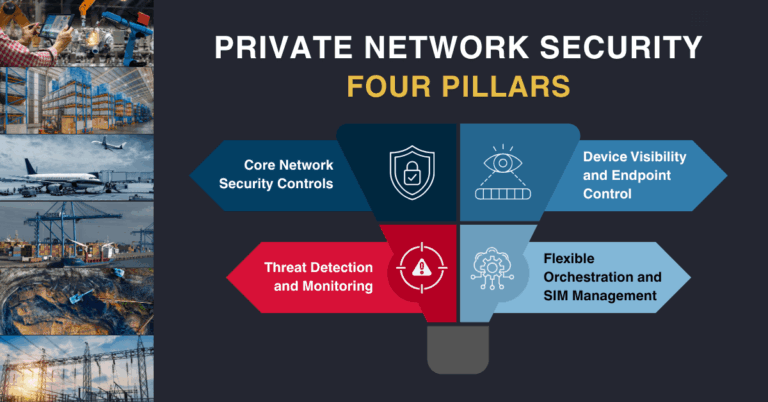

Telcos: Turn Distribution and First-Party Data into Defensible AI

SKT’s model shows how network operators can productize AI through customer care, mobility, and industrial services while leveraging first-party data responsibly. Owning the service layer, investing in GPUaaS, and aligning with chip partners can compress cost per inference and enable on-net AI experiences with stronger privacy guarantees.
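To make “cost per inference” concrete, here is a minimal back-of-the-envelope sketch. It assumes serving cost is dominated by GPU-hours and that throughput is roughly constant under load; the `GpuNode` fields and every number in the example are hypothetical, not figures from SKT or any provider.

```python
from dataclasses import dataclass


@dataclass
class GpuNode:
    hourly_cost: float          # cost of one GPU-hour, any currency (hypothetical)
    requests_per_second: float  # sustained throughput while serving
    utilization: float          # fraction of each hour spent serving, 0 < u <= 1


def cost_per_1k_requests(node: GpuNode) -> float:
    """Cost of serving 1,000 requests on this node."""
    if not 0 < node.utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    if node.requests_per_second <= 0:
        raise ValueError("throughput must be positive")
    served_per_hour = node.requests_per_second * 3600 * node.utilization
    return node.hourly_cost / served_per_hour * 1000


if __name__ == "__main__":
    # Same hardware and price, two utilization levels.
    half_busy = GpuNode(hourly_cost=2.0, requests_per_second=10, utilization=0.5)
    mostly_busy = GpuNode(hourly_cost=2.0, requests_per_second=10, utilization=0.8)
    print(f"50% utilized: {cost_per_1k_requests(half_busy):.4f} per 1k requests")
    print(f"80% utilized: {cost_per_1k_requests(mostly_busy):.4f} per 1k requests")
```

The point of the sketch is that utilization and throughput sit in the denominator: an operator that can keep owned or leased GPUs busy with first-party traffic pays less per request than one running the same hardware mostly idle.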

Enterprises: Korean-Language Accuracy and Compliance Gains

Korean-tuned models should reduce hallucinations on local content, improve retrieval quality, and support data residency requirements. Naver’s and LG’s industry data strategies can shorten deployment cycles for search, agents, and decision support across regulated sectors. Upstage’s smaller but strong models provide a cost-effective option for edge and private-cloud deployments.

Suppliers and Partners: A New AI Platform Market

There is room for GPU providers, AI chip startups, cloud operators, and systems integrators to plug into training and inference pipelines. Expect demand for secure data partnerships, RAG pipelines tied to legacy systems, and MLOps that meets enterprise compliance standards.

Execution Risks for Korea’s Sovereign AI

Scaling sovereign AI is less about a single breakthrough and more about disciplined execution across compute, data, and governance.

Compute Economics and Energy Constraints

Even with efficiency-focused models, training and inference costs can balloon. Partnerships like SKT’s data center work with hyperscalers are essential, but organizations must plan for energy, cooling, and capacity constraints and design for model efficiency from the start.

Data Access, Quality, and Permissions

Vertical advantage depends on legal access to high-quality, continuously refreshed data. Robust data governance, anonymization, and auditability are non-negotiable, especially for models fine-tuned on sensitive enterprise and public-sector datasets.

Talent, Evaluation, and AI Safety

Competition for model, systems, and safety engineers remains intense. Independent evaluations (e.g., third-party benchmarks and red-teaming) and clear incident response policies will be required to win enterprise trust and meet evolving regulatory expectations.

Open-Source Dependencies and Geopolitics

Building on open-source backbones accelerates time to market but introduces upstream risks and licensing complexity. Supply constraints in advanced chips and shifting export controls can affect roadmaps.

Next Steps for Telcos, CIOs, and Investors

Now is the time to pilot with local models, harden your data pipelines, and align AI roadmaps to real P&L outcomes.

Guidance for Network Operators

Productize agent-based services on first-party channels, pair them with telco-grade observability, and explore GPUaaS monetization. Prioritize Korean-tuned models for customer care, field operations, and mobility workflows where latency and privacy matter.

Guidance for CIOs and CTOs

Run bake-offs among HyperCLOVA X, Exaone, A.X, and Solar Pro 2 against your Korean corpora. Standardize on a retrieval layer, enforce data contracts, and optimize for total cost of ownership—especially inference and fine-tuning costs under realistic load.
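A bake-off can start as a very small harness. The sketch below assumes each candidate is wrapped as a plain function from prompt to answer and is scored by exact match on a labeled set drawn from your own corpora. The names `model_a` and `model_b` are stand-in stubs, not real vendor clients, and exact match is a deliberately simple metric that you would likely replace with task-specific scoring.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]   # prompt -> answer
Example = Tuple[str, str]      # (prompt, reference answer)


def exact_match(prediction: str, reference: str) -> bool:
    """Strict comparison after trimming surrounding whitespace."""
    return prediction.strip() == reference.strip()


def bake_off(models: Dict[str, Model], eval_set: List[Example]) -> List[Tuple[str, float]]:
    """Score every model on the same examples; return (name, accuracy), best first."""
    if not eval_set:
        raise ValueError("eval_set must not be empty")
    results = []
    for name, model in models.items():
        correct = sum(exact_match(model(prompt), ref) for prompt, ref in eval_set)
        results.append((name, correct / len(eval_set)))
    return sorted(results, key=lambda r: r[1], reverse=True)


if __name__ == "__main__":
    # Tiny illustrative set; a real one would come from your Korean corpora.
    eval_set = [("대한민국의 수도는?", "서울"), ("1 + 1 = ?", "2")]

    # Stub models standing in for real API clients (hypothetical).
    answers = dict(eval_set)
    models = {
        "model_a": lambda prompt: answers.get(prompt, ""),
        "model_b": lambda prompt: "서울",
    }
    for name, accuracy in bake_off(models, eval_set):
        print(f"{name}: {accuracy:.0%}")
```

Keeping the harness model-agnostic means the same evaluation set and scoring apply to every candidate, and latency or cost per request can be recorded alongside accuracy once real clients replace the stubs.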

Guidance for Vendors and Investors

Back ecosystems around evaluation, safety, and domain datasets. Focus on co-developing vertical agents with clear KPIs in finance, healthcare, manufacturing, and public sector. Efficiency, not just scale, will separate durable winners from the rest.