SoftBank Launches Telecom-Centric Generative AI Foundation Model

On March 19, 2025, SoftBank Corp. announced the development of a Large Telecom Model (LTM), a domain-specific generative AI foundation model built to enhance the design, management, and operation of cellular networks. Drawing on SoftBank’s years of operational expertise and extensive network data, LTM is intended to serve as the foundation for AI innovation across telecom operations.

Trained on diverse datasets, including internal operational data, expert network annotations, and management frameworks, the LTM offers inference capabilities tailored to telecom environments. SoftBank positions the model as a step toward AI-native network operations, enabling automation, optimization, and predictive intelligence across the full lifecycle of cellular network management.

AI-Driven Base Station Optimization with LTM

To demonstrate LTM’s practical applications, SoftBank fine-tuned the model to develop AI agents for base station configuration. These agents were tasked with generating optimized configurations for base stations that were not included in the training data. The results were validated by in-house telecom experts and showed over 90% accuracy.

This approach reduces the time needed for configuration tasks from days to minutes, with similar or improved accuracy. Compared to manual or partially automated methods, the LTM-based agents offer:

- Significant time and cost savings

- Reduction in human error

- Scalability across thousands of network nodes
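The announcement does not say how the "over 90% accuracy" figure was computed. One plausible reading, shown here purely as an assumption, is a parameter-level match rate between a generated configuration and an expert-validated reference. The function name, parameter names, and values below are hypothetical and are not taken from SoftBank's tooling.

```python
from typing import Mapping


def config_match_rate(generated: Mapping[str, object],
                      reference: Mapping[str, object]) -> float:
    """Fraction of reference parameters that the generated config reproduces exactly.

    Assumption: accuracy is scored per parameter by exact match. The source
    article does not describe the actual evaluation method.
    """
    if not reference:
        raise ValueError("reference configuration is empty")
    matches = sum(
        1 for key, value in reference.items()
        if key in generated and generated[key] == value
    )
    return matches / len(reference)


# Hypothetical parameter names and values, for illustration only.
expert_config = {
    "tx_power_dbm": 43,
    "antenna_tilt_deg": 6,
    "handover_offset_db": 3,
    "carrier_band": "n77",
}
model_config = {
    "tx_power_dbm": 43,
    "antenna_tilt_deg": 6,
    "handover_offset_db": 2,  # differs from the expert value
    "carrier_band": "n77",
}

rate = config_match_rate(model_config, expert_config)
print(f"match rate: {rate:.2f}")
```

Averaging this rate over held-out base stations would give a single accuracy number, although a real evaluation might weight parameters differently or tolerate small numeric deviations.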

The fine-tuned LTM models support two primary use cases:

1. New Base Station Deployment

In dense urban areas like Tokyo, the model is used to generate optimal configurations for new base stations. It receives input such as the deployment location, existing nearby infrastructure, and network performance metrics, and outputs a set of recommended configurations tailored to maximize performance and coverage.

2. Existing Base Station Reconfiguration

In scenarios like large events that temporarily increase mobile traffic, the model is used to dynamically adjust base station settings. It recommends real-time configuration changes to handle the surge in demand and maintain quality of service.
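The inputs described for both use cases (deployment location, nearby infrastructure, and network performance metrics) could be packaged into a structured request before being handed to a fine-tuned model. The sketch below is one way to do that under stated assumptions: the class names, field names, prompt wording, and sample values are all hypothetical, and the actual LTM input format is not described in the announcement.

```python
import json
from dataclasses import dataclass, field

# Hypothetical labels for the two use cases described above.
USE_CASES = {"new_deployment", "reconfiguration"}


@dataclass
class NeighborSite:
    site_id: str
    distance_m: float


@dataclass
class ConfigRequest:
    use_case: str
    latitude: float
    longitude: float
    neighbors: list[NeighborSite] = field(default_factory=list)
    metrics: dict[str, float] = field(default_factory=dict)


def build_prompt(req: ConfigRequest) -> str:
    """Serialize a request into an instruction line plus a JSON payload."""
    if req.use_case not in USE_CASES:
        raise ValueError(f"unknown use case: {req.use_case!r}")
    payload = {
        "use_case": req.use_case,
        "location": {"lat": req.latitude, "lon": req.longitude},
        "neighbors": [
            {"site_id": n.site_id, "distance_m": n.distance_m}
            for n in req.neighbors
        ],
        "metrics": req.metrics,
    }
    instruction = "Recommend a base station configuration for this site."
    return instruction + "\n" + json.dumps(payload, indent=2, sort_keys=True)


# Illustrative values only; not real site data.
request = ConfigRequest(
    use_case="new_deployment",
    latitude=35.6595,
    longitude=139.7005,
    neighbors=[NeighborSite("site-A", 420.0)],
    metrics={"prb_utilization": 0.82},
)
prompt = build_prompt(request)
print(prompt)
```

Keeping the payload as sorted JSON makes requests reproducible and easy to log, which helps when experts later review what the model was asked.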

LTM as the Foundation for “AI for RAN” and Future AI Agents

LTM is not just a standalone model—it is also the foundational layer for SoftBank’s broader “AI for RAN” initiative, which focuses on using AI to enhance Radio Access Network (RAN) performance. Through continued fine-tuning, LTM will enable the creation of domain-specific AI agents capable of:

- Automated network design

- Adaptive resource allocation

- Predictive maintenance

- Performance optimization across the RAN

These AI agents are designed to be modular and context-aware, making them easier to deploy across different scenarios and geographies.

Collaboration with NVIDIA for LTM Performance Gains and Flexibility

To maximize LTM’s performance, SoftBank partnered with NVIDIA. Training and optimization of LTM were carried out using the NVIDIA DGX SuperPOD, a high-performance AI infrastructure used for distributed model training.

For inference, SoftBank adopted NVIDIA NIM (NVIDIA Inference Microservices), which yielded:

- A 5x improvement in Time to First Token (TTFT), meaning the first output token arrives in roughly one fifth of the time

- A 5x increase in Tokens Per Second (TPS)

NVIDIA NIM also supports flexible deployment—whether on-premises or in the cloud—offering SoftBank the agility needed for enterprise-scale rollouts.
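For readers unfamiliar with the two inference metrics cited above, the following minimal sketch shows how TTFT and TPS can be derived from token arrival timestamps. It assumes TPS is measured as total tokens divided by total elapsed time from request to last token; other definitions exclude the time before the first token. The timestamps are invented to illustrate a 5x change and are not SoftBank's measurements.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StreamMetrics:
    ttft_s: float        # seconds from request to first token
    tokens_per_s: float  # tokens divided by total elapsed seconds


def stream_metrics(request_time: float,
                   token_times: Sequence[float]) -> StreamMetrics:
    """Compute TTFT and TPS from the arrival time of each streamed token."""
    if not token_times:
        raise ValueError("no tokens were generated")
    ttft = token_times[0] - request_time
    elapsed = token_times[-1] - request_time
    if elapsed <= 0:
        raise ValueError("token timestamps must be after the request time")
    return StreamMetrics(ttft_s=ttft, tokens_per_s=len(token_times) / elapsed)


# Invented timestamps (seconds) for a four-token response.
baseline = stream_metrics(0.0, [1.0, 1.5, 2.0, 2.5])
optimized = stream_metrics(0.0, [0.2, 0.3, 0.4, 0.5])

ttft_speedup = baseline.ttft_s / optimized.ttft_s
tps_gain = optimized.tokens_per_s / baseline.tokens_per_s
print(f"TTFT improved {ttft_speedup:.1f}x, TPS increased {tps_gain:.1f}x")
```

Note that the two metrics move in opposite directions when performance improves: TTFT goes down while TPS goes up.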

SoftBank also plans to use NVIDIA’s Aerial Omniverse Digital Twin (AODT) to simulate and validate configuration changes before they’re applied, adding another layer of safety and optimization to the process.

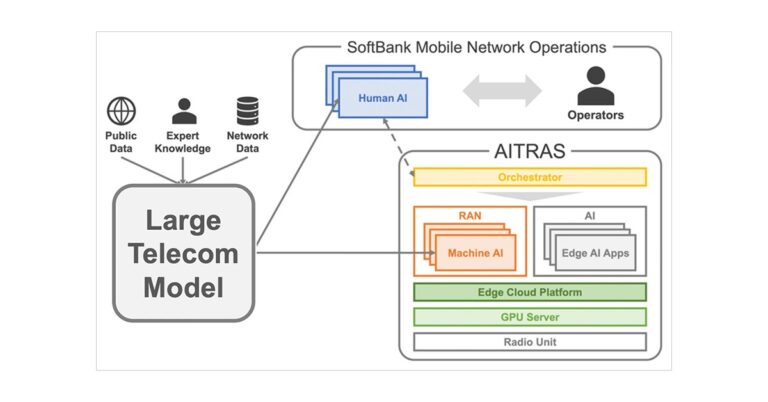

“Human AI” Vision Realized Through LTM

The LTM is an embodiment of SoftBank’s “Human AI” concept, as proposed by its Research Institute of Advanced Technology (RIAT). This vision complements “Machine AI” and emphasizes the integration of human expertise with AI to streamline operations and decision-making in mobile networks.

LTM is designed not just as a model but as a knowledge system, reflecting the insights of SoftBank’s top network specialists. By integrating LTM-based models with AITRAS—SoftBank’s AI-RAN orchestrator—the company aims to build a unified AI framework for operating virtualized RAN and AI systems on the same infrastructure.

AITRAS Integration and Future Roadmap

AITRAS is central to SoftBank’s strategy for converged AI and RAN operations. LTM-powered models will eventually feed into it, enabling intelligent orchestration of both virtualized and AI-native workloads on a unified platform.

This integration is a key part of SoftBank’s plan to build autonomous and self-optimizing networks that can:

- React to real-time events

- Predict and mitigate performance issues

- Continuously evolve based on AI-driven insights

As SoftBank continues development of AITRAS, LTM will serve as its cognitive engine, providing operational intelligence across all layers of the network.

Global Collaboration Fuels LTM’s Telecom AI Expansion

The development of LTM was led by the SoftBank RIAT Silicon Valley Office in collaboration with its Japan-based R&D team. Looking ahead, SoftBank plans to strengthen its global partnerships to scale the adoption of LTM across international markets and contribute to the advancement of next-generation telecom networks.

SoftBank also envisions using LTM to enable new services, enhance operational agility, and deliver superior mobile experiences to its customers.

Industry Experts Weigh In on LTM’s Impact in Telecom

Ryuji Wakikawa, Vice President and Head of the Research Institute of Advanced Technology at SoftBank, said: “SoftBank’s AI platform model, the ‘Large Telecom Model’ (LTM), significantly transforms how we design, build, and operate communication networks. By fine-tuning LTM, we can create AI agents for specific tasks, improving wireless device performance and automating network operations. We will continue to drive innovation in AI to deliver higher-quality communication services.”

Chris Penrose, Vice President of Telecoms at NVIDIA, added: “Large Telecom Models are foundational to simplifying and accelerating network operations. SoftBank’s rapid progress in building its LTM using NVIDIA technologies sets a strong example for how AI can redefine telecom operations globally.”

LTM Sets a New Standard for AI-Powered Telecom Infrastructure

With the introduction of its Large Telecom Model, SoftBank has laid the foundation for a next-generation, AI-powered telecom infrastructure. LTM not only enhances operational efficiency but also unlocks new possibilities for intelligent automation, predictive optimization, and scalable AI agent deployment.

As SoftBank continues to refine and expand this model—alongside its work on AITRAS and “Human AI”—it is positioning itself as a leader in the future of AI-native mobile networks.