SoftBank’s $30B OpenAI investment: timing, terms, and trigger

SoftBank has reportedly approved the final $22.5 billion tranche of a planned $30 billion commitment to OpenAI, tied to the AI firm’s shift to a conventional for‑profit structure and a path to IPO.

Deal terms, restructuring, and IPO catalyst

The investment completes a $41 billion financing round for OpenAI that began in April, one of the largest private capital raises in tech history. SoftBank’s initial tranche was reported at $10 billion, of which its own share was $7.5 billion (the $30 billion commitment less the $22.5 billion final tranche), with the balance syndicated to co‑investors. The remaining capital was contingent on OpenAI completing a restructuring that unwinds its capped‑profit model and consolidates governance ahead of public markets. Had that restructuring stalled, SoftBank’s total commitment was set to shrink, which underscores how tightly the capital is linked to OpenAI’s governance clarity and liquidity roadmap.

Investor landscape, strategic partners, and compute ecosystem

Microsoft remains OpenAI’s most consequential strategic partner, chiefly through Azure, while financial backers such as Thrive Capital stand to benefit from an eventual public listing. On the technology side, OpenAI has been deepening ties across the compute supply chain, including work with GPU and accelerator vendors such as NVIDIA and AMD, and has explored new device form factors and hardware concepts with consumer product designers. The fresh capital is expected to fund enterprise AI expansion, infrastructure scaling, and next‑generation model development beyond GPT‑5.

SoftBank’s AI strategy and post‑Vision Fund reset

For SoftBank, this move signals a deliberate reentry to the tech vanguard after Vision Fund setbacks. The group has already benefited from Arm’s successful public listing and is leaning into AI as a defining growth pillar. A major position in OpenAI could diversify SoftBank’s AI footprint across cloud, silicon, and potentially devices—while giving it a meaningful voice alongside Microsoft as OpenAI’s ownership and control evolve.

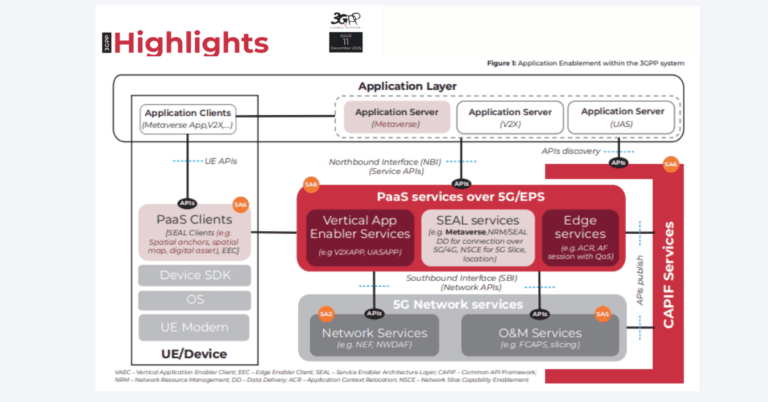

How the OpenAI raise impacts telecom, 5G, and enterprise IT

This funding and restructuring signal faster enterprise AI adoption, heavier infrastructure demand, and new platform dynamics that will ripple across networks, cloud, and edge.

Network capacity and data center buildout accelerate

A war chest of this size will intensify spending on training and inference infrastructure: high‑density GPU clusters, optical transport, 400G/800G Ethernet upgrades, and DC interconnects. For carriers and neutral hosts, expect increased demand for dark fiber, metro backhaul, and AI‑adjacent colocation as hyperscalers and AI labs chase capacity. Power and cooling become gating factors, which elevates the role of energy‑aware routing, liquid cooling retrofits, and siting strategies near renewables.

Enterprise AI platform shift and vendor selection

OpenAI is pushing deeper into enterprise tools, security features, and domain‑specific assistants, which puts it in more direct competition with both hyperscaler‑native stacks and open‑source model ecosystems. CIOs will weigh latency, data residency, and compliance against developer velocity and model capabilities. Expect tighter integration with identity, observability, and data governance platforms, and more hybrid patterns that place inference on‑prem, at the telco edge, or in the cloud according to data sensitivity and latency needs.
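To make the hybrid pattern concrete, here is a minimal Python sketch of a placement policy that checks compliance constraints before latency. The tier names, the 50 ms edge threshold, the approved region list, and the rule that regulated data stays on‑prem are all illustrative assumptions, not any vendor’s actual policy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Tier(Enum):
    """Deployment tiers named in the text; the labels are illustrative."""
    ON_PREM = "on-prem"
    TELCO_EDGE = "telco-edge"
    CLOUD = "cloud"


@dataclass(frozen=True)
class InferenceRequest:
    contains_regulated_data: bool    # e.g. personal or health data under sector rules
    residency_region: Optional[str]  # region the data must stay in, if any
    latency_budget_ms: float         # end-to-end latency the caller can tolerate


# Hypothetical policy inputs; real values come from measurement and compliance review.
EDGE_LATENCY_THRESHOLD_MS = 50.0
APPROVED_CLOUD_REGIONS = {"eu-west", "us-east"}


def place(req: InferenceRequest) -> Tier:
    """Choose a tier: compliance constraints first, then latency, else cloud."""
    # Regulated data stays inside the enterprise boundary in this sketch.
    if req.contains_regulated_data:
        return Tier.ON_PREM
    # A residency requirement that no approved cloud region satisfies also stays local.
    if req.residency_region is not None and req.residency_region not in APPROVED_CLOUD_REGIONS:
        return Tier.ON_PREM
    # Tight latency budgets go to the operator edge (assumed to be in-region).
    if req.latency_budget_ms < EDGE_LATENCY_THRESHOLD_MS:
        return Tier.TELCO_EDGE
    # Everything else runs in the cloud, where frontier models and elastic capacity live.
    return Tier.CLOUD


if __name__ == "__main__":
    for r in [
        InferenceRequest(True, None, 500.0),
        InferenceRequest(False, "eu-west", 20.0),
        InferenceRequest(False, "eu-west", 800.0),
    ]:
        print(r, "->", place(r).value)
```

Ordering the checks this way means a fast‑path latency rule can never override a compliance rule; a real policy engine would typically load these rules from governed configuration rather than hard‑code them.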

Edge and on‑device AI opportunities

SoftBank’s Arm stake and OpenAI’s hardware exploration hint at scenarios where on‑device and near‑edge AI become mainstream, reducing latency and cloud egress costs for use cases such as customer service, field operations, and immersive apps. For operators, this plays to multi‑access edge computing (MEC) footprints that can be monetized alongside private 5G: inference runs close to sensors and endpoints, while training remains in core clouds.

Risks, constraints, and open questions for AI scale

The upside is significant, but execution risk spans governance, competition, supply chains, and policy.

Governance, structure, and regulatory scrutiny

Transitioning from a capped‑profit model to a standard for‑profit entity will be closely watched by regulators and enterprise buyers. Oversight, model transparency, and safety commitments will be stress‑tested under the EU AI Act, emerging US frameworks, and procurement policies in regulated sectors. Expect customers to demand clearer SLAs, auditing hooks, and data handling assurances before scaling sensitive workloads.

Competitive dynamics and model economics in AI

OpenAI faces well‑funded challengers across the spectrum—incumbent platforms, independent labs, and open‑source communities. Price‑performance pressure in inference, rapid release cycles, and fine‑tuned vertical models will keep margins tight. Enterprises will hedge with multi‑model strategies to avoid lock‑in, forcing providers to differentiate on control, safety, quality, and TCO rather than raw capability alone.
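In engineering terms, a multi‑model hedge often comes down to a thin abstraction with ordered fallback. The sketch below assumes a hypothetical provider interface (a plain function from prompt to text) and uses stub providers in place of real services; it does not reflect any real SDK’s API.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical provider interface: any callable mapping a prompt to text.
# A real SDK client would be wrapped to fit this signature.
Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the list has failed."""


def complete_with_fallback(prompt: str, providers: Sequence[Tuple[str, Provider]]) -> Tuple[str, str]:
    """Try providers in priority order; return (name, output) from the first that succeeds."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # production code would catch provider-specific errors
            errors.append(f"{name}: {exc!r}")
    raise AllProvidersFailed("; ".join(errors))


# Stub providers standing in for a frontier API and a self-hosted open model.
def frontier_stub(prompt: str) -> str:
    raise TimeoutError("simulated rate limit")


def open_model_stub(prompt: str) -> str:
    return f"[open-model] {prompt.upper()}"


if __name__ == "__main__":
    name, text = complete_with_fallback(
        "summarize ticket 123",
        [("frontier", frontier_stub), ("open", open_model_stub)],
    )
    print(name, text)
```

Keeping the interface this narrow is what makes providers swappable; the wrapper layer is also a natural place to attach per‑provider cost tracking, logging, and safety filters.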

Supply chain bottlenecks and energy constraints

Capacity remains bounded by advanced accelerators, packaging, and memory supply. While NVIDIA leads, alternatives from AMD and specialized silicon are gaining traction, and Arm‑based CPU platforms are expanding in AI‑adjacent workloads. Power availability and sustainability targets will shape data center buildouts, making energy contracting and grid partnerships as strategic as GPU procurement.

What telecom and enterprise leaders should do now

Telecom and enterprise decision‑makers should use this moment to harden AI strategies, procurement, and infrastructure plans ahead of another investment-driven adoption wave.

Actions for telecom operators and vendors

AI traffic is expected to grow faster than consumer data traffic, so plan for AI‑heavy east‑west flows with 400G/800G roadmaps, optical upgrades, and converged IP/optical designs. Stand up AI‑ready MEC sites near enterprise campuses to support low‑latency inference over private 5G. Build reference architectures that integrate OpenAI services via Azure with carrier‑grade identity, policy, and billing. Prioritize observability and data governance in line with sector regulations to make AI offerings procurement‑ready.
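The case for an 800G roadmap can be reasoned about with simple link arithmetic. This sketch assumes a 50% target link utilization and a compound annual traffic growth rate; both are placeholder planning parameters, and real designs would also account for topology, oversubscription, and redundancy.

```python
import math
from typing import Dict, List, Tuple


def ports_needed(peak_gbps: float, port_speed_gbps: int, target_utilization: float = 0.5) -> int:
    """Links required so peak traffic stays at or below the target per-link utilization."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    return math.ceil(peak_gbps / (port_speed_gbps * target_utilization))


def port_plan(peak_gbps_now: float, annual_growth: float, years: int,
              speeds: Tuple[int, ...] = (400, 800)) -> List[Dict[int, int]]:
    """Port counts per link speed for each year, assuming compound traffic growth."""
    plan = []
    for year in range(years + 1):
        demand = peak_gbps_now * (1 + annual_growth) ** year
        plan.append({speed: ports_needed(demand, speed) for speed in speeds})
    return plan


if __name__ == "__main__":
    # Placeholder inputs: 10 Tb/s of peak east-west traffic growing 50% per year.
    for year, counts in enumerate(port_plan(10_000, 0.5, 3)):
        print(f"year {year}: {counts}")
```

Doubling the per‑port speed roughly halves the port count for the same demand, which is why optics and switch refresh timing matter as much as raw capacity.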

Actions for enterprises and CTOs

Adopt a portfolio approach: mix frontier models with domain‑tuned open alternatives to manage risk and cost. Negotiate contracts for data control, content provenance, uptime SLAs, and model upgrade paths. Model TCO across cloud, colocation, and edge, factoring in power, cooling, and egress. Pilot AI co‑pilots in functions with measurable ROI—customer operations, developer productivity, and knowledge management—while establishing a safety and compliance baseline.
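A first‑pass TCO comparison across cloud, colocation, and edge can be a few lines of arithmetic. This sketch folds cooling into energy cost via PUE (power usage effectiveness, total facility power divided by IT power) and treats power as bundled into cloud pricing; the class and field names are hypothetical, and every number in OPTIONS is a placeholder, not a market price.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


@dataclass(frozen=True)
class DeploymentOption:
    name: str
    fixed_monthly_usd: float   # amortized hardware, colo/rack fees, or cloud instance charges
    it_load_kw: float          # average IT power draw; 0 when power is bundled into the price
    pue: float                 # power usage effectiveness: total facility power / IT power
    usd_per_kwh: float
    egress_tb_per_month: float
    egress_usd_per_tb: float

    def monthly_tco(self) -> float:
        # PUE scales IT energy up to facility energy, so cooling overhead is included.
        energy = self.it_load_kw * self.pue * HOURS_PER_MONTH * self.usd_per_kwh
        egress = self.egress_tb_per_month * self.egress_usd_per_tb
        return self.fixed_monthly_usd + energy + egress


# Placeholder inputs for one inference workload; substitute real quotes and meter data.
OPTIONS = [
    DeploymentOption("cloud", 45_000, 0.0, 1.0, 0.0, 40.0, 90.0),
    DeploymentOption("colocation", 30_000, 40.0, 1.5, 0.125, 40.0, 10.0),
    DeploymentOption("telco edge", 34_000, 40.0, 1.5, 0.125, 5.0, 10.0),
]

if __name__ == "__main__":
    for opt in sorted(OPTIONS, key=lambda o: o.monthly_tco()):
        print(f"{opt.name:12s} ${opt.monthly_tco():>10,.2f}/month")
```

With these placeholder inputs colocation ranks cheapest, but the ranking can change with utilization, egress volume, and hardware amortization period, which is why the inputs matter more than the formula.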

Milestones and signals to track

Key indicators include OpenAI’s restructuring milestones, any S‑1 filing signals, new strategic partnerships in silicon and cloud, enterprise feature roadmaps, model pricing changes, and disclosures around next‑gen models like GPT‑6. SoftBank’s posture post‑investment—especially alignment with Arm and prospective device efforts—will also signal how far the stack could extend from cloud to edge.