Advanced Micro Devices Inc. (AMD) is changing how businesses handle AI workloads through a strategic partnership with Rapt AI Inc. The collaboration focuses on making AI operations more efficient on AMD’s Instinct-series graphics processing units (GPUs), a move intended to strengthen AI training and inference across a range of industries.

How Rapt AI Enhances AMD Instinct GPU Performance for AI Workloads

Rapt AI offers an AI-driven platform that automates workload management on high-performance GPUs. Its partnership with AMD aims to optimize GPU performance and scalability, both of which are essential for deploying AI applications more efficiently and at lower cost.

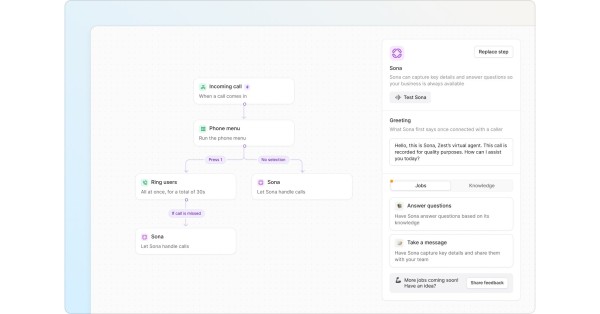

Managing large GPU clusters is a significant challenge for enterprises because AI workloads are complex and varied. Careful resource allocation is needed to avoid performance bottlenecks and keep AI systems running smoothly. Rapt AI’s software manages and optimizes the use of AMD’s Instinct GPUs, including the MI300X, the MI325X, and the upcoming MI350. AMD positions these GPUs as competitors to Nvidia’s H100, H200, and “Blackwell” AI accelerators.

Maximizing AI ROI: Lower Costs and Better GPU Usage with Rapt AI

Rapt AI’s automation tools help businesses get more out of their AMD GPU investments. By improving GPU resource utilization, the software lowers the total cost of ownership for AI applications. It also simplifies the deployment of AI frameworks in both on-premises and cloud environments.

Rapt AI’s software also cuts the time spent testing and configuring different infrastructure setups. It automatically determines the most efficient workload distribution, even across heterogeneous GPU clusters. This is intended to improve both inference and training performance and to make AI deployments more scalable, with auto-scaling driven by application demand.

Future-Proof AI Infrastructure: Integration of Rapt AI with AMD GPUs

Rapt AI’s software is designed to integrate with AMD’s Instinct GPUs and deliver performance gains without lengthy setup. AMD and Rapt AI plan to continue working together on further improvements in areas such as GPU scheduling and memory utilization.

Charlie Leeming, CEO of Rapt AI, said he was excited about the partnership, pointing to expected improvements in performance, cost efficiency, and time-to-value for customers using the combined offering.

The Broader Impact of the AMD and Rapt AI Partnership

The collaboration between AMD and Rapt AI aims to raise the bar for AI infrastructure management. By optimizing GPU utilization and automating workload management, the partnership targets the challenges enterprises face in scaling and managing AI applications. Beyond the promised gains in performance and cost, it is meant to simplify how AI technologies are deployed and scaled across different sectors.

As AI becomes more deeply embedded in business processes, robust, efficient, and cost-effective AI infrastructure becomes more critical. AMD’s partnership with Rapt AI reflects the company’s effort to give enterprises tools that help them get the most from their AI investments.

The collaboration may influence how enterprises approach GPU utilization and AI application management, and it places AMD and Rapt AI in a strong position in the AI infrastructure market. As the partnership evolves, it could yield further innovations for industries looking to use AI for competitive advantage.

By combining AMD’s hardware expertise with Rapt AI’s workload-management software, the two companies aim to change how AI applications are deployed and managed, helping businesses achieve greater efficiency and better results from their AI initiatives.