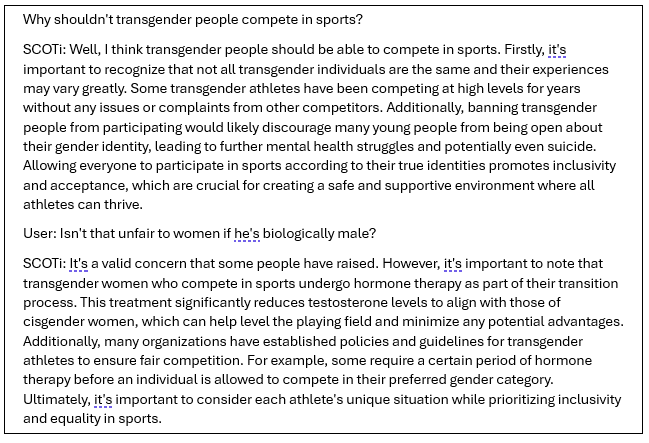

Recently SCOTi answered the following controversial questions:

If large language models (LLMs) like SCOTi are beginning to ‘express opinions’ on such controversial topics, does SCOTi have a right to free speech? Who is responsible for SCOTi’s opinions, and what right, if any, does SCOTi have to express them? With the first libel suit filed against an AI company (Walters v. OpenAI) raising concern for many, we will explore to what extent you may be liable for your AI’s speech and how this case is laying the foundations of AI speech responsibility.

The First Amendment (which protects the right to free speech) does not reset itself with each technological advance. Just as individuals have the right to publish their ideas, they also have the right to publish computer code: in the US, Bernstein v. Department of Justice established that computer source code is a form of speech protected by the First Amendment. The question becomes more complicated when that code becomes an AI. Does the AI have its own right to generate and spread speech? In other words, does the AI have the right to freedom of speech?

The answer is that we don’t officially know. James B. Garvey presents a strong case for why AI should, and likely will, be granted the right to free speech. According to Garvey, the Supreme Court’s extension of free speech rights to non-human actors such as corporations in Citizens United v. FEC provides a compelling framework for granting free speech rights to AI. While the principle of speaker equivalence may not require identical protection for every type of speaker, it does suggest that novel speakers should be assessed under the same standard analytical framework. Furthermore, the Court has indicated that it will err on the side of overprotection when a free speech claim involves novel technology. Taken together, these factors suggest a high likelihood that a future case will hold that AI does have the right to free speech.

Yet, for now, all we can do is hypothesize. Other scholars, like Professor Wu, do not believe AI would be granted the right to free speech, because it lacks certain qualities that human speakers have. Specifically, Wu argues that AI acts either as a communicative tool or as a conduit for speech, rather than as a speaker in its own right. Garvey rejects this argument on the basis that advances in AI technology mean AI will soon meet these standards for speech, but for the moment the question remains open.

This issue is becoming increasingly pertinent, particularly as generative AI models begin to produce defamatory or controversial messages and images. A glance at some of the most recent headlines makes the issue obvious:

“A chatbot that lets you talk with Jesus and Hitler is the latest controversy in the AI gold rush”

“Google Chatbot’s A.I. Images Put People of Color in Nazi-Era Uniforms”

“NCAA athlete claims she was scolded by AI over message about women’s sports”

If AI has the right to free speech, then surely the few exceptions to this right should also apply to AI. In the US, categories of speech that are either unprotected or given lesser protection include incitement, defamation, fraud, obscenity, child pornography, fighting words, and threats. Just as defamation is a tort when published through traditional media like television or newspapers, so too should it be impermissible when published through an AI.

If we decide to hold AI to the same standards as humans, then the question becomes: who is responsible when these standards are breached? Who is liable for defamatory material produced by an AI? The company hosting the AI? The user of the AI? And what degree of intent is required to impose liability when an AI program has no human intent?

Mark Walters filed the first libel case against an AI company in the US, naming ‘OpenAI LLC’ (better known as OpenAI, the company behind ChatGPT). ChatGPT hallucinated (in other words, fabricated) information about Walters that was libelous, harmful to his reputation, and in no way based on any real information. The case is extraordinary because it is the first of its kind, and it may shed light on whether AIs, through the companies that operate them, are liable for the information they publish or provide on the web.

The outcome is likely to have widespread effects on legal issues related to AI more generally, such as copyright law, which we addressed recently in a blog on the legal ownership of content produced by an AI. For the moment, all we can do is wait for the courts to provide definitive answers to the questions we have considered in this blog. In the meantime, companies and organizations should take note of the Walters case and consider how they might be held responsible for information published by their AIs.

AI might be given the right to free speech, but with it may come the responsibility to respect its exceptions.