ChatGPT Pulse: Proactive AI Briefings and the Shift to Agentic Assistants

OpenAI introduced ChatGPT Pulse, a new capability that assembles personalized morning briefs and agendas without a prompt, indicating a clear shift from reactive chat to proactive, task-oriented assistance.

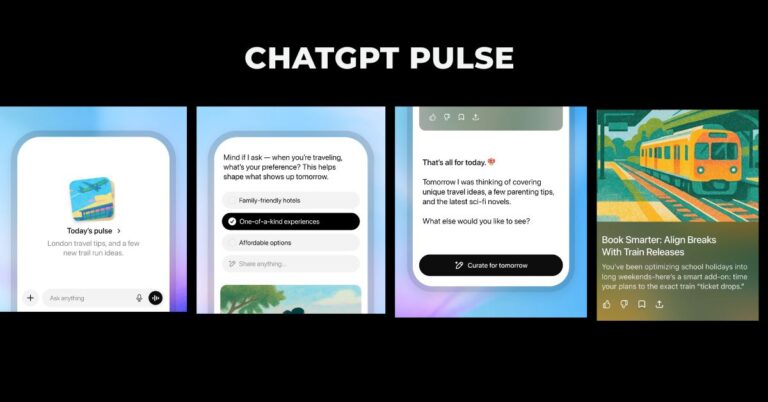

How ChatGPT Pulse Generates and Personalizes Morning Briefs

Pulse generates five to ten concise reports while you sleep, then packages them as interactive cards inside ChatGPT. Each card contains an AI-generated summary with source links, and users can drill down, ask follow-up questions, or request new briefs. Beyond public web content, Pulse can tap ChatGPT Connectors, such as Gmail and Google Calendar, to highlight priority emails, synthesize threads, and build agendas from upcoming events. If ChatGPT memory is enabled, Pulse weaves in user preferences and past context to tailor briefs (for example, dietary constraints or travel habits). A notable design choice: Pulse deliberately caps the number of reports and signals “done” to avoid the endless feed dynamic of engagement-first apps.
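The capped, finite briefing described above can be sketched in a few lines. This is only an illustration of the design idea, not OpenAI's implementation: the `Card` fields, the `priority` score, and the `build_briefing` function are all hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Card:
    title: str
    summary: str
    sources: list[str]
    priority: float  # hypothetical relevance score, higher is more important


def build_briefing(candidates: list[Card], max_cards: int = 10) -> dict:
    """Return a finite briefing: the top cards plus an explicit end marker."""
    ranked = sorted(candidates, key=lambda c: c.priority, reverse=True)
    cards = ranked[:max_cards]
    return {
        "cards": cards,
        "done": True,  # the briefing is complete; there is no next page
        "omitted": len(ranked) - len(cards),
    }


# Hypothetical input: 25 candidate reports, of which only the top 10 are shown.
candidates = [
    Card(f"Topic {i}", "summary text", ["https://example.com"], priority=i)
    for i in range(25)
]
briefing = build_briefing(candidates)
```

The point of the sketch is that the response carries a hard cap and an explicit completion flag, rather than a cursor for fetching more items.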

Availability, Pro Pricing, and Tiered Rollout

Pulse launches first for the $200-per-month Pro tier and appears as a dedicated tab in the ChatGPT app. OpenAI has telegraphed that Pulse is compute-intensive and will reach broader tiers only after efficiency improves, with Plus access planned but not dated. The launch aligns with OpenAI’s ongoing capacity buildout, including data center partnerships with Oracle and SoftBank, to address server scarcity for inference-heavy products.

From Summaries to Actions: The Road to Agentic AI

Today’s Pulse focuses on synthesis and planning. OpenAI’s roadmap points to more agentic behavior, such as drafting messages for approval or placing restaurant reservations. That progression will depend on model reliability, grounded retrieval, and robust guardrails, all of which must be in place before enterprises entrust autonomous actions to an assistant at scale.

Impact on Telecom, CSPs, and Enterprise IT Strategy

Proactive assistants that work overnight change user behavior, reshape infrastructure demand, and open new service models for operators, device makers, and IT leaders.

From Reactive Chat to Continuous, Asynchronous Assistance

Pulse operationalizes an asynchronous usage pattern: the model does work while the user is offline and presents a small set of prioritized outputs at the start of the day. For knowledge workers, that reframes AI from an on-demand tool to a persistent aide. For telecoms and communications service providers (CSPs), this mirrors a broader trend toward ambient intelligence embedded in devices, productivity suites, and customer portals, where the value is in timely, context-aware actions, not just answers.

Carrier Opportunities: Low-Latency, Edge Prefetching, and QoS for AI

If morning briefs become a daily habit, expect predictable spikes in concurrent inference demand and notification-driven engagement as users open their briefs and ask follow-up questions. That creates opportunities for operators to differentiate around low-latency delivery, smart prefetching at the edge, and QoS for AI workloads. Partnerships with hyperscalers and infrastructure providers, echoing OpenAI’s alignment with Oracle and SoftBank, will be pivotal for balancing cost, performance, and data residency. Operators can also explore branded “business morning briefs” that synthesize network analytics, customer trouble tickets, and field ops updates for enterprise accounts.

Enterprise Briefings: Inbox Triage, ITSM, and NOC/SOC Overnight Summaries

On the enterprise side, Pulse-like workflows are ripe for internal briefings: overnight summarization of incidents from NOC and SOC tools, change windows from ITSM systems, and executive-ready status digests. Using connectors and fine-grained permissions, enterprises can combine email, calendar, ticketing, and observability data into role-specific briefs. The immediate value is time saved at the start of the day; the strategic value is a foundation for agentic follow-through—drafting responses, opening tickets, or scheduling reviews—with human approval in the loop.
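A role-specific brief of this kind amounts to a permission filter followed by a ranking step. The sketch below assumes a simple in-memory model. The `Item` type, the `ROLE_SCOPES` mapping, the role names, and the severity scale are all hypothetical and stand in for whatever connector and permission system an enterprise actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    source: str    # e.g. "email", "calendar", "itsm", "observability"
    text: str
    severity: int  # hypothetical scale: 1 (low) to 5 (critical)


# Hypothetical mapping of roles to the data sources each role may read.
ROLE_SCOPES = {
    "noc_engineer": {"itsm", "observability"},
    "executive": {"calendar", "itsm"},
}


def role_brief(role: str, items: list[Item], limit: int = 5) -> list[Item]:
    """Keep only items the role is permitted to see, most severe first."""
    allowed = ROLE_SCOPES.get(role, set())  # unknown roles see nothing
    visible = [item for item in items if item.source in allowed]
    return sorted(visible, key=lambda item: item.severity, reverse=True)[:limit]


overnight = [
    Item("observability", "Packet loss on core router", 5),
    Item("email", "Vendor contract thread", 2),
    Item("itsm", "Change window approved for 02:00", 3),
    Item("calendar", "Quarterly review at 09:00", 1),
]
noc_brief = role_brief("noc_engineer", overnight)
exec_brief = role_brief("executive", overnight)
```

Filtering before summarization, rather than after, keeps data a role cannot read out of the model's context entirely.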

Constraints: Compute, Governance, Quality, and Ecosystem Impacts

Early adopters should weigh compute economics, governance, and trust as they evaluate proactive AI briefings.

Compute Economics and Per-User Cost Optimization

OpenAI’s decision to gate Pulse behind the Pro plan reflects the real cost of running multi-step retrieval and synthesis at scale. For CIOs and CTOs, the question is unit economics: cost per user per day, blended across simple summaries and deep-dive syntheses. Expect vendor emphasis on distillation, caching, and retrieval optimization to shrink per-brief costs over time.
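The unit-economics question can be made concrete with a small blended-cost calculation. Every figure below is a hypothetical placeholder chosen for illustration, not OpenAI pricing or a measured cost, and `blended_cost_per_user_day` is a name introduced here.

```python
def blended_cost_per_user_day(mix: dict[str, tuple[int, float]]) -> float:
    """Sum cost over brief types.

    mix maps a brief type to (briefs per user per day, cost per brief in USD).
    """
    return sum(count * unit_cost for count, unit_cost in mix.values())


# Hypothetical placeholder figures, for illustration only.
mix = {
    "simple_summary": (6, 0.02),  # cheap single-pass summaries
    "deep_dive": (2, 0.25),       # multi-step retrieval and synthesis
}
daily = blended_cost_per_user_day(mix)
monthly = daily * 30
```

With these placeholder inputs the blend works out to roughly $0.62 per user per day (6 × 0.02 + 2 × 0.25), most of it driven by the two deep-dive briefs. That is why caching and distillation of the expensive path tend to matter more than trimming the cheap one.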

Data Access, Privacy, Compliance, and Residency Controls

Pulse derives much of its value from reading emails and calendars, which are sensitive data domains subject to GDPR, CCPA, and internal retention policies. Enterprises will need clear scopes, auditable connectors (e.g., OAuth-based with least privilege), data lineage, and administrative controls for memory persistence. Data residency and tenant isolation remain gating requirements for regulated industries.
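A least-privilege check on connector scopes can be as simple as comparing what a connector requests against an approved set. The sketch below uses Google's read-only Gmail and Calendar OAuth scopes as the approved set. Whether Pulse requests exactly these scopes is an assumption, and `audit_scopes` is a hypothetical helper, not part of any vendor API.

```python
# Read-only Google OAuth scopes. That a briefing connector needs exactly
# these, and nothing more, is an assumption made for this example.
ALLOWED_SCOPES = frozenset({
    "https://www.googleapis.com/auth/gmail.readonly",
    "https://www.googleapis.com/auth/calendar.readonly",
})


def audit_scopes(requested: set[str], allowed: frozenset[str] = ALLOWED_SCOPES) -> dict:
    """Flag any requested scope that exceeds the approved least-privilege set."""
    excess = sorted(requested - allowed)
    return {"approved": not excess, "excess": excess}


result = audit_scopes({
    "https://www.googleapis.com/auth/gmail.readonly",
    "https://mail.google.com/",  # full mailbox access, broader than a briefing needs
})
```

In this example the audit rejects the request and lists `https://mail.google.com/` as excess, which gives administrators a concrete record to log or escalate.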

Retrieval Quality, Grounding, and Source Attribution Standards

Pulse cites sources, which helps with transparency, but accuracy depends on retrieval quality and model grounding. For regulated or high-stakes use, enterprises should require systematic evaluation, red-teaming, and defined fallback behaviors when confidence is low. Human-in-the-loop review should remain mandatory for any downstream action, at least until agent reliability improves significantly.
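One way to express the fallback and human-in-the-loop requirements is a small routing function. This is a minimal sketch under stated assumptions: the `confidence` score is presumed to come from some evaluator the enterprise trusts, the 0.8 threshold is arbitrary, and `Draft`, `Route`, and `route_draft` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    PUBLISH = "publish"          # show the brief with its citations
    FALLBACK = "fallback"        # show source links only, no synthesized claims
    NEEDS_APPROVAL = "approval"  # queue for a human decision


@dataclass
class Draft:
    text: str
    sources: list[str]
    confidence: float  # hypothetical evaluator score in [0, 1]
    is_action: bool    # True if it would act on the user's behalf


def route_draft(draft: Draft, min_confidence: float = 0.8) -> Route:
    """Decide how a draft is handled; the threshold is an illustrative value."""
    if draft.is_action:
        return Route.NEEDS_APPROVAL  # actions always require a human
    if not draft.sources or draft.confidence < min_confidence:
        return Route.FALLBACK        # ungrounded or low-confidence output
    return Route.PUBLISH


summary = Draft("Three incidents overnight.", ["https://example.com/incidents"], 0.92, False)
shaky = Draft("Outage likely caused by DNS.", [], 0.95, False)
ticket = Draft("Open P2 ticket for router A.", ["https://example.com/alert"], 0.99, True)
routes = [route_draft(d) for d in (summary, shaky, ticket)]
```

Note that the action check comes first: a high confidence score never bypasses approval, and an uncited draft falls back regardless of its score.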

Publisher Strategy for AI Citations and Structured Content

Pulse competes for the morning attention slot against news aggregators, newsletters, and internal portals. Publishers and B2B vendors should plan for attribution-aware syndication, structured content for AI-friendly ingestion, and measurement of downstream traffic from AI citations versus direct channels.

Next Steps: Pilot Proactive Briefings and Prepare for Agentic AI

Organizations can pilot proactive briefings now while preparing governance and infrastructure for agentic expansion.

CSP Actions: Network Briefs, Edge Capacity, and Bundled AI

Prototype subscriber or enterprise “network morning briefs” that consolidate outage maps, SLA adherence, device fleet status, and ticket queues. Align edge capacity and scheduling to expected morning inference peaks. Explore commercial bundles pairing connectivity with proactive AI assistance, grounded in operator data and third-party connectors.

Enterprise Actions: Pilot Scopes, Policies, and Approval Gates

Start with limited-scope pilots: inbox triage for specific teams, executive agendas, and NOC/SOC overnight digests. Define data access policies, opt-in memory settings, and retention rules. Measure time saved, accuracy, and user trust before expanding to semi-automated actions (drafting responses, creating tickets) with approval gates.

ISV Actions: Metadata, Connectors, and Attribution Measurement

Publishers and independent software vendors (ISVs) should structure content with clean metadata, stable URLs, and usage policies to maximize visibility in AI-sourced briefs. Instrument attribution-driven traffic and adjust monetization models for AI-assisted discovery. Consider native connectors for key systems, such as calendar, email, ITSM, and observability, so that your data reaches assistants through governed, permissioned channels.

Signals: Tier Expansion, Latency Peaks, Connector Breadth, Agentic Pace

Track Pulse availability beyond Pro, inference latency during morning peaks, connector breadth, and the pace of agentic features moving from read-only to act-on-behalf. Also monitor OpenAI’s capacity expansions with partners like Oracle and SoftBank as a proxy for how quickly proactive assistants can scale to mass-market tiers.