AI demand meets networking limits

Fresh survey data from senior US and European executives points to a simple truth: networks are becoming the pacing item for AI at scale.

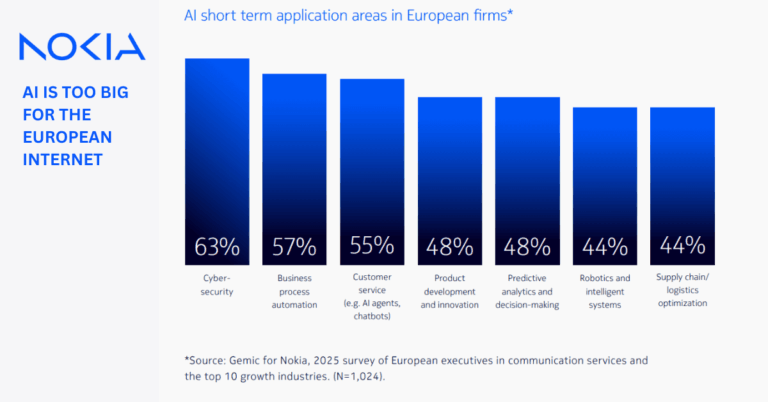

Survey insights: US vs Europe on AI networking

Across roughly 2,000 decision-makers in the telecom, datacenter, and large-enterprise sectors, a strong majority doubts that existing infrastructure will keep up with AI’s next wave. In the US, most respondents expect network buildouts to lag AI investment and call out near-term priorities such as optimizing bidirectional data flows, expanding fiber capacity, enabling real-time training feedback, and placing low-latency compute closer to users. In Europe, most enterprise leaders say current networks are not ready for broad AI adoption; many already report latency, throughput, and resiliency problems as data demands rise. The common thread is clear: without accelerated modernization, networks risk becoming the bottleneck that constrains AI outcomes.

Why AI traffic overwhelms legacy networks

AI in production is shifting traffic patterns in ways legacy architectures were not built to handle. Uplink becomes the choke point as autonomous systems, smart factories, drones, and clinical imaging stream rich datasets from the edge into training and inference pipelines. Workloads are more distributed, with data and model updates moving across campuses, metros, and regions. Latency expectations tighten because real-time feedback loops are essential for safety, quality control, and user experience. Meanwhile, resilience, security, and energy efficiency are rising as co-equal requirements. The net effect is simultaneous pressure on fiber, datacenter interconnect, metro aggregation, and wireless access.
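To make the latency point concrete, the sketch below estimates round-trip propagation delay over fiber at a few distances. It is a back-of-the-envelope illustration, not a figure from the survey: the site labels and distances are hypothetical, the fiber speed assumes a refractive index of about 1.47, and queuing, switching, and processing delays are ignored.

```python
# Approximate speed of light in silica fiber, assuming a refractive index
# of ~1.47 (about 204 km per millisecond). Illustrative assumption only.
FIBER_SPEED_KM_PER_MS = 204.0


def fiber_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over a fiber path, in milliseconds.

    Ignores queuing, switching, and processing delay, so real-world
    round-trip times will be higher.
    """
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


# Hypothetical placements for an inference endpoint.
for label, km in [("on-site edge", 1), ("metro edge", 50), ("regional cloud", 1000)]:
    print(f"{label:>14}: {fiber_rtt_ms(km):.2f} ms round trip")
```

Propagation delay alone puts a path of about 1,000 km near 10 ms round trip, which is why tight feedback loops tend to pull compute toward the metro or the site itself.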

Europe’s AI networking gap is wider

The European market is wrestling with fragmentation, energy constraints, and policy complexity just as AI demand accelerates.

Policy, spectrum, and consolidation for AI scale

European respondents highlight the need for simpler, more aligned rules across markets to speed builds, harmonized and timely spectrum releases, and competition policies that enable rational scale. These are not abstract debates; they determine how fast fiber corridors, metro edge sites, and high-capacity backbones can be delivered. Without streamlined permitting and clearer investment signals, latency targets slip and uplink-heavy corridors stall. Cross-border coherence matters because AI data and model traffic seldom respect national boundaries.

Power, sustainability, and security as core network design

Even the best-designed networks will underperform if power availability and sustainability targets lag. AI clusters push peak density and cooling, and transport upgrades drive new optical and IP layers that must be energy efficient by design. Security is also inseparable from performance as data traverses enterprise campuses, operator cores, and hyperscale fabrics. European digital leadership will depend on treating power, security, and transport as an integrated infrastructure program, not as silos.

Vendors shift to AI-native transport and routing

Suppliers are retooling portfolios to chase AI-era traffic patterns across datacenters, metros, and edge domains.

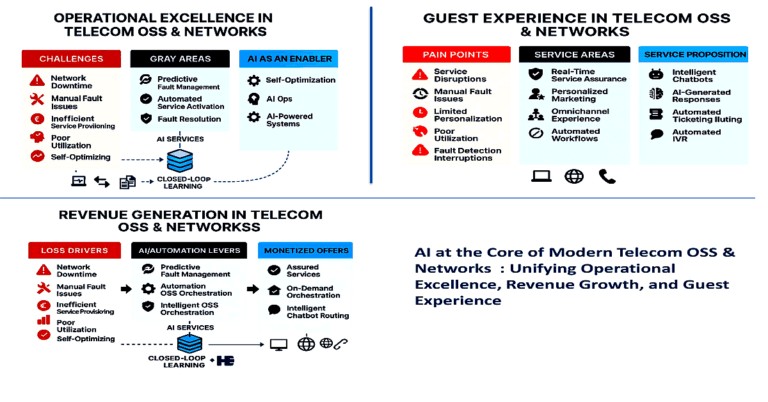

Nokia’s AI-native network strategy

Nokia is reorganizing around Network Infrastructure and Mobile Infrastructure to align with what it calls the AI supercycle. The thrust is clear: lean into optical transport, IP routing, datacenter interconnect, and mobile platforms that are “AI-native” in telemetry, automation, and latency engineering. The company’s commissioned research amplifies the case for faster investment and policy reform in both the US and Europe. Skeptics will note the self-interest, but the underlying issues the studies surface (uplink saturation, metro-edge bottlenecks, and operational complexity) are the right ones to focus on.

Industry momentum: optics, IP, and automation

Nokia is not alone. Other optical and IP leaders, including Ciena, have warned that telco and cloud transport needs are outpacing current plans. The direction of travel is consistent: denser fiber, higher-rate optics, datacenter-friendly coherent pluggables, converged IP and optical layers, and automated traffic engineering to handle dynamic, bidirectional flows. Expect more vendor roadmaps to emphasize low-latency edge fabrics, fine-grained observability, and energy-aware networking as AI deployments scale.

Next steps to modernize networks for AI

The near-term playbook is pragmatic: build for uplink, collapse latency, instrument everything, and align policy levers to speed delivery.

Operator playbook for AI-era networks

Map “AI corridors” where data creation and model traffic concentrate, then prioritize fiber densification and 400G/800G upgrades across metro and DCI domains. Push coherent pluggables and IP-over-DWDM where it simplifies layers and accelerates capacity. Modernize routing with segment routing and real-time telemetry to optimize bidirectional flows. Stand up low-latency edge locations that can host inference and pre-processing to cut backhaul. In mobile, prepare for uplink-heavy use cases with features landing in 5G-Advanced, tighter time synchronization, and deterministic QoS across transport and RAN. Treat energy per bit as a core KPI in every design choice.
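As one way to make the energy-per-bit KPI concrete, the sketch below divides power draw by delivered throughput. The function name and the two design options are hypothetical and the figures are invented for illustration; a real comparison would use measured power and traffic rather than nameplate values.

```python
def energy_per_bit_nj(power_watts: float, throughput_gbps: float) -> float:
    """Energy per transported bit, in nanojoules.

    Watts divided by gigabits per second gives joules per gigabit,
    which is numerically the same as nanojoules per bit.
    """
    if throughput_gbps <= 0:
        raise ValueError("throughput_gbps must be positive")
    return power_watts / throughput_gbps


# Hypothetical design options (illustrative numbers, not vendor data).
designs = {
    "option A": {"power_watts": 1200.0, "throughput_gbps": 3200.0},
    "option B": {"power_watts": 900.0, "throughput_gbps": 1600.0},
}

for name, d in designs.items():
    nj = energy_per_bit_nj(d["power_watts"], d["throughput_gbps"])
    print(f"{name}: {nj:.3f} nJ/bit")
```

In this invented example, the option with the higher absolute power draw is still the more efficient one per bit, which is the kind of trade-off the KPI is meant to expose.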

Enterprise network design for AI workloads

Design for data locality first. Keep capture, preprocessing, and inference close to where data is generated, and interconnect edge, campus, and cloud with high-availability DCI. Where wireless is strategic, as in robotics, machine vision, and yard automation, evaluate private 5G alongside Wi‑Fi, with an eye on uplink throughput and end-to-end latency into your training pipelines. Instrument networks with full-stack observability so model performance isn’t hostage to unknown transport issues. Negotiate SLAs that reflect AI realities: bidirectional throughput, jitter, and repair times instead of headline downlink speed.
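A minimal sketch of what checking such an SLA might look like follows. The field names and thresholds are hypothetical, and jitter is computed here as the mean absolute difference between consecutive latency samples, which is one simple definition among several. Repair times are left out because they would come from incident records rather than probe data.

```python
from statistics import mean


def jitter_ms(latencies_ms: list[float]) -> float:
    """Mean absolute difference between consecutive latency samples."""
    diffs = [abs(b - a) for a, b in zip(latencies_ms, latencies_ms[1:])]
    return mean(diffs) if diffs else 0.0


def check_sla(measured: dict, sla: dict) -> dict:
    """Return a pass/fail flag for each SLA term (hypothetical field names)."""
    return {
        "uplink": measured["uplink_mbps"] >= sla["min_uplink_mbps"],
        "downlink": measured["downlink_mbps"] >= sla["min_downlink_mbps"],
        "jitter": jitter_ms(measured["latencies_ms"]) <= sla["max_jitter_ms"],
    }


# Illustrative measurement: strong downlink, weak uplink.
measured = {
    "uplink_mbps": 180.0,
    "downlink_mbps": 940.0,
    "latencies_ms": [10.0, 12.0, 11.0, 15.0],
}
sla = {"min_uplink_mbps": 500.0, "min_downlink_mbps": 500.0, "max_jitter_ms": 3.0}

print(check_sla(measured, sla))
```

The link in this example would look healthy on a headline downlink figure while failing the uplink term, which is the gap an AI-oriented SLA is meant to close.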

Policy actions to accelerate AI connectivity

Accelerate fiber and edge builds with permitting reform and predictable timelines. Align spectrum policy to enable both wide-area 5G-Advanced and local licenses for industrial sites. Revisit consolidation thresholds where sub-scale markets impede investment. Incentivize energy-efficient transport, liquid-cooled edge sites, and grid interconnects near AI hubs. Finally, balance data sovereignty with the need for cross-border, low-latency connectivity that AI workflows demand.

AI networking milestones to track through 2026

Execution, not rhetoric, will determine whether networks unlock or constrain AI’s next chapter.

Capex mix and deployment speed

Track how far operator and cloud capex tilts toward metro fiber, 400G/800G optics, and datacenter interconnect, along with the pace at which new edge locations open. Meaningful reductions in average metro round-trip latency and shorter repair intervals will be the clearest signals.

Standards, telemetry, and automation maturity

Watch 5G-Advanced features for uplink and deterministic performance, coherent pluggable adoption for high-capacity DCI, and maturing telemetry and automation frameworks that enable AI-native operations. Operator trials that couple these ingredients with energy targets are leading indicators.

Policy clarity in EU and US markets

Expect momentum where spectrum roadmaps are firm, consolidation questions are settled, and infrastructure permitting is simplified. Regions that align these levers with power and security strategies will capture outsized AI dividends.

The conclusion is sober but actionable: AI is outgrowing yesterday’s networks, and the winners will be those who re-architect transport, edge, and policy in tandem, before the bottlenecks bite.