SoftBank exits Nvidia to fund AI platforms, data centers, and infrastructure

SoftBank has exited Nvidia and is redirecting billions into AI platforms and infrastructure, signaling where it believes the next phase of value will concentrate.

Transaction overview and capital reallocation strategy

SoftBank sold its remaining 32.1 million Nvidia shares in October for approximately $5.83 billion and separately disclosed $9.17 billion in sales of T-Mobile US shares, both part of a broader reallocation into artificial intelligence. The company reported quarterly net profit of roughly ¥2.5 trillion, lifted by gains on AI-related holdings in its Vision Fund portfolio and improved performance across its technology investments and telecom unit. Management framed the divestments as a way to fund large AI commitments while keeping the balance sheet strong.

Where the capital goes: OpenAI, Stargate, and AI infrastructure

The proceeds are earmarked for a significant expansion of SoftBank’s AI portfolio, including a major investment in OpenAI and its commitments to “Stargate,” the AI data center joint venture SoftBank launched with OpenAI, Oracle, and MGX. Stargate was announced with plans to invest up to $500 billion in U.S. AI infrastructure and will require very large fleets of high-performance accelerators to deliver model training and inference capacity at scale. Notably, SoftBank is shifting from direct exposure to a single component supplier toward leverage across model platforms and the infrastructure stack that consumes AI compute.

Arm’s role in SoftBank’s AI-era silicon strategy

Despite exiting Nvidia’s equity, SoftBank retains about 90% ownership of Arm. Arm’s CPU IP underpins billions of devices and is increasingly central to AI-era platforms, from cloud servers that pair Arm-based CPUs with AI accelerators to emerging AI PCs and energy-efficient edge systems. That positioning keeps SoftBank embedded in the silicon value chain even as it redirects capital toward AI platforms and the infrastructure that runs them.

Impact on telecom networks, cloud scale, and edge computing

SoftBank’s pivot crystallizes a broader market shift: AI value is migrating from single-vendor chip exposure to end-to-end platforms and the networks that power them.

Shift from chips to AI platforms and high-capacity data center fabrics

Hyperscalers and AI platforms are locking in multi-quarter supplies of accelerators and building dense, latency-optimized data center fabrics. For operators and wholesale providers, this drives demand for high-capacity east–west connectivity, 400/800G Ethernet, low-latency optical waves, and high-performance data center interconnect (DCI). Expect tighter coupling between compute clusters and network design: congestion-aware fabrics, RDMA over Converged Ethernet (RoCE), and coherent optics for metro and long-haul interconnect.
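A rough sizing calculation shows why AI clusters push operators toward 400/800G DCI. The Python sketch below is a back-of-the-envelope model under stated assumptions: the per-accelerator bandwidth, the share of traffic that crosses sites, and the 70% peak-utilization target are hypothetical inputs, not figures from SoftBank, Stargate, or any vendor, and the function name `dci_links_needed` is illustrative.

```python
import math


def dci_links_needed(accelerators: int,
                     gbps_per_accelerator: float,
                     inter_site_fraction: float,
                     link_gbps: float = 800.0,
                     max_utilization: float = 0.7) -> int:
    """Estimate DCI links needed between two sites of one cluster.

    Every input is an illustrative assumption, not a vendor or SoftBank figure.
    """
    if not 0 < max_utilization <= 1:
        raise ValueError("max_utilization must be in (0, 1]")
    if not 0 <= inter_site_fraction <= 1:
        raise ValueError("inter_site_fraction must be in [0, 1]")
    # Peak bandwidth expected to leave the site across the interconnect.
    demand_gbps = accelerators * gbps_per_accelerator * inter_site_fraction
    # Capacity we are willing to use on each link, leaving headroom for bursts.
    usable_per_link_gbps = link_gbps * max_utilization
    return math.ceil(demand_gbps / usable_per_link_gbps)


# Hypothetical: 4,096 accelerators at 400 Gb/s each, 5% of traffic crossing sites.
print(dci_links_needed(4096, 400, 0.05))                 # 147 (800G ports)
print(dci_links_needed(4096, 400, 0.05, link_gbps=400))  # 293 (400G ports)
```

Halving the port speed roughly doubles the link count, which is the pressure that moves DCI designs from 400G toward 800G and beyond.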

Power, liquid cooling, and site selection as AI buildout constraints

Projects on the scale of Stargate concentrate not just capex but megawatts. Grid availability, liquid cooling readiness, and land entitlement become gating factors. Telecom operators, neutral hosts, and edge colocation providers with power headroom and proximity to fiber routes can differentiate with AI-ready sites, heat reuse strategies, and demand-response programs that help platforms scale sustainably.
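Rack density is what turns AI capex into megawatts. A minimal sketch, assuming power usage effectiveness (PUE, total facility power divided by IT equipment power) is the only overhead factor; the rack counts, densities, PUE values, and function names below are hypothetical.

```python
import math


def facility_power_mw(racks: int, kw_per_rack: float, pue: float) -> float:
    """Total facility draw in MW: IT load multiplied by PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_load_kw = racks * kw_per_rack
    return it_load_kw * pue / 1000.0


def racks_supported(grid_mw: float, kw_per_rack: float, pue: float) -> int:
    """How many racks of a given density fit inside a fixed grid allocation."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    # Each rack consumes its IT load plus cooling and power-distribution overhead.
    facility_kw_per_rack = kw_per_rack * pue
    return math.floor(grid_mw * 1000.0 / facility_kw_per_rack)


# Hypothetical: 1,000 liquid-cooled racks at 100 kW each, PUE 1.2.
print(facility_power_mw(1000, 100, 1.2))  # 120.0
# Hypothetical: a 50 MW grid allocation, 130 kW racks, PUE 1.25.
print(racks_supported(50, 130, 1.25))     # 307
```

Run in reverse, the same arithmetic shows why grid allocation, rather than floor space, often caps how much AI capacity a site can host.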

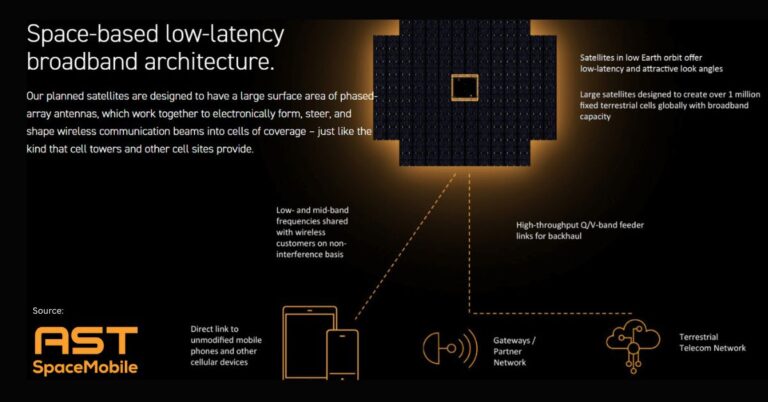

Arm CPUs and DPUs in telco cloud, Open RAN, and edge AI

Arm-based CPUs and Arm-powered DPUs/SmartNICs are gaining traction for energy-efficient cloud-native network functions and secure offload in telco clouds. As operators re-platform for 5G Advanced, Open RAN, and edge AI, Arm ecosystems present a path to lower TCO and power per workload—especially when combined with accelerators for inference at the near edge. This dovetails with ongoing efforts in ETSI NFV, O-RAN Alliance, TIP, and GSMA Open Gateway to standardize and monetize programmable networks.

Strategy outlook: risks, timing, and AI market dynamics

SoftBank is trading single-asset momentum for broader exposure to where AI demand and economics may consolidate next.

Balancing execution risk against potential AI bubble

Concerns about an AI bubble are real, but so is the risk of under-exposure as platforms monetize models, agents, and vertical solutions. Large AI data centers will still depend heavily on Nvidia-class accelerators in the near term, even as competition from AMD and custom silicon intensifies. The pivot does not bet against Nvidia; it seeks leverage to consumption and orchestration layers where pricing power and recurring revenue can be stronger.

Policy, supply-chain constraints, and AI/network standards

Export controls, antitrust scrutiny, and data sovereignty rules will shape AI buildouts and cross-border capacity planning. Optical components (800G/1.6T), advanced packaging, and liquid cooling supply chains remain tight. Standards work across O-RAN, 3GPP’s 5G Advanced, and GSMA Open Gateway will influence how AI workloads integrate with networks, and how telcos participate in the AI value chain via APIs and exposure platforms.

Action plan for operators, vendors, and enterprise IT

Translate SoftBank’s signal into near-term plans for capacity, location, and architecture.

Playbook for operators and neutral hosts

Productize AI-grade connectivity: 400/800G DCI, guaranteed low-latency routes, and burstable high-density cross-connects near major AI campuses. Secure power and cooling roadmaps for high-density racks, and position edge POPs for inference use cases in industrial, retail, and media. Align Open Gateway APIs with AI services to create monetizable, network-aware AI experiences.
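For guaranteed low-latency routes, fiber distance sets a floor that no equipment can beat. A minimal sketch, assuming a group index of about 1.468 for standard single-mode fiber (roughly 4.9 µs per km one way, or about 1 ms round trip per 100 km) and a hypothetical fixed allowance for equipment delay. Route length here means the actual fiber path, which is usually longer than the straight-line distance; the function names are illustrative.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s


def fiber_rtt_ms(route_km: float,
                 group_index: float = 1.468,
                 equipment_ms: float = 0.0) -> float:
    """Round-trip time over a fiber route: propagation both ways plus equipment."""
    if route_km < 0:
        raise ValueError("route_km must be non-negative")
    # Light in glass travels at roughly c / n, where n is the group index.
    one_way_s = route_km * group_index / C_KM_PER_S
    return 2 * one_way_s * 1000.0 + equipment_ms


def meets_rtt_sla(route_km: float, sla_rtt_ms: float, **kwargs) -> bool:
    """True if the route's estimated RTT fits within the committed budget."""
    return fiber_rtt_ms(route_km, **kwargs) <= sla_rtt_ms


# Hypothetical routes between an AI campus and a metro POP.
print(round(fiber_rtt_ms(100), 3))                          # 0.979
print(meets_rtt_sla(300, sla_rtt_ms=2.0))                   # False
print(meets_rtt_sla(80, sla_rtt_ms=1.0, equipment_ms=0.1))  # True
```

Because propagation dominates on metro and long-haul spans, a latency SLA is effectively a promise about route geography, which is why proximity to AI campuses matters.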

Guidance for vendors and systems integrators

Offer reference designs for Arm-based CNFs, DPU-enhanced security, and Ethernet-based AI fabrics with observability and lossless transport. Prioritize O-RAN accelerators, AI lifecycle tools, and FinOps/TelcoOps capabilities that optimize cost per token and cost per inference session. Build go-to-market alliances with hyperscalers, OpenAI ecosystem partners, and silicon vendors to de-risk supply.
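Cost per token and cost per inference session reduce to simple ratios once serving cost and throughput are known. The sketch below assumes a single blended hourly cost for the serving capacity, a measured aggregate token throughput, and a utilization factor; it treats input and output tokens alike, which real pricing often does not. The `ServingCapacity` type, function names, and all numbers in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServingCapacity:
    hourly_cost_usd: float    # blended cost of the serving capacity per hour (assumed)
    tokens_per_second: float  # aggregate token throughput while busy (assumed)
    utilization: float        # share of each hour actually spent serving traffic


def cost_per_million_tokens(cap: ServingCapacity) -> float:
    """Hourly cost spread over the tokens actually served in that hour."""
    tokens_per_hour = cap.tokens_per_second * 3600 * cap.utilization
    if tokens_per_hour <= 0:
        raise ValueError("throughput and utilization must be positive")
    return cap.hourly_cost_usd / tokens_per_hour * 1_000_000


def cost_per_session(cap: ServingCapacity, tokens_per_session: float) -> float:
    """Cost of one inference session, treating input and output tokens alike."""
    return cost_per_million_tokens(cap) * tokens_per_session / 1_000_000


# Hypothetical: $72/hour, 10,000 tokens/s, busy 50% of the time.
cap = ServingCapacity(hourly_cost_usd=72.0, tokens_per_second=10_000, utilization=0.5)
print(round(cost_per_million_tokens(cap), 2))  # 4.0
print(round(cost_per_session(cap, 2_000), 4))  # 0.008
```

In this model, doubling utilization halves cost per token at the same hourly price, so utilization is as much a cost lever as hardware pricing.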

Recommendations for enterprise buyers

Adopt a multi-model, multi-cloud strategy and reserve colocation capacity with high power density and liquid cooling options. Engineer networks for AI traffic patterns—latency-sensitive east–west flows and WAN backhaul for data pipelines. Evaluate Arm instances for workloads where performance-per-watt matters, and embed governance for data, security, and cost control from day one.

Leading indicators and milestones to track

Near-term milestones will indicate where returns concentrate and how fast capacity will scale.

Capital commitments, partnerships, and capacity reservations

Watch for SoftBank’s finalized stake in OpenAI, the close of Stargate financing, Oracle’s role, and the mix of accelerator vendors deployed. Track multi-year capacity reservations and MW commitments in key regions.

Arm monetization in data center, AI PCs, and DPUs

Look for licensing growth in data center and AI PCs, adoption of Grace-class servers, and the penetration of Arm-based DPUs in telco clouds and secure edge sites.

Network expansions tied to AI campuses and 800G/1.6T optics

Track announcements of 800G/1.6T optics, new subsea and terrestrial routes tied to AI data centers, and operator disclosures on power, cooling, and edge adjacency designed explicitly for AI workloads.