AI Capex Supercycle and Hyperscale Buildout

Meta, Alphabet, and Microsoft signaled that AI infrastructure is now a multi-year capital priority measured in tens of billions of dollars per year at each company.

The New AI Capex Run-Rate

In their latest results, Meta guided 2025 capital expenditures to $70–72 billion, with an even larger step-up expected the following year. Alphabet raised its 2025 capex outlook to $91–93 billion, up sharply from prior estimates. Microsoft reported $34.9 billion of capex in the most recent quarter, materially above expectations and up strongly year over year, with management indicating spending will continue to grow. Taken together, these figures point to the largest synchronized build-out of compute, storage, and networking capacity in the history of cloud computing.
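As a rough sanity check on scale, the figures above can be combined into a single run-rate. The sketch below is illustrative only: it takes the midpoint of each guidance range and naively multiplies Microsoft's single reported quarter by four, which is an assumption rather than company guidance, and it ignores differences in fiscal calendars and capex definitions.

```python
# Figures in USD billions, taken from the paragraph above.
META_GUIDE = (70.0, 72.0)      # full-year capex guidance range
ALPHABET_GUIDE = (91.0, 93.0)  # 2025 capex outlook range
MSFT_QUARTER = 34.9            # capex reported for a single quarter


def midpoint(low: float, high: float) -> float:
    """Midpoint of a guidance range."""
    return (low + high) / 2.0


def annualize(quarterly: float, quarters: int = 4) -> float:
    """Naive run-rate: assumes every quarter matches the reported one."""
    return quarterly * quarters


def combined_run_rate() -> float:
    """Sum of the two guidance midpoints and the annualized quarter."""
    return (
        midpoint(*META_GUIDE)
        + midpoint(*ALPHABET_GUIDE)
        + annualize(MSFT_QUARTER)
    )


if __name__ == "__main__":
    print(f"Combined run-rate: ~${combined_run_rate():.1f}B per year")
```

Under these assumptions the three companies together are running at roughly $300 billion of capex per year. The annualized Microsoft figure is the softest input, since management indicated spending will keep growing.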

What’s Driving AI Spend Now

Investor enthusiasm rests on revenue momentum and visible AI demand signals. Alphabet posted record quarterly revenue, boosted by cloud growth and broader AI product integration. Microsoft reported double-digit growth in cloud and AI services. Meta’s ads and engagement metrics continue to improve as the company embeds AI into ranking and creative tools. On the consumer side, Google’s Gemini reports hundreds of millions of monthly users, and ChatGPT continues to scale, reinforcing the need for more inference capacity in the near term and more training cycles over time.

Building AI Capacity Ahead of Demand

Management across all three firms made a common point: they are building ahead of demand to be ready for more capable models and usage spikes. Meta is hiring elite AI talent while reshaping teams to increase velocity. Microsoft emphasized designing data centers to be “fungible” and continuously modernized—upgrading GPUs, networking, and software efficiency in short cycles rather than locking into a single hardware generation. The strategic logic is clear: pre-position compute, memory, and network fabric so product teams and customers can move fast as model capabilities improve.

Drivers of AI Spend: Cloud, Ads, GenAI

Behind the capex surge is a stack-wide race to scale training, squeeze inference costs, and differentiate with AI-native experiences.

Cloud AI Workloads Move From Pilots to Production

Microsoft’s Azure AI services and Alphabet’s Google Cloud both posted robust growth, reflecting enterprise pilots maturing into production workloads. Customers want turnkey access to foundation models, vector databases, data pipelines, and security controls, all delivered with predictable SLAs. This is pulling through demand for high-density clusters, high-bandwidth memory, and ultra-low-latency interconnects in new and existing regions.

Low-Latency AI Inference for Consumer Platforms

Google is threading AI across Search, YouTube, and Android via Gemini. Meta is using AI to improve ad targeting, creative, and content ranking across Facebook, Instagram, and WhatsApp, while advancing the Llama ecosystem. These use cases are inference-heavy and sensitive to latency, driving investment in edge caches, model optimization, and specialized accelerators to reduce cost per token and response times.
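To make "cost per token" concrete, here is a minimal sketch of the usual back-of-the-envelope calculation: accelerator cost per hour divided by tokens served per hour. The function name and every input value are hypothetical and chosen only for illustration; real serving costs also depend on batching, memory limits, and networking.

```python
def cost_per_million_tokens(
    hourly_cost_usd: float,
    tokens_per_second: float,
    utilization: float = 1.0,
) -> float:
    """Serving cost in USD per one million generated tokens.

    hourly_cost_usd:   all-in hourly cost of one accelerator (assumed input)
    tokens_per_second: sustained throughput at full load (assumed input)
    utilization:       fraction of each hour spent serving traffic, in (0, 1]
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return hourly_cost_usd / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical numbers: a $4/hour accelerator at 50% utilization.
    baseline = cost_per_million_tokens(4.0, 2000, utilization=0.5)
    # Same hardware after an optimization that doubles throughput.
    optimized = cost_per_million_tokens(4.0, 4000, utilization=0.5)
    print(f"baseline:  ${baseline:.2f} per 1M tokens")
    print(f"optimized: ${optimized:.2f} per 1M tokens")
```

The relationship is linear in both directions: doubling throughput or doubling utilization halves the cost per token, which is why model optimization and specialized accelerators are attractive at consumer scale.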

Silicon, Systems, and Networks Remain Bottlenecks

The spend is flowing into GPUs and custom silicon (e.g., TPUs and other in-house AI accelerators), HBM supply, liquid cooling, and high-radix switching. Cluster network fabrics are moving to 800G and planning for 1.6T Ethernet, with RDMA and congestion-control tuning at massive scale. Memory-semantic fabrics (CXL 3.x), chiplet interconnects (UCIe), and Open Compute designs are all in play to improve utilization and lower total cost of ownership. Software efficiency (compilers, schedulers, quantization, and serving stacks) remains a decisive lever.
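One way to see why quantization is such a lever is to estimate the memory needed just to hold model weights at different precisions. The sketch below is a simplification under stated assumptions: the 70-billion-parameter model and the 80 GB accelerator are hypothetical examples, and the estimate ignores KV cache, activations, and runtime overhead.

```python
import math

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for model weights only, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_devices(num_params: float, precision: str, hbm_gb: float) -> int:
    """Fewest accelerators whose combined HBM can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, precision) / hbm_gb)


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model
    hbm_gb = 80.0  # hypothetical accelerator with 80 GB of HBM
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        devices = min_devices(params, precision, hbm_gb)
        print(f"{precision}: {gb:.0f} GB of weights, at least {devices} device(s)")
```

Moving the same model from 16-bit to 4-bit weights cuts the weight footprint by a factor of four, which can mean fewer accelerators per replica, provided accuracy holds up at the lower precision.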

What AI Capex Means for Telecom and Infra

Telecom, colo, and network vendors sit at the center of the AI build-out, from long-haul optics to edge sites and power.

Data Center Interconnect and Backbone Upgrades

Hyperscaler AI clusters require fat pipes between campuses and regions, accelerating demand for 800G optics, coherent pluggables, and IP/optical convergence. Expect renewed DCI cycles, metro fiber densification, and capacity augments on subsea routes. Vendors across switching, routing, and optical transport—plus builders of modular data halls—are entering a multi-year upgrade window.

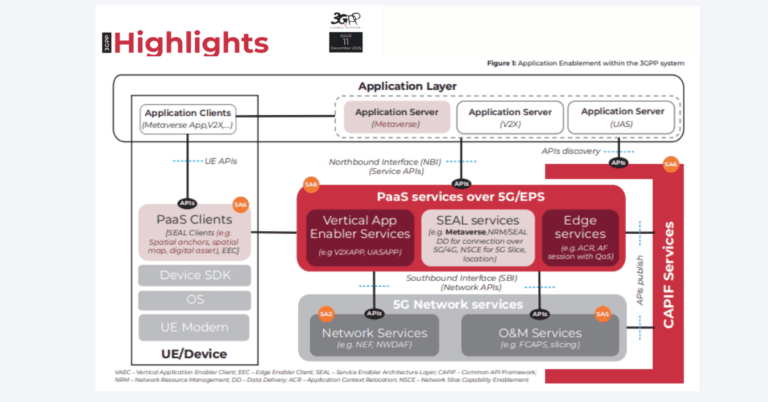

Edge AI and 5G Monetization

Low-latency AI opens opportunities for carrier-hosted multi-access edge computing (MEC), content personalization, and network analytics. 5G Advanced and the O-RAN Alliance ecosystem enable AI-driven RAN optimization via the near-real-time and non-real-time RAN Intelligent Controllers (RICs). Telecom operators can package “AI at the edge” with network APIs, location, and QoS tiers for industrial, retail, and media use cases.

Power, Siting, and Sustainability for AI

Multi-gigawatt AI campuses are straining grids and permitting processes. Power purchase agreements, on-site generation, and heat reuse are becoming strategic differentiators. Liquid cooling shifts facility design, while power usage effectiveness (PUE) and water usage KPIs face greater scrutiny from regulators and communities. Carriers and colos that can deliver capacity with credible sustainability roadmaps will have an edge in winning hyperscaler and enterprise AI workloads.
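The efficiency KPIs mentioned above reduce to simple ratios. PUE is total facility energy divided by IT equipment energy, and water usage effectiveness (WUE) is site water use in liters divided by IT energy in kWh. The sketch below uses those standard definitions; the sample values are hypothetical.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT energy."""
    if it_kwh <= 0:
        raise ValueError("it_kwh must be positive")
    return total_facility_kwh / it_kwh


def wue(water_liters: float, it_kwh: float) -> float:
    """Water usage effectiveness in liters per kWh of IT energy."""
    if it_kwh <= 0:
        raise ValueError("it_kwh must be positive")
    return water_liters / it_kwh


def facility_power_mw(it_load_mw: float, pue_value: float) -> float:
    """Grid power a site must secure for a given IT load and PUE."""
    return it_load_mw * pue_value


if __name__ == "__main__":
    # Hypothetical campus: 1,000 MW of IT load at two PUE levels.
    for p in (1.5, 1.2):
        print(f"PUE {p}: {facility_power_mw(1000, p):.0f} MW from the grid")
```

At gigawatt scale, the gap between a PUE of 1.5 and 1.2 in this example is 300 MW of grid capacity that does no computing, which helps explain why cooling design and siting have become strategic decisions.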

AI Capex Risks, Constraints, and Key Signals

The spend is massive, but returns hinge on unit economics, supply chains, and policy.

ROI Pressure and Earnings Volatility in AI

Training costs remain high, and inference at scale can erode margins if models are not optimized. Microsoft flagged volatility tied to its strategic AI partnerships, separating those effects from forward guidance. Watch for improving price/performance per model generation, monetization of copilots and AI assistants, and customer adoption beyond pilots as leading indicators of durable returns.

Supply Chain Constraints and Standardization

HBM capacity, advanced packaging, and optics lead times are critical constraints. Standards like CXL for memory pooling, UCIe for chiplets, and 800G/1.6T Ethernet will influence interoperability and vendor diversity. Buyers should push for multi-vendor fabrics, open telemetry, and portability across accelerators to avoid lock-in.

Policy, Sovereignty, and AI Safety

Data residency rules, export controls on advanced chips, and emerging AI safety frameworks will shape where and how capacity is deployed. Expect continued growth of sovereign AI regions, confidential computing, and model provenance tooling to satisfy regulators and sector-specific compliance.

Action Plan for Telecom and Enterprise IT

Translate the hyperscaler playbook into pragmatic steps that harden networks, control cost, and accelerate AI value.

Harden Networks for AI Traffic Patterns

Plan for east-west-heavy workloads with 400/800G upgrades, ECMP-aware designs, and SRv6. Invest in telemetry, eBPF-based observability, and AI-assisted NOC workflows to improve SLO attainment. Align peering and DCI capacity with expected AI inference spikes tied to product launches and seasonal demand.
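To illustrate what "ECMP-aware" means in practice, the sketch below hashes a flow's 5-tuple to pick one of several equal-cost next hops. It is a simplified model, not a vendor implementation: real switches use hardware hash functions rather than SHA-256, and the `Flow` type, hop names, and addresses are hypothetical.

```python
import hashlib
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int  # IP protocol number: 6 = TCP, 17 = UDP


def ecmp_next_hop(flow: Flow, next_hops: list[str]) -> str:
    """Pick a next hop by hashing the 5-tuple (stand-in for a switch hash)."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]


def path_load(flows: list[Flow], next_hops: list[str]) -> Counter:
    """Count how many flows land on each next hop."""
    return Counter(ecmp_next_hop(f, next_hops) for f in flows)


if __name__ == "__main__":
    spines = ["spine-1", "spine-2", "spine-3", "spine-4"]
    # A handful of long-lived flows, as is typical of collective traffic
    # between accelerators. UDP port 4791 is the RoCEv2 destination port.
    flows = [
        Flow(f"10.0.0.{i}", f"10.0.1.{i}", 49152 + i, 4791, 17)
        for i in range(8)
    ]
    for hop, count in sorted(path_load(flows, spines).items()):
        print(hop, count)
```

Because every packet of a flow hashes to the same path, a small number of very large flows can pile onto one link while others sit idle. That is the design concern behind ECMP-aware planning: per-path telemetry, hash tuning, or finer-grained load balancing may be needed when traffic consists of few, heavy flows.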

Co-Invest and Partner with Clear Economics

Structure capacity deals with hyperscalers and systems integrators that balance revenue guarantees, site control, and power roadmaps. For operators, bundle MEC, private 5G, and data services with cloud on-ramps. For enterprises, negotiate model and serving choices across providers to match workload sensitivity, cost, and compliance.

Optimize AI TCO and Avoid Lock-In

Adopt a reference architecture that supports multiple accelerators, CXL-enabled memory tiers, and containerized model serving. Use open model formats and evaluation tooling, manage inference with autoscaling and caching, and apply FinOps discipline to tokens, context length, and retrieval. Pilot both proprietary and open models (e.g., Llama-family) to preserve flexibility as capabilities and pricing evolve.
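FinOps discipline on tokens can start with a very small cost model. The sketch below is one possible shape for it, under stated assumptions: prices are quoted per million input and output tokens, cache hits are treated as free, and all names and numbers are hypothetical rather than any provider's actual pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical per-million-token prices in USD."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Model cost of a single uncached request."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


def monthly_cost(
    pricing: Pricing,
    requests: int,
    input_tokens: int,
    output_tokens: int,
    cache_hit_rate: float = 0.0,
) -> float:
    """Monthly spend, assuming cached responses incur no model cost."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be in [0, 1]")
    billable_requests = requests * (1.0 - cache_hit_rate)
    return billable_requests * request_cost(pricing, input_tokens, output_tokens)


if __name__ == "__main__":
    pricing = Pricing(input_per_million=1.0, output_per_million=4.0)
    # Long retrieval-augmented prompt versus a trimmed context window.
    long_ctx = monthly_cost(pricing, 1_000_000, 8000, 500)
    short_ctx = monthly_cost(pricing, 1_000_000, 2000, 500, cache_hit_rate=0.3)
    print(f"long context, no cache:   ${long_ctx:,.0f}")
    print(f"short context, 30% cache: ${short_ctx:,.0f}")
```

Even a model this simple makes the main levers visible: context length drives input cost, response length drives output cost, and the cache hit rate scales the whole bill.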

The bottom line: AI infrastructure is entering an investment supercycle; winners will pair scale with efficiency, open interfaces, and disciplined go-to-market motions that convert capex into recurring, defensible revenue.