Disney’s $1B OpenAI deal reshapes licensed AI media and network demand

Disney’s equity investment and multi‑year licensing pact with OpenAI brings household‑name IP into Sora and ChatGPT Images while pushing user‑generated synthetic video into mainstream distribution.

Deal terms and platform rollout

Disney will invest $1 billion in OpenAI and become Sora’s first major content licensing partner, enabling fans to generate and share short videos that draw on more than 200 characters and environments from Disney, Pixar, Marvel, and Star Wars. The agreement spans three years, excludes actor likenesses and voices, and extends to ChatGPT Images for IP‑compliant image generation. Disney will adopt OpenAI APIs across products and operations, including new Disney+ features and tools for employee productivity, and may showcase select user creations on its streaming service.

Legal pushback on unlicensed AI use

On the same day, Disney sent a legal demand to Google alleging unauthorized use of its IP to train and generate outputs from Google’s video and image models, underscoring an industry shift from scraping to licensed data. The juxtaposition highlights a bifurcated strategy: embrace AI platforms that pay for access and accept brand‑safety controls, while pressuring rivals to curb unlicensed outputs.

How the Disney–OpenAI pact reshapes AI media

This agreement formalizes licensed synthetic media at scale and accelerates the convergence of UGC, premium IP, and AI tooling.

Brand‑safe, licensed UGC with iconic IP

By allowing fan‑made clips with iconic characters inside Sora, Disney is turning brand‑safe, guided creation into a strategic channel. Expect templated prompts, constrained scene packs, and usage policies that balance creativity with IP protection. The exclusion of talent likenesses aligns with ongoing labor and rights frameworks, and will likely be enforced via guardrails in the model and post‑generation filtering.

AI creation linked to Disney+ distribution

Bringing user‑generated Sora content into Disney+, even as a curated showcase, tightens the loop between AI creation and premium streaming distribution. This moves AI video from social novelty toward monetizable programming, with implications for editorial standards, content provenance, and advertiser brand safety.

Enterprise rollout: APIs, ChatGPT, productivity

Disney’s API commitment and ChatGPT rollout to employees indicate that generative AI will touch both consumer experiences and internal workflows (creative tooling, marketing ops, customer service, localization). For enterprises, this is a board‑level signal: AI is shifting from pilots to production with clear spend, governance, and vendor alignment.

Why it matters: economics, risk, regulation

The economics of AI video, IP risk, and network capacity are colliding as generative tools reach mass audiences.

From pilots to platform economics

Licensed IP inside Sora sets a template for how model providers and rights holders can co‑design guardrails, revenue sharing, and usage analytics. It encourages other studios and leagues to negotiate similar terms rather than rely on enforcement alone. For AI platforms, it legitimizes walled‑garden datasets and raises the bar for safety and provenance.

Reputation, provenance, and policy pressure

Public concern over deepfakes and “AI sludge” on social feeds is pushing regulators toward disclosure and provenance requirements, reflected in the EU AI Act’s transparency obligations for AI‑generated content and in U.S. guidance on watermarking and content credentials. Disney’s approach offers a defensible path for branded AI media that telecom operators, streamers, and advertisers can support without amplifying harmful content.

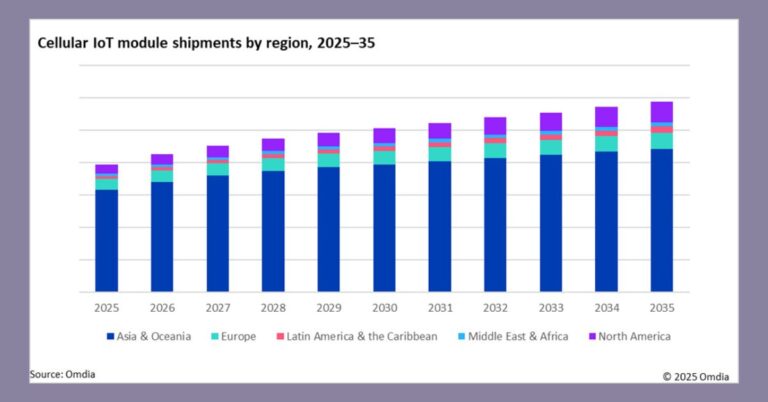

Network, CDN, and edge implications for AI video

Generative video will reshape traffic patterns, content delivery, and compute placement across clouds, CDNs, and 5G edges.

Traffic patterns and capacity planning

Short‑form, high‑bitrate clips generated at scale will look like social video surges with a twist: output may be more personalized and volume more bursty. Operators and CDNs should model higher cache churn, more origin fetches for unique assets, and potentially heavier upstream traffic if creators upload prompt packs and edits. Expect increased peering with AI inference regions and higher GPU‑backed egress from public clouds.
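A back‑of‑the‑envelope model makes the cache‑churn point concrete. The sketch below assumes, purely for illustration, that one‑off personalized clips (requested by no other viewer) always miss the cache while shared or template clips hit at a fixed ratio. The `TrafficScenario` fields and every number are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass


@dataclass
class TrafficScenario:
    requests: int            # clip requests in the planning window
    unique_fraction: float   # share of requests for one-off personalized clips (assumed)
    shared_hit_ratio: float  # cache hit ratio for shared/template clips (assumed)
    avg_clip_mb: float       # average delivered clip size in megabytes (assumed)


def cache_hit_ratio(s: TrafficScenario) -> float:
    """Treat one-off clips as guaranteed misses; shared clips hit at shared_hit_ratio."""
    return (1.0 - s.unique_fraction) * s.shared_hit_ratio


def origin_egress_gb(s: TrafficScenario) -> float:
    """Every cache miss is fetched from origin or the inference region."""
    misses = s.requests * (1.0 - cache_hit_ratio(s))
    return misses * s.avg_clip_mb / 1000.0  # MB -> GB (decimal units)


# Placeholder scenarios: same demand, with and without personalized output.
baseline = TrafficScenario(1_000_000, 0.0, 0.95, 20.0)
personalized = TrafficScenario(1_000_000, 0.4, 0.95, 20.0)

for name, s in (("baseline", baseline), ("personalized", personalized)):
    print(f"{name}: hit ratio {cache_hit_ratio(s):.2f}, "
          f"origin egress {origin_egress_gb(s):.0f} GB")
```

With these placeholder inputs, shifting 40% of requests to one‑off clips drops the hit ratio from 0.95 to 0.57 and multiplies origin egress by about 8.6x, which is the kind of sensitivity capacity plans should test.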

Hybrid inference: edge vs. cloud

Near‑real‑time video generation at consumer scale will stress centralized GPU pools. Telcos and streamers should test hybrid patterns: pre‑rendered templates at CDN edges, lightweight on‑device customization, and regionalized inference for latency‑sensitive features. Offerings like AWS Wavelength, Azure Operator Nexus, and operator GPU‑as‑a‑service pilots could anchor these workloads where latency or data locality matters.
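The hybrid pattern can be expressed as a placement policy that tries the cheapest option first. This is a minimal sketch assuming a three‑way request taxonomy and a single hypothetical round‑trip threshold to a central GPU region; it does not call any AWS Wavelength, Azure Operator Nexus, or operator API, and the threshold is a placeholder to tune per network.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    CDN_EDGE_TEMPLATE = "pre-rendered template served from a CDN edge"
    ON_DEVICE = "lightweight customization on the device"
    REGIONAL_INFERENCE = "regional or edge-zone GPU inference"
    CENTRAL_GPU = "centralized cloud GPU pool"


# Hypothetical round-trip time to the central GPU region; tune per network.
CENTRAL_ROUND_TRIP_MS = 120
KINDS = {"template", "light_edit", "full_generation"}  # assumed taxonomy


@dataclass
class GenerationRequest:
    kind: str                   # one of KINDS
    device_can_render: bool     # client reports enough on-device compute
    latency_budget_ms: int      # end-to-end latency the feature can tolerate
    data_must_stay_local: bool  # data-locality or rights constraint on inference


def place(req: GenerationRequest) -> Placement:
    if req.kind not in KINDS:
        raise ValueError(f"unknown request kind: {req.kind}")
    # Cheapest path: nothing new is generated, so serve the cached template.
    if req.kind == "template":
        return Placement.CDN_EDGE_TEMPLATE
    # Small edits on top of a template can run on capable clients.
    if req.kind == "light_edit" and req.device_can_render:
        return Placement.ON_DEVICE
    # Tight latency budgets or locality rules keep inference in-region.
    if req.data_must_stay_local or req.latency_budget_ms < CENTRAL_ROUND_TRIP_MS:
        return Placement.REGIONAL_INFERENCE
    # Everything else can queue for the shared, better-utilized central pool.
    return Placement.CENTRAL_GPU


print(place(GenerationRequest("full_generation", False, 80, False)).value)
```

Ordering the checks from cheapest to most constrained is the design choice here: a request reaches the central pool only when no cached, on‑device, or in‑region option is required.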

Provenance, watermarking, and delivery‑path filtering

Network and platform operators will be asked to preserve and verify content credentials end‑to‑end. Support for C2PA/Content Credentials, robust invisible watermark detection, and AI‑based moderation pipelines at the edge will become table stakes for distribution partners carrying branded synthetic media. Build hooks to enforce age gating, disallowed mashups, and geofenced rights at delivery time.
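Delivery‑time enforcement can be sketched as a gate that runs before an asset is served. The example assumes credential validation (for example, against a C2PA manifest) happens upstream and arrives here as a boolean; the `Asset` fields, franchise names, and mashup rule are hypothetical stand‑ins for terms a real licensing contract would define.

```python
from dataclasses import dataclass

# Hypothetical contract rule: franchise pairs that may not appear in one clip.
DISALLOWED_PAIRS = {frozenset({"franchise_a", "franchise_b"})}


@dataclass(frozen=True)
class Asset:
    credentials_valid: bool           # result of an upstream C2PA/Content Credentials check (assumed)
    min_viewer_age: int               # age gate attached to the asset
    franchises: frozenset[str]        # franchise tags declared or detected for the clip
    licensed_regions: frozenset[str]  # country codes where distribution rights apply


def can_deliver(asset: Asset, viewer_age: int, viewer_region: str) -> tuple[bool, str]:
    """Return (allowed, reason), checking provenance first and rights last."""
    if not asset.credentials_valid:
        return False, "missing or invalid content credentials"
    if viewer_age < asset.min_viewer_age:
        return False, "age gate"
    if viewer_region not in asset.licensed_regions:
        return False, "outside licensed territory"
    for pair in DISALLOWED_PAIRS:
        if pair <= asset.franchises:  # both franchises of a banned pair are present
            return False, "disallowed mashup"
    return True, "ok"


clip = Asset(True, 13, frozenset({"franchise_a"}), frozenset({"US", "CA"}))
print(can_deliver(clip, 16, "FR"))
```

Returning a reason alongside the decision gives partners an audit trail for each blocked delivery rather than a silent failure.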

Cost control and sustainability

The unit economics of AI video are still volatile. Caching strategies, content de‑duplication, and GPU utilization scheduling will decide margins. Operators should quantify the cost delta of serving highly personalized AI clips versus traditional streaming and explore dynamic quality scaling tied to device, network condition, and ad load.
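A per‑view cost formula helps quantify that delta: generation cost amortized over every view of the same clip, plus delivery cost. The sketch assumes GPU time and egress dominate and ignores encoding, storage, and moderation; the `ClipCost` fields are hypothetical, and all prices in the usage lines are placeholders rather than quotes from any provider.

```python
from dataclasses import dataclass


@dataclass
class ClipCost:
    gpu_seconds_per_clip: float   # generation time on one GPU (0 for pre-encoded content)
    gpu_price_per_hour: float     # blended $ per GPU-hour (placeholder)
    views_per_clip: float         # times each generated clip is watched
    delivered_gb_per_view: float  # bytes on the wire per view after adaptive bitrate
    egress_price_per_gb: float    # blended CDN/cloud egress $ per GB (placeholder)

    def __post_init__(self) -> None:
        if self.views_per_clip <= 0:
            raise ValueError("views_per_clip must be positive")

    def per_view(self) -> float:
        generation = self.gpu_seconds_per_clip * self.gpu_price_per_hour / 3600.0
        # Generation is paid once per clip and spread across all its views.
        delivery = self.delivered_gb_per_view * self.egress_price_per_gb
        return generation / self.views_per_clip + delivery


traditional = ClipCost(0, 2.0, 1, 0.02, 0.01)
personalized = ClipCost(30, 2.0, 5, 0.02, 0.01)
print(f"cost ratio: {personalized.per_view() / traditional.per_view():.1f}x")
```

Caching and de‑duplication act on `views_per_clip`, while dynamic quality scaling acts on `delivered_gb_per_view`, which is why both levers show up directly in margins.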

IP, safety, and governance in licensed AI media

Licensed data strategies, creator policies, and auditability will determine who wins trust and distribution.

Licensed datasets as competitive moat

Disney’s embrace of one model provider and legal pushback on another illustrate a broader industry pivot: from open scraping to explicit licensing. Enterprises should inventory their own rights, negotiate usage scopes (train, fine‑tune, generate), and secure indemnities and content takedown processes across vendors.
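One way to make that inventory enforceable is to record each vendor agreement’s usage scopes as flags and check requested uses against them. The `License` record, vendor names, and the two gap criteria below are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass
from enum import Flag, auto


class Scope(Flag):
    TRAIN = auto()
    FINE_TUNE = auto()
    GENERATE = auto()


@dataclass(frozen=True)
class License:
    vendor: str
    scopes: Scope           # usage rights granted to this vendor
    indemnified: bool       # vendor indemnity covers generated outputs
    takedown_process: bool  # a contractual takedown path exists


def permitted(inventory: list[License], vendor: str, requested: Scope) -> bool:
    """True only if one license for this vendor grants every requested scope."""
    return any(
        lic.vendor == vendor and (lic.scopes & requested) == requested
        for lic in inventory
    )


def gaps(inventory: list[License]) -> list[str]:
    """Vendors whose agreements lack an indemnity or a takedown process."""
    return sorted({lic.vendor for lic in inventory
                   if not (lic.indemnified and lic.takedown_process)})


inventory = [
    License("vendor_x", Scope.GENERATE | Scope.FINE_TUNE, True, True),
    License("vendor_y", Scope.GENERATE, False, True),
]
print(permitted(inventory, "vendor_x", Scope.TRAIN), gaps(inventory))
```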

Guardrails for creators and employees

Clear red lines—no talent likenesses, no harmful use, no off‑brand crossovers—must be encoded into prompts, model policies, and post‑processing. Internally, rollouts of chat and image tools should include data retention controls, IP classification, and human‑in‑the‑loop review for any public‑facing outputs.

KPIs for brand safety and provenance

Ad buyers and distributors will ask for verifiable provenance, policy conformance scores, and incident reporting. Establish dashboards that track the percentage of AI assets with validated credentials, watermark integrity across transcodes, and moderation SLA adherence.
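The three dashboard metrics named above reduce to simple ratios over per‑asset records. This sketch assumes each record already carries the result of a credential check, a watermark re‑detection after transcoding, and the moderation turnaround in minutes; the field names and the SLA value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AssetRecord:
    credentials_validated: bool  # content credentials verified at ingest
    watermark_intact: bool       # watermark still detected after transcoding
    moderation_minutes: float    # time from upload to moderation decision


def provenance_kpis(records: list[AssetRecord],
                    moderation_sla_minutes: float) -> dict[str, float]:
    if not records:
        raise ValueError("no assets in the reporting window")
    n = len(records)

    def pct(count: int) -> float:
        return 100.0 * count / n

    return {
        "pct_validated_credentials": pct(sum(r.credentials_validated for r in records)),
        "pct_watermark_intact": pct(sum(r.watermark_intact for r in records)),
        "pct_within_moderation_sla": pct(
            sum(r.moderation_minutes <= moderation_sla_minutes for r in records)
        ),
    }


sample = [
    AssetRecord(True, True, 5),
    AssetRecord(True, False, 20),
    AssetRecord(False, False, 10),
    AssetRecord(True, True, 45),
]
print(provenance_kpis(sample, moderation_sla_minutes=30))
```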

What to monitor and how to act

Leaders should prepare for rapid standardization in provenance, fragmentation in model/IP alliances, and a jump in AI‑driven video traffic.

Actions for telecom operators and CDNs

Model AI‑video traffic scenarios for 2025–2026; pilot provenance preservation in your delivery stack; stand up GPU‑backed edge zones for templated inference; and offer brand‑safety APIs to streaming partners. Build commercial packages for studios and platforms that bundle distribution, trust and safety, and analytics.

Actions for streamers and media platforms

Integrate licensed AI creation features with strict policy enforcement, provenance by default, and transparent revenue share or rewards. Treat AI UGC as a new programming tier with editorial curation and ad‑suitability controls aligned to industry frameworks.

Actions for enterprises and rights holders

Map your IP and consent posture, lock in licensed model partnerships with audit rights, and align legal, security, and product on content provenance standards. Establish board‑level oversight for AI investments alongside brand and safety KPIs.

Signals and next moves

Expect more studio‑model alliances, rapid adoption of C2PA‑based credentials across major platforms, and policy updates that require provenance for political and commercial content. The players that combine licensed data, verifiable trust, and efficient delivery will set the pace for AI‑native media at scale.