The $800B AI revenue gap: compute, power, and realistic economics

New analysis from Bain & Company puts a stark number on AI’s economics: by 2030 the industry may face an $800 billion annual revenue shortfall against what it needs to fund compute growth.

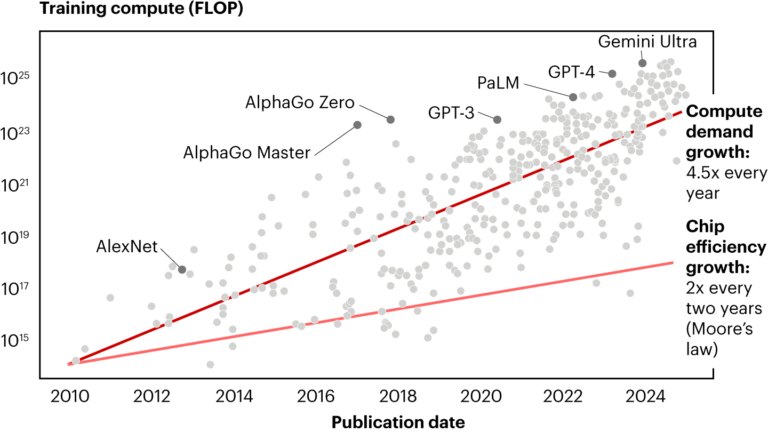

Compute demand outpaces AI monetization

Bain estimates AI providers will need roughly $2 trillion in annual revenue by 2030 to fund data center capex, energy, and supply chain costs, yet current monetization trajectories leave them about $800 billion short. Usage is exploding across foundation models from OpenAI, Google, and DeepSeek, but willingness to pay per query, seat, or API call is not scaling as fast as training and inference bills. The result is an uncomfortable mismatch: hyperscalers and AI labs are building like utilities while their revenues still behave like software subscriptions.
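If you read the two headline figures as a simple subtraction, they imply what current trajectories are expected to deliver. The sketch below assumes the gap is defined as required revenue minus projected revenue. Bain's own methodology may account for other factors, so treat the result as a back-of-envelope reading rather than a figure from the report.

```python
# Bain's 2030 figures as cited above, in billions of US dollars per year.
REQUIRED_REVENUE_B = 2_000  # revenue needed to fund compute growth
REVENUE_GAP_B = 800         # projected shortfall

def implied_revenue_b(required_b: float, gap_b: float) -> float:
    """Revenue implied by the trajectory, assuming gap = required - projected."""
    return required_b - gap_b

def coverage_ratio(required_b: float, gap_b: float) -> float:
    """Fraction of the requirement that the implied revenue covers."""
    return implied_revenue_b(required_b, gap_b) / required_b

print(f"Implied 2030 revenue: ${implied_revenue_b(REQUIRED_REVENUE_B, REVENUE_GAP_B):,.0f}B")
print(f"Share of requirement covered: {coverage_ratio(REQUIRED_REVENUE_B, REVENUE_GAP_B):.0%}")
```

Under that reading, projected revenue of about $1.2 trillion covers only around 60 percent of what the build-out needs.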

Power and supply chains constrain AI capacity

The report projects that incremental global AI compute demand could reach 200 GW by 2030, about half of it in the U.S. That demand collides with long grid interconnection queues, multiyear lead times for transformers, and rising energy prices. On the supply side, advanced packaging and memory, especially CoWoS and HBM, remain gating factors for GPU and accelerator availability. Nvidia and AMD are prioritizing full-rack systems that maximize performance per watt and per square foot, which raises the bar for anyone outside the top clouds to secure capacity at predictable prices.
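Gigawatts measure power draw, not energy consumed. A quick conversion puts the 200 GW figure in annual energy terms. The utilization levels are assumptions, because the figure does not say how much of that capacity runs around the clock. The function name is illustrative.

```python
HOURS_PER_YEAR = 8_760

def annual_energy_twh(load_gw: float, utilization: float) -> float:
    """Annual energy (TWh) for a capacity in GW at an assumed average utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # GW x hours = GWh; divide by 1,000 to convert GWh to TWh.
    return load_gw * utilization * HOURS_PER_YEAR / 1_000

GLOBAL_GW = 200.0  # Bain's 2030 incremental AI compute demand
US_SHARE = 0.5     # "about half in the U.S."

for util in (0.6, 0.8, 1.0):  # assumed utilization levels, not from the report
    total_twh = annual_energy_twh(GLOBAL_GW, util)
    print(f"{util:.0%} utilization: {total_twh:,.0f} TWh/yr globally, "
          f"{total_twh * US_SHARE:,.0f} TWh/yr in the U.S.")
```

Even at the lowest assumed utilization, that is on the order of 1,000 TWh per year of new demand.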

Valuations and build plans confront cost realities

Bain’s framing challenges the assumption that scale alone will fix the economics. If capex keeps rising and power constraints tighten, AI providers will need higher-yield use cases, more efficient models, or different deployment architectures to protect margins. Bloomberg Intelligence expects Microsoft, Amazon, and Meta to push combined AI spend well beyond $500 billion annually by the early 2030s, but even outlays that large may not close the revenue gap without better unit economics.

Why the AI revenue gap matters for telecom and cloud operators

The AI capex-revenue imbalance shifts where and how networks, data centers, and edge assets get built, financed, and monetized.

Power, grid, and siting are the new bottlenecks

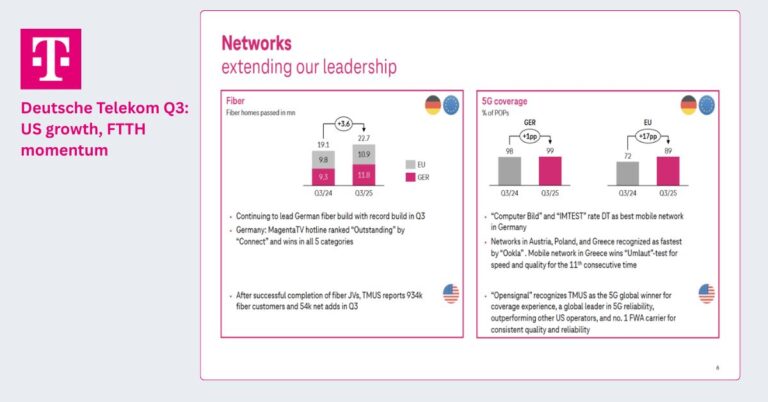

Data center location strategies now start with electrons, not land. Operators will cluster near substations, generation, or firm PPAs, pushing AI campuses into new metros and across borders. Telecom carriers with access to rights-of-way, dark fiber, and substations can become critical partners in site selection, interconnect, and long-haul backbones. Expect more joint ventures among hyperscalers, utilities, and carriers to secure power, water, and permits at scale.

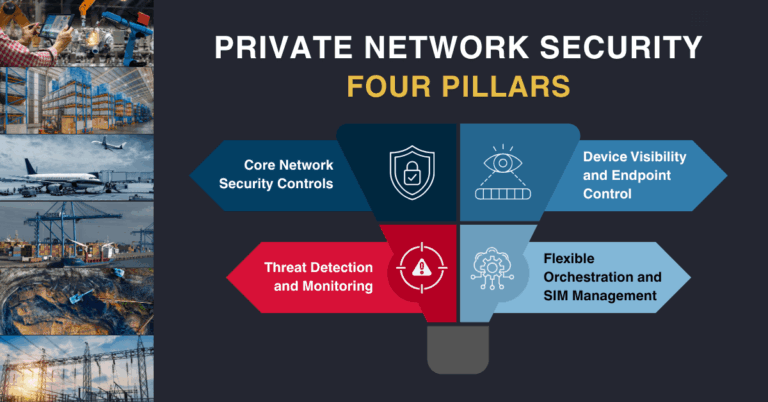

Networks must scale for AI east–west and edge traffic

Training clusters drive massive east–west traffic and demand low-latency, lossless fabrics, while inference workloads add bursty north–south and edge-originated flows. Backbones will need more 400G/800G wavelengths and faster upgrade cycles. Data center fabrics will accelerate adoption of 800G–1.6T optics, better congestion control, and high-radix switching. As inference migrates to metro edge and on-premises sites, operators should prioritize MEC footprints (ETSI MEC), private 5G integration, and deterministic transport for AI-assisted applications.

Cooling, density, and interconnect drive AI TCO

As thermal density rises, liquid cooling (direct-to-chip and immersion) will be essential to maintain rack densities and PUE targets. Interconnect decisions—NVLink versus Ethernet/InfiniBand and the role of CXL for memory pooling—will shape performance per watt and capex per token. Carriers and colo providers that standardize on high-density, liquid-ready pods can capture demand from GB200-class and MI300X-class systems faster than facilities tied to air-only cooling.
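PUE is defined as total facility power divided by IT equipment power. A fixed grid connection therefore caps the IT load a site can host at facility power / PUE. The sketch below compares two hypothetical pods behind the same 10 MW connection. The PUE values (1.5 air-cooled, 1.2 liquid) and rack densities (20 kW and 120 kW) are illustrative assumptions, not vendor specifications.

```python
def it_power_kw(facility_kw: float, pue: float) -> float:
    """IT power available under a fixed facility (grid) power envelope.

    PUE = total facility power / IT equipment power, so IT power = facility / PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return facility_kw / pue

def racks_supported(facility_kw: float, pue: float, kw_per_rack: float) -> int:
    """Whole racks that fit within the IT power budget (ignores floor space)."""
    return int(it_power_kw(facility_kw, pue) // kw_per_rack)

GRID_KW = 10_000  # hypothetical 10 MW site connection

# Hypothetical scenarios: (label, PUE, IT kW per rack)
scenarios = [
    ("air-cooled", 1.5, 20.0),
    ("direct-to-chip liquid", 1.2, 120.0),
]
for label, pue, kw_rack in scenarios:
    print(f"{label}: {it_power_kw(GRID_KW, pue):,.0f} kW of IT load, "
          f"{racks_supported(GRID_KW, pue, kw_rack)} racks at {kw_rack:.0f} kW each")
```

With these assumptions, the liquid-cooled design gets 25 percent more IT power from the same connection (about 8.3 MW versus 6.7 MW) and concentrates it in roughly a fifth as many racks.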

Strategic actions for enterprises and telcos now

The winning playbook balances near-term ROI with long-lead investments in power, interconnect, and model efficiency.

Optimize ROI per watt and per token

Shift procurement metrics from TOPS per dollar to tokens per dollar and per kilowatt-hour. Push engineering teams to adopt quantization (INT8/INT4), sparsity, mixture-of-experts, speculative decoding, and KV-cache optimizations to cut inference costs. Tier SLAs by latency and accuracy to match workloads to the cheapest viable compute and power.
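One way to apply these metrics is to score each deployment option on tokens per dollar and tokens per kilowatt-hour. For each SLA tier, you then pick the cheapest option that meets the latency target. Everything in this sketch is hypothetical: the `InferenceOption` fields, the throughput, power, cost, and latency figures, and the assumption that quantization raises throughput on the same hardware.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class InferenceOption:
    """One hypothetical deployment option for a given model and workload."""
    name: str
    tokens_per_second: float  # sustained output throughput
    power_kw: float           # average power draw at that throughput
    cost_per_hour: float      # all-in $/hour (amortized capex + opex, or a cloud rate)
    p95_latency_ms: float     # p95 latency observed for the target workload

    def tokens_per_dollar(self) -> float:
        return self.tokens_per_second * 3_600 / self.cost_per_hour

    def tokens_per_kwh(self) -> float:
        # Energy used in one hour (kWh) equals the average draw in kW.
        return self.tokens_per_second * 3_600 / self.power_kw

def cheapest_viable(options: list[InferenceOption], max_latency_ms: float) -> InferenceOption | None:
    """Most tokens per dollar among options that meet the tier's latency target."""
    viable = [o for o in options if o.p95_latency_ms <= max_latency_ms]
    return max(viable, key=InferenceOption.tokens_per_dollar, default=None)

# Illustrative numbers only.
options = [
    InferenceOption("FP16, large GPU", 2_000, 10.0, 40.0, 300.0),
    InferenceOption("INT8, large GPU", 3_500, 10.0, 40.0, 200.0),
    InferenceOption("INT4, small GPU", 1_200, 1.5, 6.0, 450.0),
]
for opt in options:
    print(f"{opt.name}: {opt.tokens_per_dollar():,.0f} tokens/$, {opt.tokens_per_kwh():,.0f} tokens/kWh")
for tier_ms in (250.0, 500.0):
    best = cheapest_viable(options, tier_ms)
    print(f"{tier_ms:.0f} ms tier -> {best.name if best else 'no viable option'}")
```

With these made-up numbers, the 250 ms tier lands on the INT8 option. The 500 ms tier can use the slower but far cheaper INT4 option.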

Shift inference to the edge where it pays

When latency, privacy, or egress costs dominate, edge inference can be cheaper and faster than centralized compute. Client NPUs now reach 40–60 TOPS and server-edge accelerators are improving. Prioritize designs that run small and medium models close to the user (branch sites, factories, venues, and 5G MEC), and reserve centralized clusters for training and large-context workloads.
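The edge-versus-central decision can be sketched as a per-request cost comparison with a latency gate. This toy model assumes that edge cost is compute only (the data stays on site) and that central cost is compute plus transport for the payload. It handles privacy constraints separately. The function names and every number are hypothetical.

```python
def central_cost_usd(compute_usd: float, payload_mb: float, transport_usd_per_gb: float) -> float:
    """Per-request cost when the payload is hauled to a central cluster."""
    return compute_usd + payload_mb / 1_000 * transport_usd_per_gb

def breakeven_payload_mb(edge_compute_usd: float, central_compute_usd: float,
                         transport_usd_per_gb: float) -> float:
    """Payload size (MB) above which edge inference is cheaper per request.

    Assumes edge cost is compute only and central cost is compute plus transport;
    solving edge == central for the payload gives this value.
    """
    premium = edge_compute_usd - central_compute_usd
    if premium <= 0:
        return 0.0  # edge compute is no dearer, so edge wins at any payload size
    return premium / transport_usd_per_gb * 1_000

def place(payload_mb: float, latency_budget_ms: float, central_latency_ms: float,
          edge_compute_usd: float, central_compute_usd: float,
          transport_usd_per_gb: float) -> str:
    """Return 'edge' or 'central' for one workload under this simple model."""
    if central_latency_ms > latency_budget_ms:
        return "edge"  # the central round trip alone breaks the latency budget
    central = central_cost_usd(central_compute_usd, payload_mb, transport_usd_per_gb)
    return "edge" if edge_compute_usd < central else "central"

# Hypothetical inputs: edge compute costs twice as much per request as central.
EDGE_USD, CENTRAL_USD, TRANSPORT_USD_PER_GB = 0.0008, 0.0004, 0.09
print(f"break-even payload: {breakeven_payload_mb(EDGE_USD, CENTRAL_USD, TRANSPORT_USD_PER_GB):.1f} MB")
for payload_mb, budget_ms in ((0.01, 500), (10.0, 500), (0.01, 20)):
    where = place(payload_mb, budget_ms, central_latency_ms=60,
                  edge_compute_usd=EDGE_USD, central_compute_usd=CENTRAL_USD,
                  transport_usd_per_gb=TRANSPORT_USD_PER_GB)
    print(f"{payload_mb} MB, {budget_ms} ms budget -> {where}")
```

With these inputs, the model gives three results:

- A small text request stays central.
- A 10 MB image or video payload moves to the edge, because transport outweighs the edge compute premium once the payload passes about 4.4 MB.
- A 20 ms budget forces the edge regardless of cost.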

Diversify AI silicon and secure supply

Don’t overfit to a single vendor or interconnect. Evaluate Nvidia GB200/NVL72 systems alongside AMD Instinct MI300X pods and cloud TPUs, and test Ethernet AI fabrics as an alternative to InfiniBand where operational simplicity and cost warrant. Lock in HBM and advanced packaging capacity through suppliers or via cloud commitments, and consider capacity “options” with colos to hedge siting and power risk.

Engage utilities and regulators early

Power is strategy. Engage utilities on dual-feed designs, on-site generation, heat reuse, and grid services that can offset tariffs. Begin interconnect and transformer orders years ahead of need, and build a structured permitting playbook for jurisdictions implementing data center moratoriums or environmental constraints.

Rework AI business cases and pricing

Update unit economics with realistic power, depreciation, and supply-chain assumptions. Consider metered pricing for inference, data egress, or context length; charge premiums for low-latency tiers and private deployments. For telcos, package AI with network-as-a-service, private 5G, and managed edge to create outcome-based offers (quality inspection, retail analytics, field ops) that scale beyond API resale.

AI market and infrastructure signals through 2030

The balance between technological efficiency and infrastructure realities will determine who captures durable margins in AI.

Model efficiency gains vs. capex growth

Track whether algorithmic efficiency gains outpace growth in hardware capex. Smaller, more efficient models and MoE routing could flatten cost curves. If they do not, expect consolidation around the players with the cheapest power and the densest campuses.

Power, HBM, and packaging capacity expansions

Monitor grid interconnect backlogs, new generation projects, and the ramp of HBM and CoWoS capacity. Sustained shortages will keep GPU prices high and favor customers with long-term take-or-pay agreements.

Standardization and ecosystem shifts to watch

Watch the maturation of AI-over-Ethernet, CXL-based memory pooling, and open accelerator ecosystems, alongside telco initiatives in O-RAN, MEC, and network slicing that can anchor edge AI revenue. Partnerships among hyperscalers, carriers, and utilities will signal where capital is de-risked—and where the next AI regions will emerge.

The takeaway: AI remains strategically inevitable, but its near-term economics are not automatic. The winners will be those who treat power, interconnect, and model efficiency as first-class levers—and who align their monetization with the real cost of tokens and watts.