Microsoft 365 AI integrates Anthropic Claude

Microsoft is preparing to license Anthropic’s Claude models for Microsoft 365, signaling a multi-model strategy that reduces exclusive reliance on OpenAI across Word, Excel, Outlook, and PowerPoint.

Why Microsoft is adding Anthropic Claude now

According to multiple reports, Microsoft plans to integrate Anthropic’s Claude Sonnet 4 alongside OpenAI’s models to power Microsoft 365 Copilot features, including content generation and slide design in PowerPoint. This is a notable pivot from a single-model default to a best-of-breed approach that routes tasks to the model that performs best for a given function. For enterprises, especially in regulated and mission-critical domains like telecom, the shift implies more resilience, better accuracy for specialized tasks, and new options to optimize for quality, cost, and latency.

Microsoft–OpenAI–Anthropic: evolving partnership strategy

Microsoft remains strategically tied to OpenAI for frontier-scale models, but both companies are hedging. OpenAI is investing in infrastructure independence, including a chip program reportedly with Broadcom and a growing product footprint beyond Microsoft’s orbit. Microsoft, for its part, already offers third-party models such as Anthropic’s Claude and xAI’s Grok in GitHub Copilot and has introduced early in-house models (MAI-Voice-1 and MAI-1-preview). Adding Anthropic to Microsoft 365 follows this playbook: diversify supply, preserve leverage in negotiations, and ensure continuity as the AI ecosystem evolves.

Enterprise Copilot impacts: accuracy, safety, and cost

Enterprises should expect Copilot features that dynamically select between OpenAI and Anthropic for specific tasks—e.g., structured document drafting, grounded summaries, or presentation layout—which can translate to fewer hallucinations, more consistent formatting, and better safety guardrails. For organizations standardizing on Microsoft 365, this reduces the need to build separate Anthropic integrations just to access different model strengths.

Why telecom and enterprise IT should act now

Multi-model AI has immediate consequences for network automation, OSS/BSS modernization, and edge strategies where performance, governance, and cost predictability are critical.

Multi-model control plane for mission-critical AI

GenAI is moving from pilots to production in service design, field ops, NOC workflows, and customer care. A model-agnostic approach lets teams route prompts to a model based on measured outcomes (accuracy, latency, toxicity, and cost) and fall back seamlessly if a model degrades or its terms change. For telcos orchestrating AI across provisioning, assurance, and marketing, this reduces vendor concentration risk and aligns with reliability targets akin to five-nines thinking.
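As a rough illustration of outcome-based routing with fallback, the sketch below ranks candidate models by measured accuracy after filtering out any that are unhealthy or exceed latency and cost limits. The model names, the `ModelStats` fields, and the thresholds are hypothetical placeholders, not anything Microsoft or a model vendor exposes.

```python
from dataclasses import dataclass


@dataclass
class ModelStats:
    """Measured outcomes for one candidate model (all values are illustrative)."""
    name: str
    accuracy: float        # fraction of evaluation tasks passed, 0..1
    p95_latency_s: float   # 95th-percentile latency in seconds
    cost_per_task: float   # average cost per task, in any consistent currency
    healthy: bool = True   # set to False when a model degrades or is withdrawn


def route(models, max_latency_s, max_cost):
    """Return eligible model names, best first; later entries act as fallbacks."""
    eligible = [
        m for m in models
        if m.healthy
        and m.p95_latency_s <= max_latency_s
        and m.cost_per_task <= max_cost
    ]
    # Prefer higher accuracy; break ties with lower cost.
    ranked = sorted(eligible, key=lambda m: (-m.accuracy, m.cost_per_task))
    return [m.name for m in ranked]


if __name__ == "__main__":
    candidates = [
        ModelStats("model-a", accuracy=0.92, p95_latency_s=1.8, cost_per_task=0.012),
        ModelStats("model-b", accuracy=0.89, p95_latency_s=0.9, cost_per_task=0.004),
        ModelStats("model-c", accuracy=0.95, p95_latency_s=4.0, cost_per_task=0.030),
    ]
    print(route(candidates, max_latency_s=2.0, max_cost=0.02))
```

A caller would try the first name in the list and move to the next on error or timeout. In this example the most accurate candidate is excluded because it misses the latency and cost limits, which is the kind of trade-off a routing policy makes explicit.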

Optimizing latency, data sovereignty, and cost

Telecoms increasingly need locality controls—cloud, on-prem, and at the 5G edge—for data residency and sub-second inference. A diversified Microsoft stack, accessible through Azure AI and Microsoft 365, can support policies that keep sensitive data within region and shift inference between higher-quality or lower-cost models as SLAs dictate. This is relevant for RAG over sensitive OSS/BSS data, closed-book summarization of trouble tickets, and language support across multi-market operations.

Streamlined procurement and security in Microsoft 365

Buying multi-model access through existing Microsoft agreements can simplify security reviews and vendor onboarding while preserving optionality. Anthropic’s safety posture and structured output control, combined with Microsoft’s enterprise compliance envelope, may shorten the path to production compared to standalone integrations—provided data handling, logging, and retention policies are contractually clear.

Multi-model risks and unanswered questions

While choice is good, multi-model environments introduce new integration, governance, and commercial complexities that leaders must manage deliberately.

Model routing, benchmarking, and evaluation debt

Enterprises will need robust model routing, prompt management, and continuous evaluation. Without task-specific benchmarks and golden datasets—think network design accuracy, alarm triage precision, or ticket summarization fidelity—teams risk chasing perceptions rather than measurable gains. Expect to invest in evaluation pipelines and model cards aligned to NIST AI RMF principles.

Contracts, data rights, and AI traceability

Contracts should explicitly cover training and fine-tuning rights, telemetry retention, and the handling of customer data across models. Ensure lineage is auditable—who processed what, when, and where—and that red-teaming and safety guardrails are enforceable across vendors. ISO/IEC 42001-style AI management systems can help formalize this across business units.

Supplier flux, infrastructure choices, and TCO volatility

OpenAI’s chip ambitions with Broadcom and Microsoft’s own model R&D point to a fluid supplier landscape. GPU scarcity, token pricing, and throughput variability will continue. Enterprises should model TCO scenarios that include egress, storage, vector database costs, and edge inference hardware, not just per-token rates.
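A TCO scenario of this kind can be sketched as a simple monthly cost breakdown. Every field name and figure below is an invented placeholder for illustration; real inputs would come from your own contracts and usage data.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Monthly cost inputs for one deployment scenario (illustrative only)."""
    tokens_millions: float            # tokens processed per month, in millions
    price_per_million_tokens: float
    egress_gb: float
    egress_price_per_gb: float
    storage_gb: float
    storage_price_per_gb: float
    vector_db_monthly: float          # flat monthly fee for the vector database
    edge_hardware_capex: float        # up-front edge inference hardware spend
    edge_amortization_months: int     # period over which capex is spread


def monthly_tco(s):
    """Break monthly cost into components and add a 'total' entry."""
    parts = {
        "tokens": s.tokens_millions * s.price_per_million_tokens,
        "egress": s.egress_gb * s.egress_price_per_gb,
        "storage": s.storage_gb * s.storage_price_per_gb,
        "vector_db": s.vector_db_monthly,
        "edge_hardware": s.edge_hardware_capex / s.edge_amortization_months,
    }
    parts["total"] = sum(parts.values())
    return parts


if __name__ == "__main__":
    base = Scenario(
        tokens_millions=500, price_per_million_tokens=4.0,
        egress_gb=2000, egress_price_per_gb=0.08,
        storage_gb=5000, storage_price_per_gb=0.02,
        vector_db_monthly=1200.0,
        edge_hardware_capex=72000.0, edge_amortization_months=36,
    )
    for item, cost in monthly_tco(base).items():
        print(f"{item:>14}: {cost:10.2f}")
```

With these placeholder inputs, token charges account for well under half of the monthly total, which is why a per-token comparison alone can mislead. Re-running the function with alternative `Scenario` values (for example, a token price change or a shorter amortization period) gives a quick sensitivity check.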

Action plan for CTOs and network leaders

Use Microsoft’s multi-model move as a forcing function to harden your AI architecture, procurement posture, and operating model.

Build a model selection and safety layer

Adopt a vendor-neutral abstraction for prompt routing and safety—via Azure AI model catalogs or your own API gateway—that supports A/B testing, guardrails, and policy-based selection across OpenAI, Anthropic, and internal models.

Benchmark on telecom-native GenAI tasks

Create task suites for RAG over network inventories, topology reasoning, LLM-driven configuration generation, and care-agent assistance. Measure accuracy, latency, containment rate, and cost per resolved task, not just per-token metrics.
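The metrics named above can be computed from per-task evaluation records along the following lines. This is a minimal sketch under stated assumptions: the `TaskResult` fields are hypothetical, "contained" is taken to mean the task finished without a human handoff, and "resolved" is taken to mean the output was judged correct.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskResult:
    """Outcome of one benchmark task (field names are illustrative)."""
    correct: bool      # output matched the golden answer or passed review
    contained: bool    # handled end to end without human handoff
    latency_s: float
    cost: float        # model and retrieval cost attributed to this task


def summarize(results):
    """Aggregate task-level outcomes into suite-level metrics."""
    if not results:
        raise ValueError("no results to summarize")
    n = len(results)
    resolved = sum(r.correct for r in results)
    total_cost = sum(r.cost for r in results)
    return {
        "accuracy": resolved / n,
        "containment_rate": sum(r.contained for r in results) / n,
        "mean_latency_s": mean(r.latency_s for r in results),
        # Spread total spend over successful tasks only, so failures raise the figure.
        "cost_per_resolved_task": total_cost / resolved if resolved else float("inf"),
    }


if __name__ == "__main__":
    suite = [
        TaskResult(correct=True, contained=True, latency_s=1.0, cost=0.01),
        TaskResult(correct=True, contained=False, latency_s=2.0, cost=0.02),
        TaskResult(correct=False, contained=False, latency_s=3.0, cost=0.03),
        TaskResult(correct=True, contained=True, latency_s=2.0, cost=0.02),
    ]
    print(summarize(suite))
```

Dividing total cost by resolved tasks rather than by all tasks is the design choice that separates this from a per-token view: a cheap model that fails often can end up more expensive per resolved task than a pricier one that succeeds.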

Plan hybrid cloud and edge inference

Define which workloads run in Microsoft-hosted clouds versus on-prem or MEC sites, and map models to data classifications. For ultra-low-latency scenarios, pre-position distilled or smaller models at the edge and use larger models for planning or offline optimization.
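One way to make the mapping between models, sites, and data classifications concrete is a small placement table that is filtered by data class and latency budget. The classification levels, site names, model labels, and latency figures below are all hypothetical and would be replaced by your own policy and measurements.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Ordered sensitivity levels; higher means more sensitive (illustrative)."""
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2


# Hypothetical placement table: where each model runs, the most sensitive
# data class it may process there, and its typical latency.
PLACEMENTS = [
    {"model": "large-hosted", "site": "cloud",
     "max_data_class": DataClass.INTERNAL, "typical_latency_ms": 900},
    {"model": "mid-onprem", "site": "on_prem",
     "max_data_class": DataClass.RESTRICTED, "typical_latency_ms": 300},
    {"model": "small-edge", "site": "mec",
     "max_data_class": DataClass.RESTRICTED, "typical_latency_ms": 40},
]


def allowed_models(data_class, latency_budget_ms):
    """Return models cleared for this data class that fit the latency budget."""
    return [
        p["model"] for p in PLACEMENTS
        if data_class <= p["max_data_class"]
        and p["typical_latency_ms"] <= latency_budget_ms
    ]


if __name__ == "__main__":
    # Restricted data with a tight budget leaves only the edge model.
    print(allowed_models(DataClass.RESTRICTED, latency_budget_ms=100))
    # Public data with a relaxed budget can use any placement.
    print(allowed_models(DataClass.PUBLIC, latency_budget_ms=1000))
```

Keeping the table as data rather than code means security and network teams can review and change placements without touching the routing logic.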

Negotiate flexible, multi-model AI contracts

Push for model portability, transparent usage analytics, volume-based discounts across vendors, and clear SLAs. Ensure rights to switch defaults, retain evaluation data, and enforce data residency by region.

Signals to track in the next two quarters

Several developments will indicate how quickly this multi-model era will mature for enterprise and telecom use cases.

OpenAI restructuring and Microsoft–OpenAI terms

The scale, duration, and pricing of Microsoft’s updated OpenAI agreement will hint at long-term cost curves and availability for enterprise workloads on Azure.

Anthropic roadmap and enterprise controls

Track Claude Sonnet 4 updates, fine-tuning options, system prompt controls, and content safety advances that affect regulated deployments and multilingual care scenarios.

Microsoft first-party model progress

Watch MAI-Voice-1 and successors for call summarization, speech intelligence, and NOC assistant use cases, and whether Microsoft blends first-party models with third-party models in M365 and Azure AI Studio.

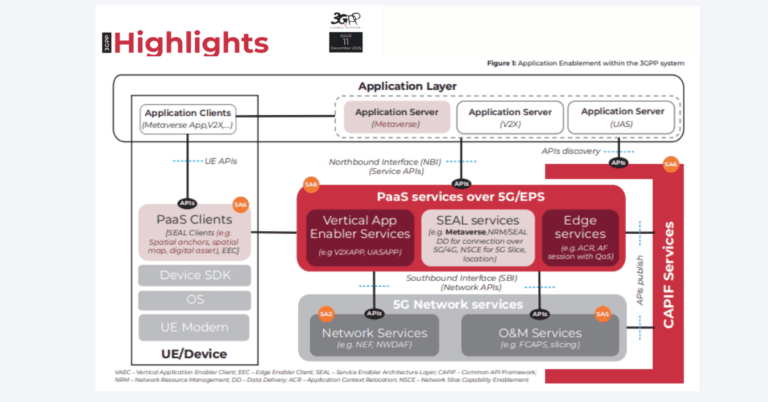

Standards and ecosystem alignment for telco AI

Monitor GSMA Open Gateway APIs for telco-grade exposure of AI-enabled services, ETSI ENI guidance on network intelligence, TM Forum assets for GenAI operations, and the operationalization of NIST AI RMF and ISO/IEC 42001 across large operators.

Bottom line: Microsoft’s Anthropic integration accelerates the enterprise shift to multi-model AI; telecom and IT leaders should lean into evaluation rigor, hybrid deployment, and contract flexibility to capture the upside while containing operational risk.