What’s New in Google Cloud G4 VMs with NVIDIA Blackwell

Google Cloud has made its NVIDIA-powered G4 virtual machines generally available, bringing Blackwell-era GPUs and industrial simulation stacks to more regions and use cases.

Blackwell RTX PRO 6000 for Multimodal and Physical AI

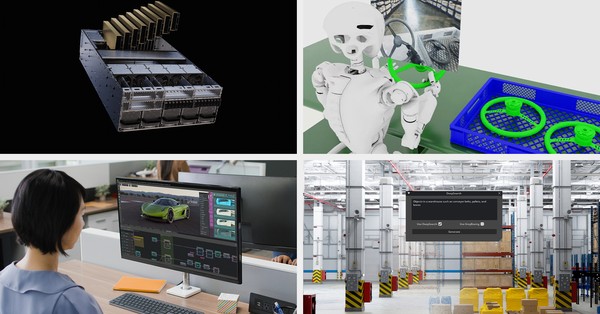

The G4 family is built on NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs and targets high-throughput inference, visual computing, and simulation. Each VM can be configured with 1, 2, 4, or 8 GPUs, delivering up to 768 GB of GDDR7 memory in total. Fifth-generation Tensor Cores introduce FP4 precision to drive efficient multimodal and LLM inference, while fourth-generation RT Cores double real-time ray-tracing performance over the prior generation for photorealistic rendering. Google cites up to 9x throughput over G2 instances, positioning G4 as a universal GPU platform spanning AI inference, content creation, CAD/CAE acceleration, and robotics simulation.

Omniverse and Isaac Sim Optimized for G4 on Marketplace

NVIDIA Omniverse and NVIDIA Isaac Sim are now available as VM images in Google Cloud Marketplace and optimized for G4. Omniverse, based on OpenUSD, provides integration-ready libraries for industrial digitalization, and Isaac Sim offers a reference stack for robot training and validation in physics-accurate virtual environments. Together, they enable scalable digital twin and physical AI workflows for manufacturing, automotive, logistics, and adjacent sectors.

Why G4 Matters for Telecom and Enterprise IT

The G4 launch aligns with a surge in multimodal and real-time applications that demand low latency, efficient scaling, and enterprise-grade orchestration.

Low-Latency Multimodal Inference and Real-Time Apps

Telecoms, media companies, and service providers can use G4 VMs to serve copilots, vision-language models, and streaming-media inference with lower latency and higher concurrency. FP4 support and large per-GPU memory (96 GB) reduce cost per token for LLMs and enable larger context windows. For edge-adjacent and regulated workloads, broader regional availability helps keep data in-region while meeting SLA targets for interactive services.
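To make the memory argument concrete, here is a rough back-of-the-envelope sketch of how precision changes the weight footprint on a single 96 GB GPU. Only the 96 GB figure comes from the article. The 70B model size, the FP16 and FP8 comparison points, and the weights-only simplification (no KV cache, activations, or runtime overhead) are assumptions for illustration.

```python
# Rough weight-memory estimate per precision on one GPU.
# GPU_MEMORY_GB is from the article; everything else is an illustrative assumption.
GPU_MEMORY_GB = 96

BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_footprint_gb(params_billions: float, bits: int) -> float:
    """Memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits / 8


def headroom_gb(params_billions: float, precision: str) -> float:
    """Memory left on one GPU after loading weights (negative means it does not fit)."""
    return GPU_MEMORY_GB - weight_footprint_gb(params_billions, BITS_PER_PARAM[precision])


if __name__ == "__main__":
    # Hypothetical 70B-parameter model.
    for precision in BITS_PER_PARAM:
        print(precision, headroom_gb(70, precision))
```

Under these assumptions, a 70B model does not fit on one GPU at FP16, while at FP4 the weights leave most of the memory free for KV cache, which is where longer context windows and higher concurrency come from.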

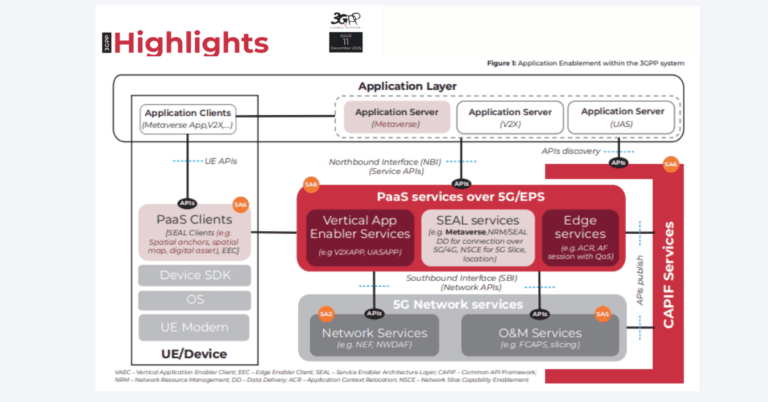

Connecting Industrial and Network Digital Twins

Digital twin initiatives move from concept to operations when physics-based simulation and AI inference run side by side. With Omniverse on G4, enterprises can connect factory, logistics, and robotics twins to network digital twins for coverage planning, energy optimization, and incident response. This is directly relevant to 5G densification, private networks, and autonomous operations, where design, test, and runtime analytics must converge.

Architecture and Performance Highlights of G4

Key platform attributes focus on memory bandwidth, precision efficiency, GPU partitioning, and inter-GPU communication.

FP4, MIG Partitioning, and Memory Bandwidth

G4 supports FP4 and advanced quantization to boost inference throughput without compromising accuracy for many model types. Multi-Instance GPU (MIG) lets teams split a single GPU into up to four isolated instances, each with dedicated GDDR7 memory, compute, and media encoders. This enables multi-tenant pooling and predictable QoS for mixed inference traffic, which is essential for NaaS, CPaaS, and internal platform teams serving diverse models.
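As a rough illustration of what MIG partitioning means for capacity, the sketch below computes memory per slice and slices per VM. The 96 GB per GPU, the four-instance maximum, and the 1, 2, 4, or 8 GPU shapes come from the article. The equal-split model is an assumption: actual MIG profiles are fixed by NVIDIA and may reserve some memory, so treat the numbers as upper bounds.

```python
# Capacity sketch for MIG partitioning, assuming memory is split equally.
GPU_MEMORY_GB = 96            # per-GPU memory cited in the article
GPU_COUNTS = (1, 2, 4, 8)     # VM shapes cited in the article
ALLOWED_SPLITS = (1, 2, 4)    # assumption: equal splits, up to four instances


def slice_memory_gb(instances_per_gpu: int) -> float:
    """Upper bound on memory per MIG instance under an equal split."""
    if instances_per_gpu not in ALLOWED_SPLITS:
        raise ValueError(f"unsupported split: {instances_per_gpu}")
    return GPU_MEMORY_GB / instances_per_gpu


def slices_per_vm(gpus: int, instances_per_gpu: int) -> int:
    """Total isolated instances available on one VM."""
    if gpus not in GPU_COUNTS:
        raise ValueError(f"unsupported GPU count: {gpus}")
    slice_memory_gb(instances_per_gpu)  # reuse the split validation
    return gpus * instances_per_gpu


if __name__ == "__main__":
    print(slice_memory_gb(4))   # memory per slice with four instances per GPU
    print(slices_per_vm(8, 4))  # isolated slices on an 8-GPU VM
```

Under the equal-split assumption, a fully partitioned 8-GPU VM would expose 32 isolated slices of roughly 24 GB each, which suits small and mid-sized models served to separate tenants.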

Multi-GPU Scaling and Lower Latency via P2P PCIe

Google engineered an enhanced PCIe peer-to-peer data path for the RTX PRO 6000 on G4, accelerating collective operations like All-Reduce. For tensor-parallel model serving, Google reports up to 168% higher throughput and 41% lower inter-token latency versus standard non-P2P setups. The result is faster response times and higher user density per VM for large model serving, especially for models in the 30B–100B parameter range.
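Percentage claims like these are easy to misread, so a small worked conversion may help. The 168% and 41% figures are the ones Google reports above. The baseline values of 1,000 tokens per second and 50 ms per token are hypothetical placeholders, not measurements.

```python
# Convert "X% higher" and "Y% lower" claims into multipliers.
def multiplier_from_percent_gain(percent: float) -> float:
    """'168% higher' means the new value is 1 + 1.68 times the baseline."""
    return 1 + percent / 100


def multiplier_from_percent_drop(percent: float) -> float:
    """'41% lower' means the new value is 1 - 0.41 times the baseline."""
    return 1 - percent / 100


if __name__ == "__main__":
    baseline_tokens_per_s = 1000.0  # hypothetical baseline throughput
    baseline_latency_ms = 50.0      # hypothetical baseline inter-token latency

    throughput = baseline_tokens_per_s * multiplier_from_percent_gain(168)
    latency = baseline_latency_ms * multiplier_from_percent_drop(41)
    print(round(throughput, 1), "tokens/s")
    print(round(latency, 1), "ms per token")
```

In other words, "up to 168% higher throughput" is up to about 2.68 times the baseline, not 1.68 times, and "41% lower" latency leaves about 59% of the original inter-token delay.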

Workloads and Early Adopters on G4

Customer results and vertical patterns point to a broad set of AI, visualization, and simulation use cases.

Creative, Simulation, and Robotics Use Cases

WPP uses G4 with Omniverse to render photoreal 3D advertising at global scale, compressing production cycles from weeks to hours. Altair integrates G4 into Altair One for high-fidelity CFD and physics simulations. For telecom and utilities, the same capabilities can power site design, city-scale propagation studies, and autonomous inspection workflows using sensor fusion and synthetic data generated in Isaac Sim.

HPC, Data Analytics, and RTX Virtual Workstations

CUDA-X libraries accelerate scientific computing tasks, with Blackwell-based genomics pipelines reporting severalfold throughput gains over prior generations. Dataproc support brings GPU acceleration to Spark and Hadoop, enabling faster feature engineering and model pipelines. NVIDIA RTX Virtual Workstation on G4 delivers remote design, VFX, and visualization with DLSS 4 and the latest NVENC/NVDEC for low-latency streaming.

Cloud-Native Integration and Operations on Google Cloud

G4 is wired into Google Cloud’s AI Hypercomputer architecture and core services to simplify deployment and scale.

GKE Autopilot and Vertex AI Integration

G4 GPUs are GA in Google Kubernetes Engine, including Autopilot, to automate scaling and cost controls. Add the GKE Inference Gateway for lower serving latency and higher throughput. Vertex AI benefits from large GPU memory and global reach for both training and inference, simplifying MLOps and model governance across regions.

Data and Storage Pipelines: Hyperdisk ML, Lustre, Cloud Run

Hyperdisk ML offers low-latency block storage at high IOPS, while Managed Lustre provides a zone-local parallel file system with up to 1 TB/s of throughput for HPC and large-scale AI. Cloud Storage supports global dataset access with edge caching for inference. Cloud Run now exposes managed access to RTX PRO 6000 GPUs for real-time AI inference and media rendering in pay-per-use mode.

Buyer Guidance: How to Deploy G4 Now

Align your workload mix, deployment model, and data residency needs with the G4 capabilities to capture fast ROI.

Sizing, MIG Profiles, and Capacity Planning

Map model sizes, context windows, and concurrency targets to per-GPU memory and MIG profiles. Use FP4 and quantization where accuracy allows. For models larger than 30B parameters or multimodal pipelines, test multi-GPU configurations to exploit the enhanced P2P path. For bursty or interactive apps, evaluate Cloud Run with GPUs to smooth peak demand.
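One way to start the sizing exercise is a first-pass estimate of the smallest G4 shape that could hold a model. The sketch below uses the 96 GB per GPU and the 1, 2, 4, or 8 GPU shapes from the article. The 30% overhead for KV cache and activations is a placeholder assumption, and real requirements depend heavily on context length, batch size, and the serving stack, so validate with load tests.

```python
# First-pass GPU-count estimate for a model on G4.
from typing import Optional

GPU_MEMORY_GB = 96          # per-GPU memory cited in the article
GPU_COUNTS = (1, 2, 4, 8)   # VM shapes cited in the article


def min_gpu_count(
    params_billions: float,
    bits_per_param: int,
    overhead_fraction: float = 0.3,  # assumed allowance for KV cache, activations
) -> Optional[int]:
    """Smallest supported GPU count whose total memory covers the estimate.

    Returns None when even the largest shape is too small.
    """
    weights_gb = params_billions * bits_per_param / 8
    needed_gb = weights_gb * (1 + overhead_fraction)
    for gpus in GPU_COUNTS:
        if needed_gb <= gpus * GPU_MEMORY_GB:
            return gpus
    return None


if __name__ == "__main__":
    # Hypothetical model sizes and precisions.
    for params, bits in [(30, 16), (70, 16), (70, 4), (100, 16)]:
        print(f"{params}B at {bits}-bit ->", min_gpu_count(params, bits), "GPU(s)")
```

With these placeholder numbers, a 70B model needs two GPUs at 16-bit precision but fits on one at FP4, which shows why quantization and the multi-GPU P2P path are worth evaluating together.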

Governance, Cost Controls, and Portability

Standardize on Kubernetes with GKE for portability and policy control, and use Vertex AI for model lifecycle management. Leverage Marketplace images for Omniverse and Isaac Sim to accelerate setup and draw down existing commitments. For multi-tenant platforms, isolate workloads with MIG and enforce SLOs per slice to balance cost and performance.

What’s Next for G4 and Blackwell

Roadmaps and ecosystem signals around Blackwell, agentic AI, and industrial digital twins will shape platform choices in 2025.

Fractional GPUs and Broader Blackwell Rollout

Fractional GPU options are coming to G4, which will expand price-performance choices for lighter inference. Expect continued alignment with A-series training instances to create an end-to-end Blackwell stack spanning massive training to high-density inference and visualization.

Agentic AI, Standards, and Ecosystem Signals

Keep an eye on NVIDIA Nemotron reasoning models, NIM microservices, and Omniverse Blueprints with Cosmos for turnkey agents and digital twins. Watch OpenUSD adoption across ISVs and SIs, plus telco-specific digital twin templates. Evaluate how these pieces integrate with 5G edge strategies, data residency rules, and AI assurance frameworks as deployments scale.