FTC 6(b) probe of AI companion chatbots and youth safety

The U.S. Federal Trade Commission has initiated a broad 6(b) study into consumer-facing AI companion chatbots, focusing on risks to children and teens and the governance controls companies have in place.

Companies named in the FTC inquiry

The agency issued orders to seven firms operating at the center of generative AI and social platforms: Alphabet, Character Technologies (Character.AI), Instagram, Meta Platforms, OpenAI, Snap, and xAI. These companies run or embed chatbots that simulate human-like conversation and relationships, often within social apps used by minors. The Commission voted unanimously to issue the orders, signaling that youth safety in AI is a priority for the agency.

Scope of FTC requests and governance details

Under its Section 6(b) authority, the FTC is seeking detailed information on how these providers design, test, deploy, and monetize AI companions, and how they limit harms to children and adolescents. The scope spans monetization strategies, input/output processing, character development workflows, safety measurement before and after launch, mitigation techniques, disclosures to users and parents, enforcement of age gates and community rules, and how personal data from conversations is collected, used, shared, and retained. The inquiry also touches on compliance with the Children’s Online Privacy Protection Act (COPPA) Rule and related safe harbor programs.

Why now: youth safety risks and monetization pressures in companion AI

Companion bots are moving into mainstream consumer apps amid a documented rise in ethical, privacy, and mental health concerns. Reports and lawsuits have alleged harmful interactions with minors, including cases where long-running conversations bypassed guardrails. Platform policies have been revised under scrutiny, and providers have pledged stronger handling of sensitive topics. The FTC is responding to a fast-commercializing market where relationship-like interactions can heighten trust, drive engagement, and create incentives that may conflict with safety promises.

Implications for tech, telecom, and platform operators

The inquiry signals a regulatory pivot from abstract AI risk to product-specific accountability across data, design, and deployment pipelines.

Regulatory signal: youth-facing AI as a top priority

6(b) studies are not enforcement actions, but their findings often inform later cases and rulemaking. For AI companions, the Commission is mapping the full lifecycle, from character approval to post-deployment monitoring, which creates a template for how regulators expect governance to work in practice. Providers of messaging, app distribution, device platforms, and connectivity should anticipate that these governance demands will extend to them, for example through partner requirements and distribution policies.

COPPA obligations and data handling risks

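COPPA applies to operators of online services directed to children under 13, and to general-audience services with actual knowledge that they collect personal information from children under 13. Companion chatbots in either position must obtain verifiable parental consent, limit collection to what an activity reasonably requires, and observe strict limits on sharing and retention. Teens are not covered by COPPA, but the FTC is probing disclosures, targeting, and safety assurances, which can amount to deceptive practices under Section 5 of the FTC Act if they do not match reality. The data practices of companion features embedded in social and messaging services will face heightened scrutiny.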
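A minimal sketch of how a service might gate conversation-data collection on age and consent status. The `UserContext` fields, the `Decision` labels, and the stricter teen default are hypothetical product choices for illustration; they are not taken from the COPPA Rule text or from any named company’s implementation.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"                  # collection may proceed under normal policy
    ALLOW_MINIMAL = "allow_minimal"  # collect only what the feature strictly needs
    BLOCK = "block"                  # do not collect or store personal information


@dataclass(frozen=True)
class UserContext:
    # Hypothetical fields: coarse age flags rather than a birthdate, plus
    # whether verifiable parental consent is on record and not revoked.
    under_13: bool
    under_18: bool
    parental_consent_verified: bool


def conversation_data_decision(user: UserContext) -> Decision:
    """Decide whether personal information from chats may be collected."""
    if user.under_13:
        # COPPA requires verifiable parental consent before collecting personal
        # information from children under 13 (narrow exceptions not modeled here).
        return Decision.ALLOW_MINIMAL if user.parental_consent_verified else Decision.BLOCK
    if user.under_18:
        # Teens fall outside COPPA; this stricter default is a product choice.
        return Decision.ALLOW_MINIMAL
    return Decision.ALLOW
```

For example, `conversation_data_decision(UserContext(True, True, False)).value` evaluates to `"block"`: a child without recorded parental consent gets no data collection at all.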

Operational impact: building a model safety lifecycle

The orders effectively ask companies to demonstrate safety-by-design. That includes pre-release red teaming, continuous evaluation for drift in long dialogues, robust incident response, and retraining or policy updates when harms appear. Firms will need auditable artifacts: safety test plans, metrics, logs, policy change histories, and evidence of enforcement against terms-of-service violations.
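One way to make safety logs auditable is to chain entries by hash, so that silent edits to history are detectable. The sketch below assumes a hypothetical in-memory `SafetyAuditLog` class with illustrative event fields; it is not a format the FTC has prescribed.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class SafetyAuditLog:
    """Append-only log where each entry commits to the previous entry's hash,
    so later edits or deletions are detectable on verification."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        # Canonical JSON (sorted keys) so the same event always hashes identically.
        payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = self._digest(prev_hash, event)
        self.entries.append({"prev": prev_hash, "event": event, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            # Each entry must point at its predecessor and match its own hash.
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["event"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

For example, appending a red-team result (say, `{"type": "red_team_run", "pass_rate": 0.97}` with illustrative values) and later editing its `pass_rate` in place makes `verify()` return `False`. Because anyone who can rewrite every entry can also recompute every hash, a production system would also anchor the latest hash somewhere the logging team cannot modify.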

Key challenges for AI companion safety

Companies will need to reconcile product virality and personalization with child protection, privacy compliance, and trustworthy AI operations.

Privacy-preserving age assurance

Stronger age gates are coming, but crude checks risk over-collecting sensitive identity data. Expect demand for privacy-preserving age assurance, federated checks via device or carrier signals, and revocable parental consent flows tied to least-privilege data design.
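A minimal sketch of the least-privilege idea: compute a coarse age band once and persist only the band, never the birthdate. It assumes the birthdate arrives from some upstream check (self-declaration, a device or carrier signal, or an attestation; which one is out of scope here), and the band labels are hypothetical.

```python
from datetime import date


def age_band(birth_date: date, today: date) -> str:
    """Return a coarse age band; callers store the band, not the birthdate."""
    # Subtract one year if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    age = today.year - birth_date.year - (0 if had_birthday else 1)
    if age < 13:
        return "under_13"
    if age < 18:
        return "13_17"
    return "18_plus"
```

Storing `"13_17"` instead of a full date of birth still supports age-appropriate defaults while shrinking what a breach or an over-broad data request could expose.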

Managing safety drift in long conversations

Providers acknowledge that guardrails can degrade in extended, emotionally charged chats. This raises the bar for conversation-aware safety classifiers, runtime policy engines, and mechanisms that keep risk controls in force across multi-turn sessions rather than judging each message in isolation.
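The difference between per-message and conversation-aware checks can be sketched with a decaying risk accumulator. Per-turn scores are assumed to come from a hypothetical classifier returning values in [0, 1]; the decay and threshold values are illustrative, not tuned.

```python
from dataclasses import dataclass


@dataclass
class ConversationRiskTracker:
    """Accumulates per-turn risk so that many mildly risky turns can trigger
    intervention even when no single turn would on its own."""
    decay: float = 0.8              # share of earlier risk carried into the next turn
    turn_threshold: float = 0.9     # a single turn at or above this intervenes
    session_threshold: float = 1.5  # accumulated risk at or above this intervenes
    accumulated: float = 0.0

    def observe(self, turn_score: float) -> str:
        if not 0.0 <= turn_score <= 1.0:
            raise ValueError("turn_score must be in [0, 1]")
        # Exponentially decayed running sum of per-turn risk.
        self.accumulated = self.decay * self.accumulated + turn_score
        if turn_score >= self.turn_threshold or self.accumulated >= self.session_threshold:
            return "intervene"
        return "continue"
```

With the defaults, seven consecutive turns scored 0.4 (well below the 0.9 single-turn threshold) push the accumulated score past 1.5 on the seventh turn, so the tracker intervenes even though a per-message filter would never have fired. The decay factor bounds how long earlier risk lingers; the tracker must be held per session.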

Risks of character bots and parasocial design

Character-driven companions optimize for intimacy and retention, which can blur boundaries for minors and vulnerable adults. Design patterns that mimic romance, therapy, or authority figures will attract regulatory attention and may require stricter gating, labeling, and role constraints.
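Role constraints and labeling can be expressed as checks that run before a persona is offered to a user. The category names, the restricted set, and the age-band labels below are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical persona categories flagged for extra scrutiny with minors.
RESTRICTED_FOR_MINORS = frozenset({"romantic", "therapist", "medical_authority"})
MINOR_BANDS = frozenset({"under_13", "13_17"})


@dataclass(frozen=True)
class Persona:
    name: str
    categories: frozenset


def persona_available(persona: Persona, user_age_band: str) -> bool:
    """Return False when a minor would be matched with a restricted persona."""
    if user_age_band not in MINOR_BANDS:
        return True
    # Any overlap with the restricted set blocks the persona for minors.
    return not (persona.categories & RESTRICTED_FOR_MINORS)


def disclosure_label(persona: Persona) -> str:
    # Always state that the character is an AI, regardless of persona design.
    return f"{persona.name} is an AI character, not a real person."
```

A romance-styled persona stays available to adults but is filtered out for the `"13_17"` band, while a tutoring persona remains available to all users.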

Aligning monetization with safety incentives

Engagement-based business models can conflict with safety goals if revenue depends on longer, deeper conversations. Companies should separate safety objectives from growth metrics and document how product KPIs avoid incentivizing risky behaviors.
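A common way to keep growth metrics from overriding safety is a guardrail metric in experiments: a variant is rejected if its safety metric regresses, regardless of engagement lift. The metric names and the zero-tolerance default are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantMetrics:
    # Hypothetical per-variant aggregates from an A/B test.
    avg_session_minutes: float
    safety_incidents_per_1k_sessions: float


def ship_decision(control: VariantMetrics, treatment: VariantMetrics,
                  max_safety_regression: float = 0.0) -> str:
    """Check the safety guardrail first; engagement only matters if it passes."""
    safety_delta = (treatment.safety_incidents_per_1k_sessions
                    - control.safety_incidents_per_1k_sessions)
    if safety_delta > max_safety_regression:
        return "reject: safety regression"
    if treatment.avg_session_minutes > control.avg_session_minutes:
        return "eligible: engagement gain"
    return "hold: no engagement gain"
```

Checking safety before engagement makes the ordering explicit and easy to document: no engagement result can rescue a variant that raises the incident rate beyond the tolerance.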

Action plan for companies

Organizations that build, embed, or distribute AI companions should operationalize youth-safety governance now and prepare for data requests.

Establish an AI safety management system

Adopt a formal program aligned to NIST’s AI Risk Management Framework and ISO/IEC 42001 for AI management systems. Define risk taxonomies for youth contexts, set acceptance thresholds, and maintain evidence of safety reviews, sign-offs, and post-release evaluations.
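Acceptance thresholds and sign-offs can be encoded as a release gate, which also leaves behind the kind of evidence described above. The taxonomy, threshold values, and field names are placeholders: they are not recommended limits and are not drawn from the NIST AI RMF or ISO/IEC 42001.

```python
from dataclasses import dataclass

# Hypothetical youth-context risk taxonomy with maximum acceptable failure
# rates on pre-release evaluation suites. Values are placeholders, not guidance.
ACCEPTANCE_THRESHOLDS = {
    "self_harm": 0.01,
    "sexual_content": 0.0,
    "dangerous_advice": 0.02,
    "privacy_leak": 0.01,
}


@dataclass
class ReleaseReview:
    model_version: str
    failure_rates: dict[str, float]  # category -> observed failure rate on its eval suite
    signed_off_by: list[str]

    def blocking_categories(self) -> list[str]:
        blocked = []
        for category, limit in ACCEPTANCE_THRESHOLDS.items():
            rate = self.failure_rates.get(category)
            # A category with no measurement fails: no evidence, no release.
            if rate is None or rate > limit:
                blocked.append(category)
        return blocked

    def approved(self) -> bool:
        return not self.blocking_categories() and len(self.signed_off_by) > 0
```

Treating a missing measurement as a failure forces every category in the taxonomy to be evaluated before launch, and the stored `ReleaseReview` records double as sign-off evidence.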

Instrument models and content safety pipelines

Combine pre-trained LLMs with layered guardrails: policy-tuned prompts, retrieval filters, topic and sentiment classifiers, and refusal/recovery flows for self-harm, sexuality, and medical or legal advice. Log safety events, run shadow evaluations, and continuously test for jailbreaks and long-dialogue drift.
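The layered structure can be sketched as input and output screens around a model call, where any layer can substitute a refusal or recovery message. The regular expressions stand in for trained topic classifiers and `generate` is a placeholder for a provider’s model call; both are assumptions, and keyword matching alone would be far too weak in practice.

```python
import re
from typing import Callable, Optional

# A layer returns a replacement (refusal/recovery) message, or None to pass.
Layer = Callable[[str], Optional[str]]

# Hypothetical keyword patterns standing in for trained classifiers.
SELF_HARM = re.compile(r"\b(hurt myself|kill myself|self[- ]harm)\b", re.IGNORECASE)
MEDICAL = re.compile(r"\b(dosage|prescription)\b", re.IGNORECASE)


def self_harm_layer(text: str) -> Optional[str]:
    if SELF_HARM.search(text):
        return ("It sounds like you're going through something hard. "
                "You can call or text the 988 Suicide & Crisis Lifeline (US).")
    return None


def medical_layer(text: str) -> Optional[str]:
    if MEDICAL.search(text):
        return "I can't give medical advice. A doctor or pharmacist can help with that."
    return None


def guarded_reply(user_text: str, generate: Callable[[str], str],
                  input_layers: list[Layer], output_layers: list[Layer]) -> str:
    # Screen the user's message before it reaches the model.
    for layer in input_layers:
        override = layer(user_text)
        if override is not None:
            return override
    draft = generate(user_text)
    # Screen the model's draft before it reaches the user.
    for layer in output_layers:
        override = layer(draft)
        if override is not None:
            return override
    return draft
```

Because output layers see the model’s draft, a harmless prompt that still elicits, say, dosing advice is caught on the way out; each returned override is also a natural point to log a safety event.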

Data minimization and sensitive PII controls

Map data flows for prompts and outputs, apply data minimization, and enforce retention and access controls. Restrict third-party sharing, segregate training data from production chat logs, and document consent and parental control mechanisms for under-13 users.
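Two of these controls, redacting obvious identifiers before chat text is stored and enforcing a retention window, can be sketched as follows. The patterns are illustrative and miss many forms of personal information; the retention period would come from the company’s documented policy.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta

# Illustrative patterns only; production PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


@dataclass
class StoredMessage:
    text: str
    created_at: datetime


def purge_expired(messages: list[StoredMessage], retention: timedelta,
                  now: datetime) -> list[StoredMessage]:
    """Keep only messages younger than the retention window."""
    return [m for m in messages if now - m.created_at < retention]
```

For example, `redact("mail me at kid@example.com or 555-123-4567")` yields `"mail me at [EMAIL] or [PHONE]"`. Running redaction before storage means logs that later feed evaluation or training never hold the raw identifiers.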

Youth policies, disclosures, and parental tools

Ensure age-appropriate experiences by default, with clear labeling of AI capabilities, limitations, and data use. Provide parental dashboards, escalation paths to live help, and crisis workflows routed to appropriate resources for self-harm or abuse signals.
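Crisis workflows are largely routing decisions. The sketch maps hypothetical signal types to a resource, a human-review flag, and an optional parent notification. The table contents and the rule of never notifying parents on abuse signals are illustrative policy assumptions, not requirements from the FTC orders.

```python
from dataclasses import dataclass
from enum import Enum


class Signal(Enum):
    SELF_HARM = "self_harm"
    ABUSE = "abuse"
    HARASSMENT = "harassment"


@dataclass(frozen=True)
class Escalation:
    show_resource: str
    page_human_reviewer: bool
    notify_parent: bool


# Hypothetical routing table: signal -> (resource shown to user, page a human?).
ROUTES = {
    Signal.SELF_HARM: ("988 Suicide & Crisis Lifeline (call or text 988, US)", True),
    Signal.ABUSE: ("Childhelp National Child Abuse Hotline (US)", True),
    Signal.HARASSMENT: ("In-app reporting and blocking tools", False),
}


def escalate(signal: Signal, is_minor: bool, parent_alerts_enabled: bool) -> Escalation:
    resource, page_human = ROUTES[signal]
    # Parents are notified only for minors whose guardians opted in, and never
    # for abuse signals, where a family member could be the source of harm.
    notify = is_minor and parent_alerts_enabled and signal is not Signal.ABUSE
    return Escalation(resource, page_human, notify)
```

Keeping the routing in a table rather than scattered conditionals makes the crisis policy reviewable and versionable alongside other safety documentation.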

Vendor and ecosystem readiness for carriers and OEMs

Telecom operators, device manufacturers, and app store owners should update distribution policies to reflect youth risk categories, require attestations and audit artifacts from chatbot vendors, and consider on-device or network-level safety instrumentation with privacy safeguards.
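An attestation requirement can be checked mechanically at submission time. The artifact names and the one-year freshness window are hypothetical choices a distributor might make.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical artifacts a distributor might require before listing a companion bot.
REQUIRED_ARTIFACTS = (
    "age_assurance_description",
    "red_team_report",
    "coppa_attestation",
    "incident_response_plan",
)
MAX_ARTIFACT_AGE = timedelta(days=365)


@dataclass(frozen=True)
class VendorSubmission:
    vendor: str
    artifacts: dict[str, date]  # artifact name -> date the artifact was issued


def missing_or_stale(sub: VendorSubmission, today: date) -> list[str]:
    """List required artifacts that are absent or older than the freshness window."""
    problems = []
    for name in REQUIRED_ARTIFACTS:
        issued = sub.artifacts.get(name)
        if issued is None:
            problems.append(f"missing: {name}")
        elif today - issued > MAX_ARTIFACT_AGE:
            problems.append(f"stale: {name}")
    return problems
```

An empty result means the submission is complete and current; anything else can be returned to the vendor as a concrete list of gaps.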

What to watch in the next 6 to 12 months

The inquiry will shape product roadmaps, ecosystem policies, and potential enforcement across the AI companion market.

Expected outcomes of the FTC 6(b) study

Expect a public report that benchmarks practices across firms, identifies gaps, and signals where enforcement and rulemaking may follow, particularly around deceptive claims, inadequate testing, or weak age restrictions.

Federal and state legislative developments

Momentum around youth online safety, COPPA modernization, and state-level design codes will influence requirements for age assurance, default settings, and data use restrictions in AI companions.

Platform and app store policy shifts to expect

App distribution rules may tighten for companion bots, including mandatory disclosures, independent safety assessments, and stronger age gating for character-based or therapeutic features.

Standards adoption and independent audits

Look for wider adoption of NIST AI RMF profiles for youth contexts, ISO/IEC AI management certifications, and third-party red-team audits as prerequisites for partnerships and app approvals.

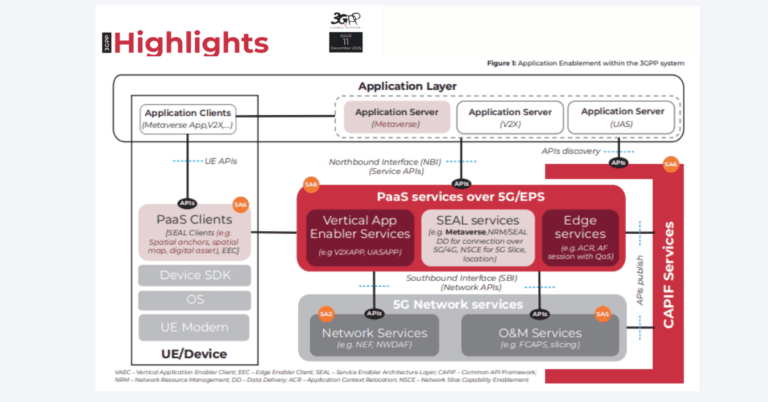

On-device inference and network protections

To reduce data exposure and latency, providers may move sensitive safety checks on-device while leveraging network-level anomaly detection; telecom operators can differentiate with privacy-preserving parental controls and safer messaging integrations.

Bottom line: the FTC is setting expectations for measurable, auditable safety in AI companions, and companies across the digital and telecom stack should align governance, product design, and data practices now to reduce regulatory and reputational risk.