Why this matters: secure AI data fabric for agentic AI at scale

Cisco’s Secure AI Factory with NVIDIA, now integrated with VAST Data’s InsightEngine, targets the core blocker to agentic AI at scale: getting proprietary data to models quickly, securely, and at enterprise breadth.

Agentic AI requires low-latency, governed enterprise data

Enterprises are moving beyond chatbots to autonomous agents that reason across multi-step tasks, call tools, and collaborate with humans and other agents. These systems fail without low-latency access to current, trusted data. The joint solution aims to cut RAG pipeline delays from minutes to seconds, reduce integration risk through validated reference designs, and keep every interaction within security and compliance controls.

Data fabric performance, retrieval, and governance are the AI bottleneck

Model performance is no longer only about GPU counts; the limiting factor is often data movement, indexing, retrieval, and governance across files, objects, tables, and vectors. By aligning Cisco’s AI PODs, NVIDIA’s AI Data Platform and DPUs, and VAST’s data intelligence layer, the offering is positioned as a turnkey data fabric for production-grade AI agent workloads.

What Cisco, NVIDIA, and VAST deliver: turnkey secure AI data platform

The trio is packaging compute, networking, storage, data intelligence, and security into pre-integrated configurations that shorten time-to-value for RAG and agentic AI.

Pre-validated AI PODs for secure, real-time RAG pipelines

Cisco AI PODs now ship with VAST InsightEngine using NVIDIA’s AI Data Platform reference design, turning raw enterprise data into AI-ready indices and vectors in near real time. Cisco UCS servers with NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs anchor the compute layer, while high-performance Ethernet underpins the fabric. The result is a tested stack for end-to-end ingestion, vectorization, retrieval, and inference.

Three deployment options aligned to maturity and scale

Customers can adopt one of three options, all orderable now:

– VAST on Cisco UCS, for a unified data store vetted through Cisco SolutionsPlus.

– VAST on Cisco AI PODs, for a pre-validated infrastructure stack with simplified ordering.

– VAST InsightEngine on Cisco AI PODs, for a turnkey AI Data Platform enabling NVIDIA NIMs as a Service for enterprise RAG.

Architecture: data intelligence, compute, and Ethernet networking

The design closes the loop from data ingest to inference with GPU-accelerated I/O, serverless automation, and Ethernet-based acceleration to minimize latency and friction.

VAST InsightEngine for data intelligence and RAG acceleration

VAST InsightEngine scans and catalogs files, objects, and tables in the VAST Data Platform; performs real-time embedding and indexing; and orchestrates retrieval using NVIDIA components such as NeMo Retriever and NIM microservices. Running these services natively on the VAST AI OS streamlines lifecycle management, autoscaling, and model updates, enabling continuous RAG without heavy integration work.
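To make the ingest, embed, index, and retrieve loop described above concrete, here is a minimal Python sketch. It does not use VAST, NeMo Retriever, or NIM APIs: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and `VectorIndex`, the document IDs, and the sample texts are all hypothetical names chosen for illustration. The point is only that a record becomes searchable as soon as it is ingested, with no separate batch indexing step.

```python
import math
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: L2-normalized term counts."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity of two already-normalized sparse vectors."""
    return sum(weight * b.get(token, 0.0) for token, weight in a.items())


class VectorIndex:
    """Hypothetical in-memory index: each document is embedded at ingest time."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, str, dict[str, float]]] = []

    def ingest(self, doc_id: str, text: str) -> None:
        # Embedding happens on write, so the document is retrievable immediately.
        self._rows.append((doc_id, text, embed(text)))

    def retrieve(self, query: str, k: int = 2) -> list[tuple[str, str]]:
        query_vec = embed(query)
        scored = sorted(
            self._rows,
            key=lambda row: cosine(query_vec, row[2]),
            reverse=True,
        )
        return [(doc_id, text) for doc_id, text, _ in scored[:k]]


index = VectorIndex()
index.ingest("kb-1", "router firmware upgrade procedure")
index.ingest("kb-2", "customer billing dispute policy")
top_hit = index.retrieve("billing dispute", k=1)
```

In a production pipeline the retrieved text would be passed to the model as context; here `top_hit` simply holds the best-matching document for the query.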

Cisco UCS + NVIDIA stack for production AI and agents

Cisco’s UCS portfolio paired with NVIDIA Blackwell-class RTX PRO Server GPUs delivers inference and light training capacity, while NVIDIA AI Enterprise supplies production-ready models and toolchains. The stack is designed for multi-agent workloads, dynamic context windows, and continuous learning loops that depend on high-throughput, low-latency data access.

Ethernet-first acceleration with BlueField-3 DPUs and SuperNICs

NVIDIA BlueField-3 DPUs and SuperNICs help offload networking, security, and storage tasks and accelerate AI traffic in Ethernet-based clouds. This approach aligns with operator and enterprise preferences for standards-based fabrics, offering an alternative path to InfiniBand while maintaining performance for multi-tenant AI clusters.

Built-in AI security, governance, and observability

The solution integrates layered defenses, role-based access control (RBAC), and auditability so teams can scale AI use without compromising oversight or compliance.

Policy enforcement, RBAC, and token-level safeguards

Cisco Hypershield and AI Defense bring microsegmentation, model and data protection, and runtime guardrails into the AI fabric, helping restrict access by role and data domain. Combined with compliance-ready logging and audit trails, the platform enables secure handling of sensitive workloads across teams and lines of business.
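The idea of restricting access by role and data domain, with an audit trail, can be sketched in a few lines of Python. This is an illustration of the general pattern only, not how Hypershield or AI Defense implement it. The role names, data domains, `Chunk` type, and `authorize_chunks` function are all hypothetical. The sketch filters retrieved chunks before they reach a model and records every allow or deny decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    domain: str  # data domain the chunk belongs to, e.g. "network"
    text: str


# Hypothetical mapping of roles to the data domains they may read.
ROLE_DOMAINS: dict[str, set[str]] = {
    "noc_engineer": {"network"},
    "care_agent": {"customer", "knowledge_base"},
    "auditor": {"network", "customer", "knowledge_base"},
}


def authorize_chunks(role: str, chunks: list[Chunk], audit_log: list[dict]) -> list[Chunk]:
    """Return only the chunks this role may see, logging every decision."""
    # Unknown roles map to an empty set, so the default is deny.
    allowed_domains = ROLE_DOMAINS.get(role, set())
    permitted = []
    for chunk in chunks:
        is_allowed = chunk.domain in allowed_domains
        audit_log.append(
            {
                "role": role,
                "doc_id": chunk.doc_id,
                "domain": chunk.domain,
                "decision": "allow" if is_allowed else "deny",
            }
        )
        if is_allowed:
            permitted.append(chunk)
    return permitted


retrieved = [
    Chunk("log-17", "network", "link flap on core router"),
    Chunk("acct-42", "customer", "billing address change"),
]
audit_log: list[dict] = []
visible = authorize_chunks("noc_engineer", retrieved, audit_log)
```

Filtering after retrieval but before prompt assembly keeps denied content out of the model context entirely, and the log captures denials as well as grants, which is what an audit workflow typically needs.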

End-to-end AI observability with Splunk

Telemetry and analytics via Splunk provide end-to-end visibility across data pipelines, model services, and network flows. This supports capacity planning, SLO tracking, and incident response, all of which are crucial for agentic systems that operate continuously and interact with production systems.

Strategic impact for telecom and enterprise AI

For operators, cloud providers, and large enterprises, the package aligns AI infrastructure with data gravity, governance mandates, and Ethernet-centric networks.

Why it matters for telco and edge AI deployments

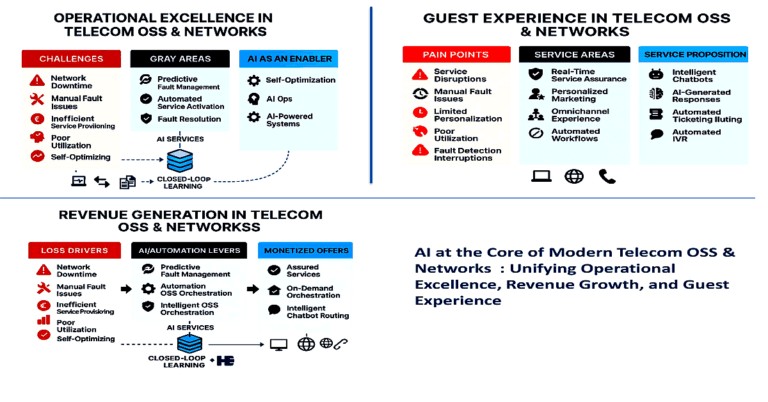

Agentic AI is moving into service operations, field automation, and customer care, where near-real-time retrieval from OSS/BSS, logs, and knowledge bases is essential. An Ethernet-based AI data fabric with DPUs fits existing data center and edge designs, enabling telcos to run secure RAG at MEC sites and central offices while retaining data locality and policy control.

From pilots to scaled production AI

Validated architectures reduce integration risk, speed PoCs, and standardize Day 2 operations. The promise to shrink RAG latency to seconds is material for use cases such as NOC copilots, fraud mitigation, proactive care, and network planning, where recency and precision drive business outcomes.

What to watch next and how to get started

Teams should align architecture choices to data, governance, and latency needs, then quantify performance and TCO before scaling.

Evaluation checklist for performance, security, and TCO

– Run RAG benchmarks using live enterprise data; validate end-to-end latency, throughput, and retrieval accuracy.

– Test RBAC, lineage, and audit workflows across regulated datasets; verify isolation with DPUs and policy enforcement with Hypershield.

– Measure cost per agent action and per token under concurrent workloads; right-size GPUs, DPUs, and storage tiers.

– Pilot NIM microservices and NeMo Retriever for lifecycle and autoscaling; validate rollbacks and upgrade paths.

– Instrument with Splunk for SLOs, drift detection, and capacity alerts; codify runbooks for multi-agent operations.
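The latency and cost items in the checklist above reduce to a small amount of arithmetic, sketched here in Python. The helper names (`percentile`, `time_calls`, `cost_per_unit`) and all figures are hypothetical placeholders, not vendor numbers. The sketch assumes a nearest-rank percentile and a simple hourly-cost model; real evaluations would substitute measured latencies and actual infrastructure costs.

```python
import math
import time
from typing import Callable, Iterable


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def time_calls(fn: Callable[[str], object], queries: Iterable[str]) -> list[float]:
    """Measure wall-clock latency in seconds for each query passed to fn."""
    latencies = []
    for query in queries:
        start = time.perf_counter()
        fn(query)
        latencies.append(time.perf_counter() - start)
    return latencies


def cost_per_unit(hourly_cost: float, units_per_hour: float) -> float:
    """Cost per token or per agent action, given throughput under load."""
    if units_per_hour <= 0:
        raise ValueError("throughput must be positive")
    return hourly_cost / units_per_hour


# Placeholder end-to-end latencies in seconds from a benchmark run.
observed = [0.2, 0.1, 0.4, 0.3, 0.5]
p50 = percentile(observed, 50)
p95 = percentile(observed, 95)

# Placeholder figures: 12.0 currency units per hour, 6000 agent actions per hour.
per_action = cost_per_unit(12.0, 6000)
```

Reporting tail percentiles such as p95 alongside the median matters here because concurrent agent workloads tend to surface contention that an average would hide.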

Near-term actions for 60–90 day AI PoCs

Identify two to three agentic AI use cases with clear ROI, stand up a Secure AI Factory POD with InsightEngine, and target a 60–90 day PoC that proves latency, governance, and integration with existing data estates. If Ethernet is your strategic fabric, compare SuperNICs and BlueField-3 DPUs against the status quo of CPU-only networking, measuring how much offload frees host CPU cycles and improves predictability.