Windows 11 evolves into an agentic AI OS

Microsoft is weaving Copilot directly into Windows 11 so users can talk to their PCs and allow AI to see the screen and take actions, signaling a shift toward an “AI PC” model.

Voice control with “Hey, Copilot” becomes a core input

Microsoft is rolling out a wake phrase so users can start tasks or ask for help hands-free, positioning voice alongside keyboard and mouse as a core input. The company believes everyday voice use will extend beyond meetings and dictation to device control and multi-step workflows. This time, the bet is that modern AI will overcome the adoption barriers that stalled earlier attempts like Cortana, by delivering more reliable understanding and task completion.

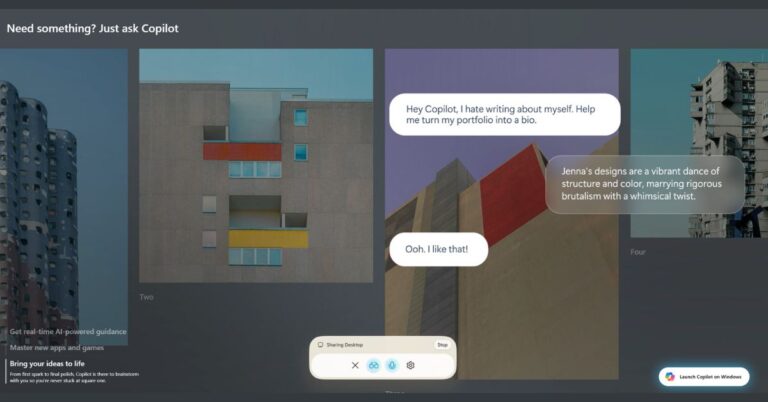

Copilot Vision: opt-in, screen-aware AI assistance

Copilot Vision can view what’s on your screen—apps, documents, photos, even games—and provide step-by-step guidance or troubleshooting. It works like sharing your screen in a video call and is opt-in, a deliberate contrast to last year’s failed “Recall” feature. The capability is rolling out broadly where Copilot is available, and it underpins agentic workflows because the AI must “see” context before it can help.

Copilot Actions: secure AI agent to operate your PC

Copilot Actions moves from advice to execution. In a secure, contained desktop environment, the AI can perform local tasks such as batch edits or configuration changes, while listing each step it takes. Microsoft is limiting this to a preview with narrow use cases, openly acknowledging that agents will make mistakes with complex apps and need tuning. Expect a gradual expansion as models improve and Microsoft hardens safety, auditing, and rollback controls.

Taskbar Copilot and faster Windows search

Windows 11 integrates Copilot directly into the taskbar, with one-click entry points for Voice and Vision. The search experience also gets an upgrade to accelerate finding local files, apps, and settings, aligning with the broader goal of lowering friction between natural language intent and system actions.

Enterprise and telecom impact of agentic Windows 11

As Windows 10 support sunsets, Microsoft is using Windows 11 to normalize voice-driven and screen-aware agents, with implications for endpoint strategy, app design, and operations.

From chat to OS-level AI automation

The shift is from Q&A to “do this for me.” That means OS-level automation that spans multiple apps, credentials, and windows—traditionally the domain of scripts and RPA. If Microsoft can make agentic actions safe and dependable, task automation will move closer to end users and reduce reliance on bespoke integrations. For telecom and large enterprises, this could speed workflows across OSS/BSS, ticketing, network tools, and office apps without waiting on API-level work.

What AI PCs mean for OEMs and ISVs

Even as Microsoft enables these features on standard Windows 11 PCs, voice and on-device reasoning will benefit from AI-capable hardware over time. OEMs will market “AI PC” benefits around responsiveness and privacy. ISVs should assume users will invoke features by voice and expect agents to navigate UIs autonomously. Clear UI structure, accessibility labels, and stable control hierarchies will become table stakes so agents can act predictably.

Operational impact for telecom and IT teams

Agentic assistance fits field service, NOC, and contact center scenarios where hands-free guidance and step-by-step actions can reduce error rates and handling time. Screen-aware help can coach technicians through specialized tools, while controlled actions can execute standard operating procedures. For IT, first-line support could shift from knowledge articles to guided fixes the agent performs, with humans supervising rather than clicking every step.

Managing privacy, reliability, and AI governance risks

Deploying screen-aware agents requires careful guardrails around data, user consent, and auditability.

Privacy post-Recall: explicit consent and controls

Copilot Vision streams what users see, which may include sensitive material. While it is opt-in and session-based, enterprises must enforce policies on when screen sharing is allowed, how content is redacted, and what data is logged. Users need a clear indicator whenever the screen is visible to the agent, plus easy controls to pause sharing or limit its scope to specific apps, windows, or regions.
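One way to picture such a policy is a deny-by-default check that runs before any window is shared with the agent. The sketch below is illustrative only: `VisionPolicy`, `may_share`, and the app names are hypothetical and do not correspond to any Microsoft or Windows management API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VisionPolicy:
    """Hypothetical enterprise policy for screen-aware sessions (not a real API)."""
    allowed_apps: frozenset = frozenset()
    blocked_apps: frozenset = frozenset()
    require_user_consent: bool = True


def may_share(policy: VisionPolicy, app_name: str, user_consented: bool) -> bool:
    """Return True only if both the policy and the user permit sharing this app."""
    app = app_name.lower()
    if policy.require_user_consent and not user_consented:
        return False
    if app in policy.blocked_apps:
        return False
    # Deny by default: an app must be explicitly allowlisted to be shared.
    return app in policy.allowed_apps


# Made-up app names, purely for illustration.
policy = VisionPolicy(
    allowed_apps=frozenset({"excel", "ticketing-console"}),
    blocked_apps=frozenset({"password-manager"}),
)

print(may_share(policy, "Excel", user_consented=True))
print(may_share(policy, "password-manager", user_consented=True))
```

Under these assumptions, an allowlisted app is shareable only after the user consents, and anything not listed stays hidden from the agent.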

Model reliability and human-in-the-loop safeguards

Microsoft warns early agents will make mistakes with complex applications. Enterprises should start with low-risk, reversible tasks and require confirmation on destructive actions. Human-in-the-loop patterns—previewing steps, enforcing approvals, and enabling instant rollback—are essential. Telemetry on success rates and error types should feed continuous improvement.
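The human-in-the-loop pattern described above can be sketched as a small plan runner: every step carries a description that can be previewed, destructive steps wait for approval, and completed steps are undone if the plan stops early. This is a minimal sketch under stated assumptions. `Step`, `run_plan`, and the `approve` callback are hypothetical names, not part of Copilot Actions, and rolling back on rejection is one possible design choice rather than documented behavior.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    description: str            # shown to the reviewer before execution
    do: Callable[[], None]      # performs the action
    undo: Callable[[], None]    # reverses the action
    destructive: bool = False   # destructive steps need explicit approval


def run_plan(steps: List[Step], approve: Callable[[Step], bool]) -> List[str]:
    """Run steps in order, asking for approval before destructive ones.

    If a step is rejected or raises, completed steps are undone in reverse
    order. Returns a log of what happened.
    """
    log: List[str] = []
    done: List[Step] = []
    for step in steps:
        if step.destructive and not approve(step):
            log.append(f"rejected: {step.description}")
            break
        try:
            step.do()
        except Exception as exc:
            log.append(f"failed: {step.description} ({exc})")
            break
        done.append(step)
        log.append(f"done: {step.description}")
    else:
        return log  # every step completed, nothing to roll back
    for step in reversed(done):
        step.undo()
        log.append(f"rolled back: {step.description}")
    return log


# Illustrative run on an in-memory list standing in for real files.
files = ["a.txt", "b.txt"]
plan = [
    Step("add c.txt", do=lambda: files.append("c.txt"), undo=lambda: files.remove("c.txt")),
    Step("clear all files", do=files.clear, undo=lambda: None, destructive=True),
]
log = run_plan(plan, approve=lambda step: False)  # the reviewer declines
```

In this run the reviewer rejects the destructive step, so the earlier step is rolled back and `files` ends up unchanged. The returned log is the kind of record that could feed the success-rate and error-type telemetry mentioned above.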

Compliance, data handling, and network impact

Screen-aware and voice-driven workflows may interact with regulated data and traverse corporate networks. Security teams should validate how content is processed, where it’s stored, and how it’s encrypted in transit and at rest. DLP, logging, and zero-trust access controls must extend to agent sessions. Network teams should assess bandwidth and QoS implications for sustained screen streaming and voice interactions, especially for remote and mobile employees.

Next steps for leaders adopting Copilot in Windows 11

A structured adoption plan will maximize value while containing risk.

Run a guarded Windows 11 Copilot pilot

Target a few repeatable workflows in support, finance, or operations; enable Copilot Voice and Vision for a defined user group; and set policies for when the agent can view screens or execute actions. Measure task time, error rates, and rework before the pilot to establish a baseline, then measure them again afterward.
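A before-and-after comparison can be as simple as the sketch below. The function names are hypothetical and the numbers are made-up placeholders to show the arithmetic, not measured results.

```python
from statistics import mean


def summarize(runs):
    """Summarize a list of (task_seconds, had_error) observations."""
    return {
        "mean_task_seconds": mean(t for t, _ in runs),
        "error_rate": sum(1 for _, err in runs if err) / len(runs),
    }


def relative_change(before, after):
    """Relative change per metric; None where the baseline value is zero."""
    return {
        key: (after[key] - before[key]) / before[key] if before[key] else None
        for key in before
    }


# Placeholder observations, invented for illustration only.
baseline_runs = [(300, True), (280, False), (320, False), (300, False)]
pilot_runs = [(240, False), (210, False), (270, False), (240, False)]

baseline = summarize(baseline_runs)
pilot = summarize(pilot_runs)
change = relative_change(baseline, pilot)
```

A real pilot would need far more observations than this, and ideally a comparison group, before drawing conclusions from the change.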

Make apps agent- and voice-friendly

Work with ISVs and internal teams to improve UI semantics, keyboard navigability, and accessibility labels, and to expose APIs where possible. Stable UI structures help agents act reliably; well-scoped APIs allow safer, higher-precision actions.
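As a rough illustration of what auditing accessibility labels might involve, the sketch below walks a simplified UI tree and reports interactive controls that lack a label. The dictionary-based tree, the role names, and `unlabeled_controls` are assumptions made for this example. A real audit would read the platform's accessibility tree through its own tooling.

```python
# Roles treated as interactive in this sketch (an assumption, not a standard list).
INTERACTIVE_ROLES = {"button", "textbox", "checkbox", "link"}


def unlabeled_controls(node, path=""):
    """Return paths of interactive controls with a missing or blank label."""
    here = f"{path}/{node.get('role', '?')}"
    found = []
    label = (node.get("label") or "").strip()
    if node.get("role") in INTERACTIVE_ROLES and not label:
        found.append(here)
    for child in node.get("children", []):
        found.extend(unlabeled_controls(child, here))
    return found


# Hypothetical UI tree for a ticket editor window.
ui = {
    "role": "window",
    "label": "Ticket editor",
    "children": [
        {"role": "button", "label": "Save"},
        {"role": "button", "label": ""},
        {"role": "panel", "children": [{"role": "textbox"}]},
    ],
}

missing = unlabeled_controls(ui)
```

Here `missing` lists the blank-labeled button and the unlabeled textbox, the kind of controls an agent or a screen reader would struggle to identify.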

Align endpoint, identity, and security for AI agents

Review endpoint management, identity, and DLP settings for agent scenarios, including consent prompts, session logging, and least-privilege access to applications and files. Where performance matters, plan hardware refresh cycles with AI-ready capabilities in mind to keep more inference on-device.

Establish oversight, KPIs, and governance

Define acceptable use, escalation paths, and auditing requirements. Track completion rates, user satisfaction, policy violations, and time saved. Use findings to expand into higher-value workflows and to refine governance as Copilot Actions matures.

Bottom line: Microsoft is recasting Windows as an agentic platform, with Voice for input, Vision for context, and Actions for execution. Enterprises that pilot now, codify guardrails, and tune their apps for agent reliability will be best positioned to capture the productivity gains as the platform scales.