Takane LLM Gets Boost with New AI Compression and Optimization Approach

Fujitsu has introduced a new generative AI reconstruction technology that drastically reduces memory usage and boosts performance for large language models. Announced from Kawasaki, Japan, on September 8, 2025, the innovation is central to enhancing the capabilities of Fujitsu’s Takane LLM through the Fujitsu Kozuchi AI service.

Using 1-bit quantization, the company compressed large models while retaining 89% of their original accuracy, a retention rate it describes as world-leading. The compressed models also use 94% less memory and run inference three times faster. The company says the result sets a new benchmark for AI model efficiency and performance, and it is aimed at power-sensitive environments and real-world enterprise applications.
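The 94% figure is easiest to understand as bits-per-weight arithmetic. The sketch below makes three assumptions. It uses a 16-bit baseline, which the announcement does not state. It uses a hypothetical 100B-parameter model. It counts only weight storage, not activations or KV cache. Under those assumptions, storing 1 bit per weight saves 93.75%, which is close to the reported 94%. Many 1-bit schemes also keep per-group scale factors, and these reduce the saving slightly. The group size of 128 used here is another assumption.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def reduction(baseline_bits: float, quantized_bits: float) -> float:
    """Fraction of weight memory saved when moving to fewer bits per weight."""
    return 1 - quantized_bits / baseline_bits


# Hypothetical 100B-parameter model; the 16-bit baseline is an assumption.
params = 100e9
print(f"16-bit: {weight_memory_gb(params, 16):.1f} GB")  # 16-bit: 200.0 GB
print(f"1-bit:  {weight_memory_gb(params, 1):.1f} GB")   # 1-bit:  12.5 GB
print(f"saving: {reduction(16, 1):.2%}")                 # saving: 93.75%

# Assumed: one 16-bit scale shared by each group of 128 weights.
effective_bits = 1 + 16 / 128
print(f"with scales: {effective_bits} bits/weight, saving {reduction(16, effective_bits):.2%}")
# with scales: 1.125 bits/weight, saving 92.97%
```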

1-Bit Quantization: Pushing Compression Without Losing Accuracy

A core part of Fujitsu’s development is an advanced quantization technology that compresses the parameters of AI models without severely compromising their performance. Traditional quantization techniques often struggle with error accumulation across deep neural networks, particularly in LLMs.

Fujitsu addressed this with a quantization error propagation algorithm that manages error across layers. Rather than quantizing each layer in isolation, the algorithm accounts for the error already introduced by the quantized layers before it. This keeps precision loss from compounding as signals pass through the network, so accuracy stays consistent from layer to layer.
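The cross-layer idea can be illustrated with a toy two-layer linear network. This is a simplified sketch in the spirit of the published Quantization Error Propagation paper, not Fujitsu's implementation. Before quantizing layer 2, it refits that layer's weights so they reproduce the full-precision output when fed the inputs that the partially quantized model actually produces. Several choices are assumptions made for the example: the ridge-style `damp` term, the sign-times-mean-magnitude `binarize`, the layer sizes, and the random calibration data. The script prints the output error of plain per-layer binarization next to the error after compensation. On a toy this small, the size of the gap depends on the random draw.

```python
import random


def matmul(A, B):
    """Matrix product of nested lists: (m x k) @ (k x n) -> (m x n)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def inverse(M):
    """Gauss-Jordan inverse with partial pivoting; adequate for small, damped matrices."""
    n = len(M)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(M)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def binarize(W):
    """1-bit weights: sign of each entry times the mean |w| of its row (assumed scheme)."""
    out = []
    for row in W:
        s = sum(abs(w) for w in row) / len(row)
        out.append([s if w >= 0 else -s for w in row])
    return out


def sq_error(Y_ref, Y):
    return sum((a - b) ** 2 for ra, rb in zip(Y_ref, Y) for a, b in zip(ra, rb))


def compensate(W, X, X_hat, damp=1e-2):
    """Refit W so that W_star @ X_hat approximates the full-precision output W @ X.

    Ridge solution of  min ||W X - W' X_hat||^2 + damp * ||W'||^2:
        W_star = W X X_hat^T (X_hat X_hat^T + damp I)^-1
    """
    Xt = transpose(X_hat)
    gram = matmul(X_hat, Xt)
    for i in range(len(gram)):
        gram[i][i] += damp
    return matmul(matmul(matmul(W, X), Xt), inverse(gram))


def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
X = rand_matrix(6, 64)                         # hypothetical calibration inputs
W1, W2 = rand_matrix(4, 6), rand_matrix(3, 4)  # toy two-layer linear network

H_fp = matmul(W1, X)            # layer-2 input in the full-precision model
H_q = matmul(binarize(W1), X)   # layer-2 input once layer 1 is quantized
target = matmul(W2, H_fp)       # full-precision output we want to preserve

W2_star = compensate(W2, H_fp, H_q)
plain = sq_error(target, matmul(binarize(W2), H_q))
propagated = sq_error(target, matmul(binarize(W2_star), H_q))
print(f"per-layer binarization: {plain:.2f}, with error compensation: {propagated:.2f}")
```

The key design choice is the refit target. Each layer is fitted against the quantized model's real inputs (`H_q`) but asked to reproduce the full-precision output (`target`). Upstream error is therefore absorbed instead of passed on.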

By making 1-bit quantization, the most aggressive level of quantization, work at this accuracy, the technology lets large-scale models that previously needed four high-performance GPUs run on a single low-end GPU. This improves accessibility and also drastically reduces power consumption, in line with sustainability goals.
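Here is what 1-bit storage means in practice for a single weight row. Each weight keeps only its sign, packed eight to a byte, and the whole row shares one scale. The mean-absolute-value scale used below is a common choice in binarization work. It is an assumption here, not Fujitsu's published scheme, and the example weights are illustrative.

```python
def binarize_row(row):
    """Keep only the sign of each weight, plus one scale for the whole row.

    For fixed signs, the mean absolute value is the scale that minimizes the
    squared reconstruction error.
    """
    scale = sum(abs(w) for w in row) / len(row)
    bits = [1 if w >= 0 else 0 for w in row]
    return scale, bits


def pack_bits(bits):
    """Pack 0/1 values into bytes, eight weights per byte, least significant bit first."""
    packed = bytearray((len(bits) + 7) // 8)
    for i, b in enumerate(bits):
        if b:
            packed[i // 8] |= 1 << (i % 8)
    return bytes(packed)


def dequantize(packed, scale, n):
    """Rebuild n approximate weights: +scale where the bit is set, -scale otherwise."""
    return [scale if (packed[i // 8] >> (i % 8)) & 1 else -scale for i in range(n)]


row = [0.5, -1.5, 0.25, -0.75, 1.0, -0.5, 2.0, -1.0]
scale, bits = binarize_row(row)
packed = pack_bits(bits)
print(scale)                      # 0.9375
print(len(packed), packed.hex())  # 1 55  (8 weights in 1 byte instead of 16 bytes at 16-bit)
print(dequantize(packed, scale, len(row)))
```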

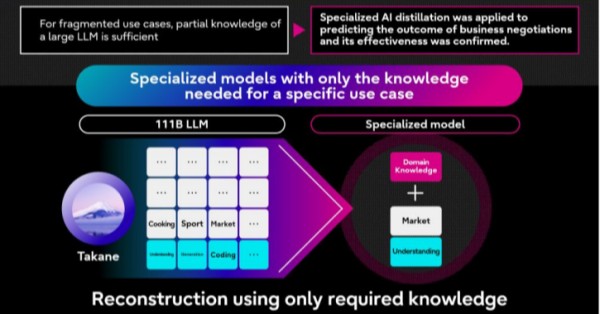

Specialized Distillation: Lightweight Models with Higher Accuracy

Fujitsu’s second major innovation is a specialized knowledge distillation technique. Unlike general-purpose distillation, this brain-inspired method reorganizes AI architectures to become more efficient at specific tasks. It involves:

- Structural optimization inspired by how the human brain strengthens memory and sheds irrelevant knowledge
- Pruning and transformer block integration for tailoring the model
- Neural Architecture Search (NAS) using Fujitsu’s own proxy-based technology to generate and evaluate multiple architecture candidates
- Expertise distillation from larger “teacher” models (like Takane) into smaller, purpose-built models
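The teacher-to-student step builds on generic knowledge distillation, as popularized by Hinton et al. (2015). In that approach, the student is trained to match the teacher's temperature-softened output distribution as well as the true labels. The sketch below shows only that textbook loss. It does not represent Fujitsu's brain-inspired structural optimization, pruning, or NAS stages. The temperature, the weighting `alpha`, and the logits are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, label, temperature=2.0, alpha=0.5):
    """Blend of soft-target matching (teacher -> student) and hard-label cross-entropy.

    The KL term is scaled by temperature**2 so its gradients stay comparable in
    size to the cross-entropy term as the temperature changes.
    """
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    kd = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    ce = -math.log(softmax(student_logits)[label])
    return alpha * kd + (1 - alpha) * ce


teacher = [4.0, 1.0, 0.5]  # illustrative teacher logits for a 3-class task
student = [2.0, 1.5, 0.2]  # illustrative student logits
print(distillation_loss(student, teacher, label=0))
```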

This methodology enables smaller AI models, some with 1/100th of the parameters, to outperform their much larger counterparts. In Fujitsu’s internal tests on text-based Q&A in CRM applications, the distilled models ran inference 11 times faster, improved accuracy by 43%, and cut GPU usage and operational costs by 70%.
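The NAS step generates and evaluates candidate student architectures. Results like a 1/100th-size model can be pictured as a three-step search. First, enumerate student architectures. Second, discard any that exceed a parameter budget. Third, rank the rest with a cheap proxy score instead of fully training each one. In the sketch below, the teacher dimensions, the candidate grid, and `toy_proxy` are all hypothetical. `toy_proxy` is only a placeholder for a real proxy, since this article does not describe Fujitsu's. The parameter count uses the common approximation of about 12·L·d² weights for L transformer blocks of width d, ignoring embeddings.

```python
from itertools import product


def block_params(layers: int, width: int) -> int:
    """Approximate transformer-block parameters: ~12 * layers * width^2 (no embeddings)."""
    return 12 * layers * width * width


def toy_proxy(layers: int, width: int) -> float:
    """Hypothetical stand-in for a cheap, training-free quality estimate."""
    return layers ** 0.5 * width


def search(teacher_layers, teacher_width, ratio=100, top_k=3, proxy=toy_proxy):
    """Return the best-scoring candidates whose size is at most 1/ratio of the teacher."""
    budget = block_params(teacher_layers, teacher_width) // ratio
    grid = product([4, 8, 12, 16, 24], [512, 1024, 1536, 2048])  # hypothetical search space
    feasible = [(n, w) for n, w in grid if block_params(n, w) <= budget]
    ranked = sorted(feasible, key=lambda c: proxy(*c), reverse=True)
    return budget, ranked[:top_k]


budget, best = search(teacher_layers=64, teacher_width=8192)
print(f"budget: {budget:,} parameters")
for layers, width in best:
    print(layers, width, f"{block_params(layers, width):,}")
```

Swapping in a different `proxy` function changes only the ranking. The budget filter that enforces the 1/100 size target stays the same.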

Real-World Applications: CRM and Image Recognition

The new reconstruction approach was tested in several real-world use cases:

- Text QA for sales negotiation prediction using Fujitsu’s CRM data showed improved prediction reliability
- Image recognition tasks saw a 10% gain in accuracy when detecting previously unseen objects, outperforming existing distillation methods with more than three times the accuracy improvement those methods achieved over the past two years

These outcomes underscore the technology’s ability to deliver both speed and performance for vertical-specific AI deployments in sectors like finance, manufacturing, healthcare, and retail.

Edge AI and Agentic Intelligence: A Path to Autonomous Systems

With such significant compression, the technology enables agentic AI models to run directly on edge devices such as smartphones and industrial machines. This improves real-time responsiveness, enhances data privacy, and reduces the need for centralized compute resources.

This transition aligns with broader industry trends toward edge computing, multi-access edge computing (MEC), and on-device AI, potentially unlocking new use cases in factory automation, remote healthcare, and personalized retail services.

Fujitsu aims to expand these capabilities toward creating autonomous AI agents that can interpret complex environments and solve problems independently—much like human reasoning.

A Sustainable AI Vision Backed by Performance

The breakthrough not only provides performance benefits but also addresses the growing concern of AI’s environmental footprint. By slashing GPU memory needs by up to 94%, Fujitsu’s technology offers a sustainable pathway for deploying generative AI at scale.

Fujitsu’s roadmap includes:

- Wider rollout of Takane-based trial environments with the new quantization tech in the second half of FY2025
- Releases of quantized open-weight models, such as Cohere’s Command A, starting immediately via platforms like Hugging Face
- Further advancements in model reduction, targeting a memory footprint as small as 1/1000th of the original without sacrificing accuracy

Accepted Research and Recognition

Fujitsu’s quantization innovation, titled “Quantization Error Propagation: Revisiting Layer-Wise Post-Training Quantization,” has been accepted at the IEEE International Conference on Image Processing (ICIP 2025). The company also released a companion paper on its optimization technique: “Optimization by Parallel Quasi-Quantum Annealing with Gradient-Based Sampling.”

Looking Ahead: Industry-Ready, Specialized AI Models

Fujitsu’s ultimate goal is to evolve these optimized Takane-based models into advanced, agentic AI systems capable of adapting to various industry domains. The company is especially focused on tasks where only a subset of the full LLM’s capabilities is needed, which makes task-specific model specialization more practical and cost-effective.

This approach not only reduces resource overhead but also facilitates deeper AI integration into mission-critical areas, such as autonomous decision-making and industrial process optimization.

By enabling high-precision AI models to operate efficiently at the edge, Fujitsu is paving the way for a scalable, sustainable, and more inclusive AI ecosystem.

Final Thoughts

Fujitsu’s work represents a practical advancement in the field of AI—prioritizing efficiency, adaptability, and sustainability. By marrying quantization and specialized distillation, the company has found a way to make powerful generative AI models accessible and applicable across industries, from enterprise CRM systems to real-time edge applications.

This technology may not reinvent AI, but it certainly brings it closer to the environments—and devices—where it’s needed most.