5G & Edge Edition | 5G Magazine

Featured articles in this edition

- March 16, 2024

Introduction

Edge computing is an integral part of AT&T’s overall 5G strategy with related offerings driven both by what customers need and where the solution is needed to transform business outcomes. AT&T has built horizontal solutions with flexible application execution applied to solve business problems for customers across verticals, including manufacturing, healthcare, stadiums, public sector, retail, and more.

AT&T 5G & Edge Offerings

Today, enterprises generally experience approximately 100ms of latency for applications hosted in a regional cloud environment. For applications that deliver a good user experience within this latency window, the regional cloud is a viable solution. As enterprises continue to innovate and transform their business operations, application processing will inevitably migrate closer to the customer’s network edge and their end-users.

Enterprises and end customers will place more real-time demands on their applications and the underlying network, which will ultimately require lower latency and ever more deterministic performance. AT&T’s offerings are positioned to enable edge computing where it’s needed most – closer to your end-users and where the data originates. For applications needing ultra-low latency, or for customers who want enhanced privacy and control over their data and applications, our Multi-Access Edge Computing (MEC) solution provides an on-premises edge solution.

When low-latency, optimized 5G and wireline routing at the network edge is needed, our AT&T Network Edge (ANE) will offer scalable, localized edge clouds in multiple edge-ready metros. In cases where your business requires performance for both on-site and remote workers, MEC and ANE are complementary. The solutions can work together to deliver the performance your business needs, with workloads placed optimally where you do business.
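The placement choice described above (regional cloud, network edge, or on-premises MEC) can be sketched as a simple decision rule. This is an illustrative sketch only: the roughly 100 ms regional cloud figure comes from the text, while the other latency figures, the tier names, and the `Workload` type are assumptions made for the example and are not part of any AT&T product.

```python
from dataclasses import dataclass

# Typical latency per tier in milliseconds, ordered from most centralized
# to most local. Only the ~100 ms regional-cloud figure is from the article;
# the other two values are assumptions for illustration.
TIERS = [
    ("regional_cloud", 100),
    ("network_edge", 30),   # assumed
    ("on_prem_mec", 10),    # assumed
]


@dataclass
class Workload:
    name: str
    max_latency_ms: int
    data_must_stay_on_site: bool = False


def choose_tier(workload: Workload) -> str:
    """Pick the most centralized tier that still meets the latency budget."""
    if workload.data_must_stay_on_site:
        return "on_prem_mec"
    for tier, typical_ms in TIERS:
        if typical_ms <= workload.max_latency_ms:
            return tier
    # Nothing fits the budget; fall back to the most local option.
    return "on_prem_mec"
```

For example, a workload with a 150 ms budget would stay in the regional cloud, while one with a 40 ms budget would move to the network edge under these assumed numbers.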

AT&T Network Edge (ANE)

One way AT&T enables edge computing solutions for endpoints on the nationwide 5G network is through the AT&T Network Edge. AT&T is partnering with major cloud services providers to connect public and private edges so that customers may realize reductions in latency and accelerate next-gen applications and new experiences. See diagram in the 5G Magazine for more details.

Below are example ANE use cases that AT&T is exploring with enterprise customers and technology providers:

- Autonomous machines

- Connected vehicles

- Serverless branch

- Real-time analytics

- Mobile gaming

- Augmented/Virtual reality

- Intelligent video analytics

- Interactive live video

AT&T Network Edge (ANE) brings the public cloud closer for low-latency applications and enables transformation across most, if not all, vertical industries. ANE delivers fast, low-latency 5G and fiber transport connectivity integrated with high-performance edge computing. ANE can also reduce the complexity of edge computing by deploying applications in public clouds with leading cloud service providers.

AT&T Multi-Access Edge Computing (MEC)

The AT&T MEC platform puts the mobile network edge on the customer premises with the ability to control what cellular data is processed on-site or routed through the AT&T network core. For enterprise customers with a sufficient existing umbrella of local radio coverage (like a distributed antenna system), the addition of an AT&T MEC solution enables a powerful cellular network for massive IoT use-cases where the data is turned into insights on the customer’s local area network without exiting their premises.
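The idea of deciding which cellular traffic is processed on-site and which is routed through the network core can be sketched as a destination-based rule. This is a minimal illustration, not the AT&T MEC implementation: the prefix `10.20.0.0/16` and the labels `local_lan` and `network_core` are hypothetical.

```python
import ipaddress

# Hypothetical on-premises prefixes that should be served locally.
LOCAL_PREFIXES = [ipaddress.ip_network("10.20.0.0/16")]


def route(destination: str) -> str:
    """Return where a packet to `destination` should be processed."""
    address = ipaddress.ip_address(destination)
    if any(address in prefix for prefix in LOCAL_PREFIXES):
        return "local_lan"      # stays on the customer premises
    return "network_core"       # continues through the operator core
```

A real deployment would typically match on more than the destination address, but the sketch shows the basic split between local and core processing.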

Target enterprises that can potentially take advantage of AT&T’s 5G & MEC offering include:

- Manufacturing & Warehouse: Agile Operations, AGVs (Automated Guided Vehicles), Asset Tracking, Predictive Maintenance, and Video Surveillance

- Oil & Gas / Mining: Sensors, Drone Monitoring, Worker Safety, Remote Inspection, and Diagnostics

- Transportation Hubs/Retail: Freight / Fleet Tracking, Connected Workers, Incident Reporting, and Intrusion Detection

- Healthcare: Patient Monitoring, Environment Management, and Asset Utilization

- Stadiums, Venues & Events: Live High-Definition Video Streaming, Team Operations, AR for Immersive Fan Experiences

AT&T 5G and Edge Use Cases

Manufacturing & Warehouses

Manufacturers use high-resolution video cameras and sensors to track and automate the production lifecycle to reduce process defects and increase productivity. The cameras and sensors monitoring the processes share data for real-time analysis to detect and resolve errors before they compound costs down the line.

To enable Industry 4.0 and agile factories, manufacturers need sufficient, secured network coverage across the plant floor with high reliability, bandwidth, and low latency for the wireless devices to send high-resolution video for real-time analysis. AT&T MEC service can route designated cellular traffic through the local area network, allowing businesses to control how they want to process traffic for low-latency and security-sensitive use case solutions.

Healthcare

Many healthcare facilities, such as hospitals, are harnessing the power of virtual reality (VR) to help ease patient discomfort and assist in pain management. VR headsets are providing welcome relief to patients undergoing otherwise stressful or even painful procedures and recoveries. But, as this technology becomes more popular and the library of streamed virtual experiences grows, standard network architecture may not be able to support lifelike VR experiences.

AT&T MEC service can route cellular traffic through the local area network, helping to enable emerging use cases in healthcare such as imaging, patient monitoring, and high-speed, private transport of imaging data directly where it’s needed, with privacy and low latency.

Stadiums, Venues & Events

Stadiums and arenas need to provide the operators and their fan base with higher capacity, faster download speed, and lower latency while using 5G-enabled devices. Additional features that fans expect include step-by-step navigation to seating or instant sports statistics that mobile users could view by holding up their smartphones to the field. The stadium operators benefit by enabling applications like thermal imaging for fan safety, video analytics to manage wait times, digital payments (cashless transactions) for ordering food in stadiums, better response to public safety responders, and more.

Asset Tracking and Management

Many retailers use remote automated devices like inventory robots for real-time inventory tracking for restocking purposes. Transferring related high-resolution inventory images in near real-time has a very high impact on the performance of their existing network. AT&T MEC service enables them to route cellular traffic through the local area network via the in-building RAN system. This provides retailers an option to improve the processing for low-latency and security-sensitive applications.

Autonomous Machines

The number of drones in the airspace is expected to increase significantly in the coming years. Vorpal, an Israeli startup company, offers a drone detection and geolocation tracking solution that works in near real-time. The drone detection and monitoring technology can be used across vertical industries such as law enforcement, airports, and energy facilities. In the future, it can also be part of the infrastructure for commercial drone operations for safely flying drones in urban environments.

AT&T Network Edge, in partnership with Microsoft compute, provides the low-latency and high-throughput infrastructure required for near real-time drone detection and monitoring.

Analytics

Retailers are deploying new technologies and applications to provide their customers with personalized experiences. These solutions enable retailers to gather and analyze data each time customers interact with their store. By combining this information with historical customer data, such as purchase history and products viewed on the website, retailers can predict what a customer is most likely to purchase and thereby personalize the shopping experience and increase sales.

To collect and process the information during the customer’s shopping trip to the stores, retailers need an edge-to-edge approach to process the data onsite, in near real-time.

Traditional off-site data processing will not be able to support the high bandwidth and low latency required for the personalized shopping experience. AT&T’s Network Edge service enables retailers to process and analyze data in-store, in near real-time, for an enhanced personalized shopping experience. It places the processing and storage capabilities at the edge of the network, which results in significantly faster processing times and less consumption of bandwidth. It also enhances reliability because the infrastructure is not accessing an offsite data center for each transmission.

Interactive Live Video

AT&T with Azure Edge Zones enables edge computing so that JamKazam can provide a platform to help musicians play together live and in sync, using AT&T 5G from different locations with high-quality audio and video. See related Video in 5G Magazine.

Interactive Live Video | Holovision

AT&T created a first-of-its-kind 5G-enabled holo interview with ESPN and TNT for their broadcast coverage of the NBA 2020 Eastern and Western Conference Finals, demonstrating the future of sports broadcasting inside the NBA campus, using 5G & Edge solutions.

AT&T turned a ballroom into a holo interview studio. Using 5G technology, AT&T created the space for first-of-their-kind interviews between Orlando and another city. A projector and scrim showed the person in Orlando the interviewer in real time, with lifelike clarity and little lag. They interacted naturally, as if they were together in the same location, though they were hundreds of miles away from each other. See related Video in 5G Magazine.

AT&T Edge Partnerships

IBM Cloud

AT&T and IBM have a deep relationship and a years-long history of collaboration. Recently, AT&T and IBM have collaborated to bring the use of 5G wireless networking and edge computing to help enterprises across industries transform their operations to meet the needs of the 5G era.

IBM and AT&T have also collaborated to help enterprises consistently manage their applications hosted in hybrid cloud and edge environments using IBM Cloud Satellite and Red Hat OpenShift, over AT&T networks. IBM’s open hybrid cloud technologies provide the security and flexibility to move and manage applications across any environment – from data centers, to multiple clouds to the edge. Additionally, IBM’s AI technologies enable customers to deliver deeper insights in near real-time from data collected at the edge.

Through these collaborations, AT&T enables customers to gain capacity and speed as well as deploy dedicated 5G or private cellular connectivity at their site with privacy to support massive device connectivity. Also, AT&T’s private network at customer premises creates proximity, which delivers the power of the edge. AT&T’s core and radio access 5G network is designed from the start to be flexible and better suited for edge deployment.

Together, AT&T and IBM are bringing compute resources and services closer to where data is generated, improving the overall experience and end-to-end latency, which is critical for real-time applications and near real-time decision-making.

Microsoft Azure

AT&T has collaborated with Microsoft to enable new 5G, cloud, and edge computing solutions. When combined with AT&T Multi-Access Edge Compute, Azure brings a full compute platform to the customer premises.

This harnesses the power of cellular and cloud to work together efficiently within their local network. With the AT&T Network Edge, Microsoft Azure cloud services are connected to AT&T’s software-defined, virtualized 5G core (the Network Cloud). This helps enable developers to deploy low-latency applications on infrastructure at the network edge, improving customer experience. It would also enable 5G and edge applications for vertical industries such as manufacturing, retail, healthcare, finance, public safety, and entertainment.

The ANE technology is available now for a limited set of select customers to conduct testing with additional edge locations coming soon. Game Cloud Network, a leader in game and app development solutions, is one of the first companies to use AT&T-enabled ANE to give users optimized, low latency mobile connectivity. ANE allows Game Cloud Network to highlight its new multi-player and interactive “Tap&Field” mobile gaming experience, using Microsoft’s Azure and PlayFab services deployed at the AT&T network edge to provide players with near-real-time interaction enabled by the speed of 5G-connected devices.

Google Cloud

AT&T collaborated with Google Cloud to offer new solutions across AT&T’s 5G and Google Cloud’s edge computing portfolio, including AT&T’s on-premises Multi-access Edge Compute (MEC) solution, as well as AT&T Network Edge capabilities through LTE, 5G, and wireline.

AT&T Multi-access Edge Compute (MEC) with Google Cloud combines AT&T’s existing 5G and MEC offering with core Google Cloud capabilities, including Kubernetes, artificial intelligence (AI), machine learning (ML), data analytics, and a robust edge ISV ecosystem. With the solution, enterprises will be able to build and run modern applications close to their end-users, with the flexibility to manage data on-prem, in a customer’s data center, or in any cloud, along with greater control over data, improved security, lower latency, and higher bandwidth.

AT&T Network Edge (ANE) with Google Cloud will enable enterprises to deploy applications at Google edge points of presence (POPs) connected to AT&T’s 5G and fiber networks. In addition, it will provide a low-latency compute and storage environment for businesses to deliver faster, more seamless enterprise and customer experiences. As a part of the multi-year strategy, AT&T and Google Cloud will bring the solution to 15+ zones across major cities, starting with Chicago in 2021.

- March 23, 2024

With the advent of R16 standards, work on the other pillars of 5G, URLLC and massive IoT, is underway. To take full advantage of new mobile communications standards, mobile operators are moving from an evolved packet core (EPC) to 5GC. By combining 5G NR deployment in standalone (SA) mode with a virtualized network architecture, operators can efficiently use network slicing to allocate resources for different use cases.

As a result, mobile operators need to manage a massive number of devices and connections in support of service level agreements (SLAs) for both consumers and the industry.

Multi-access Edge Computing (MEC)

Multi-access Edge Computing (MEC) is a central piece in moving these areas forward. MEC delivers cloud computing capabilities and an IT service environment at the edge of the cellular network. It allows compute resources to be deployed closer to the edge of the network, in effect “transporting” cloud capabilities to the edge to overcome latency and network reliability issues.

MEC offloads client-compute demand given the higher available communication bandwidth from the client. It allows for lower demand on the network for predictable compute demands by placing them closer to the client. It can also be used to optimize the network function itself, because of its large distributed compute capability. MEC also allows for greater flexibility in applications. For example, applications can be architected to run in the client, on the edge, in the cloud, or split across multiple domains to optimize performance, power consumption, and other aspects.

MEC Testing Challenges

- Ensuring the right amount of hardware and software services are available to do the required tasks.

- Slices are instantiated & operate correctly to meet the slated SLAs – Slice assurance.

- How secure is the Edge? Given the MEC is a minimized data center co-hosting applications, vulnerabilities increase, thereby requiring constant tests against attacks & ensuring a strong perimeter defense.

- Private MEC SLA assurance – Dedicated Edge computing infrastructure and radio access network (RAN) that are installed on-premises to provide ultra-low latency to enterprises.

- Public MEC carrier gateway/hyperscaler performance & latency assurance – Public MEC brings the cloud computing resources to the edge of a cellular network, closer to where businesses and developers can use them.

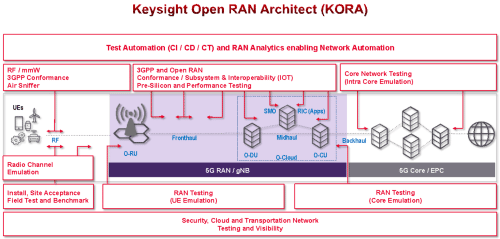

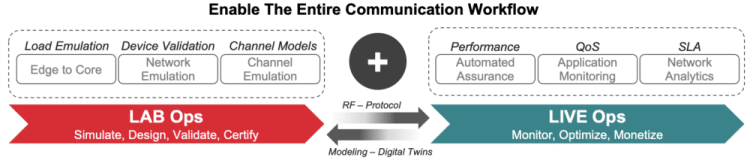

Keysight’s KORA (Keysight Open RAN Architect) Portfolio

Keysight’s KORA (Keysight Open RAN Architect) Portfolio ensures that MEC can be verified under different environments for different use-cases & handle the slated testing challenges just discussed. KORA is an integrated solution portfolio that enables a connected ecosystem to consistently validate the performance of the network from the edge of the RAN to the cloud.

- The ability to run this in a virtual & cloud environment – hosted as VMs on top of VMware or OpenShift and instantiated dynamically as CNFs (Docker or Kubernetes) on AWS Outposts or Google Anthos or Microsoft Azure Edge.

- With MEC, lines are blurred between RAN & Core, which is a sweet spot for the Edge to Core/Cloud portfolio from KORA. As an example – hosting UPF & CU-UP at the edge in addition to DU & other RAN components.

- Testing software simulates complex real-world subscriber models to enable mobile operators and network equipment manufacturers (NEMs) to qualify the performance and reliability of voice and data transferred from RAN over 5GC networks. Carrier-grade quality of service (QoS) benefits consumers accessing data-intensive applications, such as video and gaming, and businesses that rely on critical IoT applications in sectors such as automotive, manufacturing, energy, and utilities.

- KORA lends itself well to ensuring tests run in lab & live environments.

- KORA tests can be fully automated and work seamlessly with customer CI/CD pipelines with well-defined APIs.

- With Security & Observability becoming very important at the Edge, KORA extends to handle these aspects as well.

Transitioning from Lab to Live

With O-RAN, the need to validate functionality disaggregated across multiple entities, onboard new components, and continuously integrate newer code (CI/CD) is very real. Test cases built for lab setups need to be able to transition to a live/active environment. Constant validation of SLAs, measuring latency, QoE, and the slated level of service at an end-to-end level as well as at a slice level, is necessary.
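The kind of per-slice SLA validation described above can be sketched as a percentile check over latency samples. This is a generic illustration and not part of KORA: the function names, the slice labels, and the choice of the 95th percentile are assumptions made for the example.

```python
def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list (pct is an integer 1..100)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Integer ceiling of pct/100 * n avoids floating-point rounding issues.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


def check_slices(latencies_ms, sla_ms, pct=95):
    """Map each slice to True if its pct-th percentile latency meets the SLA."""
    return {
        slice_id: percentile(samples, pct) <= sla_ms[slice_id]
        for slice_id, samples in latencies_ms.items()
    }
```

The same check could run against lab captures and live probes alike, which is one way to carry a test case from lab to live without changing its pass criteria.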

5G networks require advanced capabilities to be verified including elastic scaling of network nodes, network slicing, and multi-access edge computing (MEC) when simulating the behavior of millions of UEs. To verify that wireless applications, using either 4G or 5G technology, fulfill the expectations of end-users, Keysight’s KORA allows mobile operators and network equipment manufacturers (NEMs) to validate both 5G and legacy radio access networks (RANs), as well as the 5GC.

- March 23, 2024

Q1: What is 5G Network Slicing? What is the impact of edge computing on 5G network slicing?

A: Hitendra Sonny | Kaloom – We see 5G network slicing as providing a mechanism to define a set of logical services. Those modular services share the same end-to-end infrastructure, including all the radios, the data plane, and all the control planes. If we dive a little bit deeper into the 3GPP specs, slices are usually defined by two IDs. The first ID is the SST (Slice/Service Type), which indicates the expected network slice behavior in terms of features and services. The second ID is the SD (Slice Differentiator), which is used to differentiate multiple network slices with the same behavior.

A: Amar Kapadia | Aarna Networks – Let me explore the relationship angle, the relationship between 5G, edge computing, and network slicing. If you look at the B2B space, 5G is the ideal network to connect end devices to edge computing applications. So that is where those two technologies match up. Before network slicing, a lot of industries would dedicate one workload to one network. With network slicing, you can have sub slices like Sonny mentioned, and each sub slice can have different service level agreements or attributes. That allows you to mix workloads onto the same network. So you can have end devices of different types and edge computing applications of different types going over the same network through network slicing.
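The two slice IDs mentioned above can be sketched as a small data type. In the 3GPP specifications the SST is an 8-bit value and the optional SD is a 24-bit value, and together they form the S-NSSAI. The class name and the hex rendering below are illustrative choices, not an API from any vendor.

```python
from dataclasses import dataclass
from typing import Optional

# Standardized SST values from 3GPP (subset).
STANDARD_SST = {1: "eMBB", 2: "URLLC", 3: "MIoT"}


@dataclass(frozen=True)
class SNssai:
    sst: int                  # Slice/Service Type, 8 bits
    sd: Optional[int] = None  # Slice Differentiator, 24 bits, optional

    def __post_init__(self):
        if not 0 <= self.sst <= 0xFF:
            raise ValueError("SST must fit in 8 bits")
        if self.sd is not None and not 0 <= self.sd <= 0xFFFFFF:
            raise ValueError("SD must fit in 24 bits")

    def to_hex(self) -> str:
        """Render as SST (2 hex digits) followed by SD (6 hex digits) if set."""
        sd_part = "" if self.sd is None else f"{self.sd:06x}"
        return f"{self.sst:02x}{sd_part}"
```

Two slices with the same SST but different SD values compare as different identifiers, which matches the role of the SD in telling apart slices with the same behavior.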

Q2: What are some use cases of network slicing with multi-access edge computing?

A: Amar Kapadia | Aarna Networks – The classical use cases, if you go by the standards, are three plus maybe one. They are: latency-sensitive, which is called uRLLC (ultra-reliable low latency communication); bandwidth-oriented, which is eMBB (enhanced mobile broadband); and IoT-centric, which is machine-to-machine type communication, or mMTC. I said three plus one because you also have slices with a security bent. A lot of the government-related work we are seeing requires extremely secure slices. So you can generally say that these are the four, and then you mix and match. That’s generally the universe of the types of use cases you’ll be supporting with slices.

A: Hitendra Sonny | Kaloom – Some specific use cases that we see from a market perspective include smart cities, smart homes, and smart factories. We’ve seen a significant amount of traction in Industry 4.0. Having said that, in all the cases, massive IoT or augmented and virtual reality comes up a lot. If I were to double-click on the massive IoT use case, factories are now going to be able to deploy the MEC host. So, think of the factory being able to take a MEC host on-site. Now, all IoT devices are there, and they obviously need to communicate with applications. They also need to communicate with each other, and there are literally thousands and thousands of elements in this network. So, in this case, how are you going to offer and guarantee low-latency communication? It becomes important that you keep all the traffic that needs to remain local with MEC. But, at the same time, you keep an eye on the significant amount of traffic that has to go further through the network for other requirements and other connectivity. We’re actually working with several partners on a blueprint that can be deployed for specific use cases, which we call a “stamp-it-out” approach. It’s not as easy as that, obviously, but this kind of reference architecture does and will help.

We also demonstrated this with a large telecom service provider, Telenor. Telenor plans to offer enterprise 5G or private 5G services. This demo includes eight different vendors, all running end-to-end on the containerized platform. We’re creating slices and demonstrating that each slice does not end up affecting the other slices. So they had not only multiple customers per slice, but even multiple slices per customer.

Q3: What are the benefits of network slicing with edge computing?

A: Hitendra Sonny | Kaloom – We demonstrated the benefits for two use cases, one for a defense customer and one for a broadcast radio customer. The requirements for the two use cases were different. Amar initially referred to ultra-reliable low latency (uRLLC), and that’s exactly what the requirement was for the defense use case, where any and all devices have to have ultra-low latency, and that has to be guaranteed and delivered. That just cannot be done with a one-size-fits-all approach. So they have to have a slice such that, no matter what, this guaranteed low latency will be delivered. The other customer had much more of a high data rate requirement. We needed to make sure that the application with high data requirements doesn’t creep into taking resources away from the common infrastructure and compromise the low latency requirement. And that’s exactly what we demonstrated together, where traffic is going up significantly on one side while the other side continues to deliver low latency without any impact whatsoever.

A: Amar Kapadia | Aarna Networks – One different use case that we are seeing is actually in healthcare. Of course, with 5G, you’ve heard about these crazy use cases like remote surgery. We don’t even have to talk about those; I think those are further out. But take the case where you have a doctor at a site who doesn’t have the expertise, and you want to pull in another doctor remotely over, say, augmented reality. Those use cases are quite doable these days with 5G and network slicing. And again, the slice guarantees a certain level of SLA, no matter what is going on in the other slices. So that’s really the value. If you want, let’s say, 15 milliseconds of latency for your remote doctor use case, then you’re guaranteed that no matter what’s going on with the rest of the network.
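The isolation property described in these answers can be illustrated with a simplified capacity model: each slice has a guaranteed share of a common resource, and only spare capacity is handed to slices that want more. This is an analogy expressed in capacity units and not how Kaloom or Aarna Networks implement slicing; the function name, slice labels, and numbers are assumptions for the example.

```python
def allocate(capacity, guaranteed, demand):
    """Grant each slice up to its guarantee, then share any spare capacity.

    Spare capacity is split in proportion to each slice's unmet demand, so a
    surge in one slice can never eat into another slice's guarantee.
    """
    if sum(guaranteed.values()) > capacity:
        raise ValueError("guarantees exceed total capacity")
    grant = {s: min(demand.get(s, 0), g) for s, g in guaranteed.items()}
    spare = capacity - sum(grant.values())
    unmet = {s: demand.get(s, 0) - grant[s] for s in grant}
    total_unmet = sum(unmet.values())
    if spare > 0 and total_unmet > 0:
        for s in grant:
            grant[s] += min(unmet[s], spare * unmet[s] / total_unmet)
    return grant
```

With a guarantee of 30 units for a low-latency slice and 50 for a high-rate slice on a 100-unit link, the low-latency slice receives everything it asks for (up to 30) however large the high-rate demand grows.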

Q4: What is the technical readiness of network slicing with related 5G and edge computing use cases, beyond the demos and PoCs?

A: Hitendra Sonny | Kaloom – Let me go back to a specific example. We talked about Telenor, where eight different vendors and eight different solutions were all working end to end on 5G, all in containers, under a control plane function, in a single pane of glass. The purpose was to show the readiness of these technologies and the marketplace. From our perspective, for that solution we delivered the networking portion, which is obviously a key part. We delivered the UPF, which you could call the workhorse of the 5G network, where every packet needs to go through it. That network, by the way, if you don’t mind me saying, actually won the global award for the innovative network of the year, or however they phrase it. I’m pretty proud of that. But the reality is that we are showing interoperability with multiple vendors. And then we have to go to the next part, operationalizing. So we’re out of the PoCs and the lab; we’re out in the field actually carrying customer traffic. And that’s where I want to end, by also bringing in the work we’re doing together on VCO 3.0. We’re doing a lot of work together to create an end-to-end multi-vendor blueprint where the customer benefits from all of the above: no vendor lock-in, better pricing, better agility, and the ability to innovate.

A: Amar Kapadia | Aarna Networks – Let me just add one more point on the readiness. I think the technology is ready. What we have found is that markets are never really gated by technology. It’s more the cultural and business model types of issues. And I think that’s sort of where we’re at right now. Specifically, Aarna Networks works on the management plane. So my perspective is network slicing from a management point of view. Can we create slices? Can we manage them? Can we manage the lifecycle, terminate them, optimize them? We’ve been working in the open-source communities with companies such as China Mobile, Wipro, and Tech Mahindra. The work has been going on for at least a year, maybe a year and a half, on network slicing management. The implementation that we have through the Linux Foundation is completely 3GPP aligned, and the management solution is ready. There’s always room for improvement; things like using machine learning to optimize slices are still pending. But all the nuts and bolts, the bread and butter stuff on network slicing, is ready. So from a technology readiness standpoint, I would echo what Sonny mentioned.

Q5: How does the Aarna Networks + Kaloom solution help with network slicing?

A: Hitendra Sonny | Kaloom – Speaking from Kaloom’s perspective, our initial go-to-market approach has been with service providers. We’re involved in very active conversations, way beyond demos and POCs, into field trials and some deployment conversations, including many active conversations with tier one operators. That’s where we have initially focused, that is, on the use cases they are all looking at from the mobile world and the enterprise world. The thing is, they’re focused on the enterprise side as well. Now, of course, there’s a cloud portion that’s always involved, so there is the termination or connection to the cloud, and then going all the way. As mentioned earlier, we are creating a reference architecture with multiple partners, with a focus on Industry 4.0. We’re providing programmable networking, fabric, and UPF. The UPF can further be sliced, with multiple UPFs in the same infrastructure, each UPF having its own slicing capabilities. It takes a village to deliver; it takes many partners. And one of the key requirements for deployment is the management and orchestration of network slices.

Q6: What is the role of the Aarna Networks Multi-Cluster Orchestration Platform in network slicing?

A: Amar Kapadia | Aarna Networks – Let me talk about our product, and then I’ll also talk a little bit more about the blueprint that Sonny has mentioned, VCO 3.0, and touch upon how the two companies are collaborating on network slicing. Our product is an open-source product called the Aarna Networks Multi-Cluster Orchestration Platform (AMCOP). It is effectively the management plane for both 5G networks and edge computing applications, because our philosophy is that you only have one edge, so you can’t have two management planes, one for networking and one for edge computing, fighting for resources. Our product does initial orchestration of 5G network services and edge computing applications, ongoing Lifecycle Management, Day 1 and 2 configurations, and automated Service Assurance, which is nothing but fixing problems automatically via closed-loop automation. In addition, we do things like network slicing and machine learning-based analytics, for example, the non-real-time RIC (NONRTRIC) and the network data analytics function (NWDAF). We have about seven or eight active POCs going on, with a few going into production in the next quarter. We are working on a Linux Foundation networking blueprint, which used to be called VCO 3.0. The new name is the Cloud Native 5G Blueprint.

The Cloud Native 5G Blueprint is based on the idea that, as Sonny said, it takes a village to do this. It’s a multi-party collaboration. So we have companies like Kaloom and Aarna Networks, for sure. But we also have Red Hat, Capgemini Engineering, A10, Intel, GenXComm, and Turnim. What we are doing is creating a blueprint that initially is for private 5G networks over CBRS, and we are building an end-to-end 5G network. In addition to that, we are also doing core slicing. Ultimately, we will extend it to RAN slicing and transport slicing as well, to do end-to-end slicing. But for now, the focus is core slicing, where we collaborated using our product AMCOP. The core is a mix of the Capgemini Engineering 5G core with the Kaloom UPF. We have successfully done that, and now we’re doing test and integration on that implementation.

A: Hitendra Sonny | Kaloom – In this context, Kaloom basically provides the NETCONF or YANG-based model upstream. Aarna, and other partners that Aarna works with, can all take from that northbound interface. As you said, management and orchestration is a huge piece. It’s a humongous deal, right? So there may even be further layers northbound to deliver end to end. But the end result is that these are all open, industry-based standards, and we are all working together.
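The closed-loop automation mentioned in this answer (observe, compare against a policy, act automatically) can be sketched in a few lines. This is a generic illustration and not AMCOP code: the metric names, thresholds, and the `remediate` callback are all hypothetical.

```python
from typing import Callable, Dict, List


def closed_loop_step(
    metrics: Dict[str, float],
    thresholds: Dict[str, float],
    remediate: Callable[[str], None],
) -> List[str]:
    """Run one pass of the loop: find violated metrics and act on each."""
    violated = [
        name
        for name, value in metrics.items()
        if name in thresholds and value > thresholds[name]
    ]
    for name in violated:
        remediate(name)  # e.g. scale out or reconfigure a network function
    return violated
```

In practice such a step would run repeatedly against fresh telemetry, so that a remediation is followed by another measurement to confirm the problem is gone.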

Q7: From a roadmap perspective, beyond core slicing – what is being planned?

A: Amar Kapadia | Aarna Networks – Yes, so I can maybe cover the management part. And then I’ll hand it over to Sonny for the full picture. On the network slicing management in terms of what’s there in the future, I think the future is extremely interesting in terms of network slice optimization. And that’s where we will introduce the fourth technology. So you mentioned 5G, you mentioned edge computing, you mentioned slicing. We will introduce machine learning. So machine learning will be able to see the usage and the utilization of the slice. And in real-time, make optimizations. So that’s the next big thing from our standpoint is how to optimize the slices because all the bread and butter stuff has been done creating slices, terminating them, configuring them — all done. We are also looking forward to actually getting this into many, many customers. We have trials going on, hopefully going into production by early next year. But next year, we hope that slicing will proliferate. So those are the two things I’m looking forward to.

Q8: Is the solution flexible to replace one vendor technology with other? And How?

A: Amar Kapadia | Aarna Networks – In theory, it should be plug and play. If you follow the 3GPP standards for the 5G core, the O-RAN standards for your RAN, similar 3GPP and O-RAN standards for slicing, and IETF standards on the transport side, it should be plug and play. But there is no such thing as plug and play in real life. Nobody complies with the standards perfectly. So what you end up having to do is make adjustments. From our standpoint, the adjustments can be made on either end. Either the network function side adjusts, so the 5G core or the RAN makes tweaks to be better aligned with the standards, or, since our offering is a platform, we make adjustments and adapt to what the network function offers. You have different standards for each area: for orchestration, it may be Helm charts; for configuration, it may be YANG/NETCONF or REST APIs; for data collection and analytics, it might be VES, HV-VES, and other formats.

Maybe more specifically on slicing, there is a component called the NSSMF, the network slice subnet management function. The NSSMF is the one that interfaces between the network slice management stack and the network function. Through the LF, we are leveraging standards-compliant NSSMFs for core, RAN, and transport. If the network function is completely standards-compliant, great. If not, we develop a custom NSSMF to adapt to the different vendors.
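To make the adapter idea concrete, here is a minimal sketch of how a management stack could call one common NSSMF interface while a custom NSSMF translates for a non-compliant vendor. Everything in it is hypothetical: the class names, the `SliceSubnetRequest` fields, and the "Vendor X" payload format are illustrative assumptions, not AMCOP code and not a 3GPP schema.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class SliceSubnetRequest:
    """Hypothetical, simplified slice-subnet request (not a 3GPP schema)."""
    slice_id: str
    max_latency_ms: int
    guaranteed_mbps: int


class Nssmf(ABC):
    """Common interface the slice management stack calls for every vendor."""

    @abstractmethod
    def allocate(self, request: SliceSubnetRequest) -> dict:
        """Return the payload that would be sent to the network function."""


class StandardCoreNssmf(Nssmf):
    """Used when the network function accepts the (assumed) standard payload."""

    def allocate(self, request: SliceSubnetRequest) -> dict:
        return {
            "sliceId": request.slice_id,
            "latencyMs": request.max_latency_ms,
            "bandwidthMbps": request.guaranteed_mbps,
        }


class VendorXCoreNssmf(Nssmf):
    """Custom adapter for an imaginary vendor with other field names and units."""

    def allocate(self, request: SliceSubnetRequest) -> dict:
        return {
            "slice": request.slice_id,
            "latency_us": request.max_latency_ms * 1000,  # ms -> microseconds
            "rate_kbps": request.guaranteed_mbps * 1000,  # Mbps -> kbps
        }


def pick_nssmf(standards_compliant: bool) -> Nssmf:
    """Choose the standard NSSMF when possible, the custom adapter otherwise."""
    return StandardCoreNssmf() if standards_compliant else VendorXCoreNssmf()
```

The management stack only ever sees `Nssmf.allocate`, so swapping a vendor means swapping the adapter rather than changing the slice management logic.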

A: Hitendra Sonny | Kaloom – As Amar said that things don’t work plug and play, but it’s not as bad as plug and pray either. I’ll give two examples. In the context of the UPF that we provide, we work with AMF and SMF provided by large incumbent vendors or some upstarts. Initially, it took a long time with everybody’s implementation. But we’ve watched over time, and it gets easier and easier. Similar thing on the orchestration side. When our first customer asked us to do orchestration with one specific tool that they had chosen – it took us about six weeks to work together. Subsequently, we’ve done with three or four vendors. And we’ve got it down to days now. From the technology side – network slicing has to scale at a very high level, both from a bandwidth perspective and from a subscriber number perspective. It also has to maintain the latency. But the last one that many people forget about and we talk a lot about is SASE – that is security is going to be kept in mind 100% as well. We do it in the hardware on P4 enabled hardware and fabrics that we use that bring some amount of security inherent into there.

Summary

A: Hitendra Sonny | Kaloom – If we look at the customers from this perspective, enterprise or service provider, the end game is that you’ve got to be able to offer differentiated services: services for which you would charge if you’re a service provider, or services whose performance you could guarantee to your internal BUs (business units) or external customers. To us, when you put together the many things that Amar and I discussed, the ability to bring differentiated services is the huge benefit. So I think that’s the name of the game. And that’s the exciting part, both for the enterprise and service provider customers. That’s the end benefit.

A: Amar Kapadia | Aarna Networks – Ultimately, all this technology has to serve a purpose. And what is that purpose? For a service provider, it’s to increase the potential revenue. We all know that the average revenue per user, ARPU, is only going down. So how can we expect the service providers to roll out such a CAPEX-intensive 5G network? It’s through techniques such as this. Edge computing applications and network slicing are the ways they can make more revenue. When it comes to the enterprise, it’s not so much about increasing the ARPU. It’s about cutting OPEX. So how do you cut OPEX for your environment? How do you improve your customer satisfaction? How do you roll out new services faster? I think that’s ultimately the promise of network slicing.

- March 16, 2024

The edge promises and security needs

Transformational edge applications require efficient data processing near the source, low-latency, high-bandwidth connectivity, and highly scalable distributed systems. Smart IoT sensors, 5G+ wireless, AI, Cloud, Microservices, Kubernetes, and MLOps are the key enablers of complex edge solutions. Edge applications such as Autonomous Cars, Integrated Smart Factory, Smart Patient Monitoring, Smart Grid, Precision Farming, and Remote Monitoring of Oil & Gas Drilling promise tremendous value to businesses in terms of cost optimization, revenue growth, and innovation. Business and IT leaders focus on applications affecting business operations, and securing them remains their top priority. The recent trend of sophisticated ransomware attacks that try to take control of operations has heightened their concerns. It is not uncommon to see topics such as cybersecurity and edge security being discussed at the board level. As a result, security has become the backbone of 5G+ edge applications, while securing them poses a true challenge for software and hardware technologists.

Complexities in edge application architecture

Edge applications are distributed across three application layers: Enterprise Cloud, Core Edge, and Far Edge. The three-layer architecture enables edge applications to run at scale, stably, and securely. The Enterprise Cloud layer consists of master services that handle the data store, analytics, AI models, network, security, and application management. Far Edge node services process data received from hundreds of IoT devices in remote locations and perform real-time analytics at the edge. Core Edge acts as a regional application center that combines many Far Edge services and coordinates the actions to be executed. The edge application is decomposed into loosely coupled microservices and deployed onto Kubernetes clusters. The Security Services manage security requirements for data, network, edge, and application. All the distributed services must be well managed and coordinated to work in synchrony, be fault-tolerant, and run at scale. See 5G Magazine for Figure 1, the 5G+ Edge application landscape.

Security challenges in edge application

While stability, scalability, and security are the key pillars of 5G+ edge business applications, stability may be addressed through efficient DevOps, reliable infrastructures, and high availability systems. Scalability may be addressed by over-sizing the resources or by having spare clusters on hot stand-by. Security on the other hand cannot be substituted by alternate approaches. With multiple application layers, distributed microservices, hardware-software integrations, and processing of sensitive data outside of the IT centers, edge applications open up multiple points of “security vulnerability”. The following list highlights the key aspects to be considered in securing 5G+ edge applications:

- IoT sensors must be protected physically and digitally

- The network, 5G+ wireless must be secured for access, interruptions, and attacks

- The data must be secured during transit and at rest

- The messages must be secured against interception and distortions

- The AI models must be secured from access and updates

- The application services must be secured against unauthorized access, interruptions, and malware
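As one small illustration of the "distortions" item in the list above, the sketch below attaches an HMAC-SHA256 tag to each sensor message so that a receiver can detect tampering. It uses only the Python standard library. The shared key, the message layout, and the function names are assumptions made for the example. Note that an HMAC protects integrity only; protecting against interception additionally needs encryption in transit, such as TLS.

```python
import hashlib
import hmac
import json


def sign_message(key: bytes, payload: dict) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True)
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(
        key, message["body"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, message["tag"])
```

A receiver would call `verify_message` before acting on a reading and discard any message whose tag does not match. Key distribution and rotation, which matter a great deal in practice, are out of scope for this sketch.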

See 5G Magazine, for the table providing indicative representation of security threats and risk levels across the edge application layers. For the overall application efficiency, security functions must be optimized based on the security threats and the assessed risk level across the application layers including the edge devices.

The way forward in securing 5G+ edge application

Security must be built from the core and integrated end-to-end across the edge application. Enterprise solutions experts strongly recommend a thorough approach in securing the key elements of edge applications.

Securing IoT Devices: Millions of IoT sensors in remote locations generate large amounts of sensitive data required for the application. Smart sensors have processing capabilities that must be protected against malware and trojan injections. Advanced edge network attacks can inject fake nodes to cipher messages and take control of assets. Edge devices must be protected from physical tampering, circuit modifications, and isolation. Security defense logic can leverage machine learning models to detect hardware trojans and camouflaged edge nodes. Device manufacturers are offering smart sensors with embedded security and with the capability to live-update the firmware and security features to address evolving threats.

Securing Network & 5G+ wireless: Edge networks are prone to “routing attacks” at the communication layer that affect latency and throughput. Distributed denial of service (DDoS) attacks can overwhelm the edge network and cause the nodes to malfunction. 5G+ wireless opens up additional security vulnerabilities. Securing a network starts with fundamental definitions of policies and processes to prevent unauthorized access, modifications, and interruptions. Cryptographic protocols such as TLS secure the messages across the network. By analyzing threat patterns in the core and edge network, AI models can be developed to detect and prevent attacks before they happen. Telcos are investing to enhance the security features in their offerings through IMSI encryption, SDN, MG 3GPP, and NFV.

Securing Systems & Software: Systems and software must be protected at both the hardware and the operating system level. Edge servers have built-in security features that augment the security of the operating system. The network gateways must be secured from physical and network access. Data stored in the systems, insights developed through processing the data, AI models, and services must be protected against unauthorized access and hacking. Application code and policies must be validated using AI-infused vulnerability checks. Cybersecurity and storage companies are rapidly developing advanced data encryption and security tools tailored for edge applications.

Security innovations on the horizon

Modern-day security threats are highly dynamic and unpredictable. Each day, cybercriminals are getting smarter and more sophisticated. Defense strategies must stay one step ahead to effectively counter the constantly evolving threats. Edge application architecture allows for the distribution of security updates. The security detection and deterrent services must auto-learn over time and initiate alarm or shutdown triggers to handle breaches such as ransomware that threaten business outages. In recent years, security threat detection and event management solutions have raised the protection level significantly by using AI techniques. Competing cybersecurity and cloud vendors are teaming up to combat the ever-increasing threats from malware and ransomware. Innovative techniques should enable continuous learning of threat patterns, and automated updates of protection logic should become a standard feature. Quantum-safe encryption and quantum-safe networks are promising solutions suited for edge applications.

Summary

In the coming years, 5G+ edge applications will accelerate digital transformation and drive topline and bottom-line improvements for businesses across many industries. Security must be incorporated at the application core and integrated across the edge solution. Security services should use modern AI techniques and support dynamic updates to defend against rapidly evolving, sophisticated attacks. It is also promising to note that software and hardware vendors are investing heavily to embed security as an integral feature of their offerings. Through further innovations using AI and quantum technologies, we can expect a “team of virtual smart edge sentinels” that will help secure business-critical 5G+ edge applications.

- March 20, 2024

The Solidity of Edge Computing

Undoubtedly, with the faster proliferation of multifaceted IoT sensors and devices in and around us, the prospects for the newer phenomenon of edge computing brighten significantly. Resource-constrained IoT devices are termed edge devices, whereas resource-intensive IoT devices are being touted as edge servers. Such a distinction is necessary for gaining a deeper understanding of IoT systems and environments. The industry has been working with cloud computing for a long time. Now, with IoT edge devices being stuffed with more memory and storage capacity and extra processing capability, the real edge era is set to begin with clarity and confidence. Powerful processor architectures are emerging to empower IoT devices to be productive, participative, and cognitive. IoT devices are increasingly self-, surroundings-, and situation-aware, yet they are slim and sleek, handy and trendy. Through a bevy of advancements, edge devices in our everyday environments (homes, hospitals, retail stores, manufacturing floors, eating joints, nuclear establishments, entertainment plazas, railway stations, ports, etc.) are being strengthened to join mainstream computing, and thereby the idea of edge computing has started to flourish.

Edge-native Applications

With the projected billions of IoT devices and trillions of IoT sensors tending towards reality, there is a possibility of producing a massive amount of multi-structured IoT data through their voluntary collaborations, correlations, and corroborations. With such exponential growth in IoT data size, traditional cloud processing is bound to face a number of hitches and hurdles. The way forward is to filter out repetitive and routine data at the source itself. Such an arrangement goes a long way in saving precious network bandwidth. Securing data in transit and at rest is another challenge widely associated with conventional computing. The security of IoT data improves with edge computing, as there is no need to send every bit of data to faraway cloud storage and analytics over the porous, open, and public Internet, which is the prominent and affordable communication infrastructure for the IoT era. Above all, real-time data capture, storage, processing, analytics, knowledge discovery and dissemination, and actuation can be accomplished through edge computing. What edge computing brings to the table is the local storage and proximate processing of edge data. Such a paradigm shift brings in a dazzling array of sophisticated edge applications. The quantity and quality of edge applications are the key motivation for the success of edge computing. The realization of elusive context-aware applications is speeded up by widespread IoT device deployments in crowded and mission-critical environments. Multi-device applications, which are typically process-aware and business-critical, are bound to see the light. Numerous business, technical, and use cases are being articulated and accentuated through the unprecedented adoption of edge computing. In the subsequent sections, we illustrate edge-native applications.
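Filtering routine data at the source can be as simple as a deadband (report-by-exception) filter, which forwards a reading only when it differs enough from the last forwarded value. The sketch below is one assumed way to do this; the function name and the threshold are illustrative choices, not something prescribed by the article.

```python
from typing import Iterable, Iterator, Optional


def deadband_filter(readings: Iterable[float], threshold: float) -> Iterator[float]:
    """Yield only readings that moved at least `threshold` from the last sent value."""
    last_sent: Optional[float] = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) >= threshold:
            last_sent = value
            yield value  # this reading would be forwarded to the cloud


# Example: six temperature samples, only significant changes are forwarded.
samples = [20.0, 20.1, 20.2, 21.0, 21.1, 19.9]
forwarded = list(deadband_filter(samples, threshold=0.5))
print(forwarded)  # [20.0, 21.0, 19.9]
```

Here half of the samples never leave the device, which is the bandwidth saving the paragraph above describes. A real deployment would likely also send a periodic heartbeat so the cloud can tell a quiet sensor from a dead one.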

Edge Infrastructure Clouds

If we sum all the cloud servers of all the public cloud environments across the globe, the number of commodity cloud servers should be hovering around a few million. But it is projected that there will be 50 billion connected devices across the world in the years to come. Thus, the aggregate computational capability of edge devices could well exceed that of all the cloud servers added together. IoT edge devices gain the strength to find and interact with other edge devices in the vicinity to form ad hoc, temporary, dynamic, and purpose-specific clusters/clouds. Edge devices are increasingly integrated with cloud-based software applications (called cyber applications) to gain extra capability. Digital twins are also being formed and run in cloud environments for complex edge devices on the ground. Thus, edge integration, orchestration, and empowerment are being activated through a host of technological paradigms. Holistically speaking, edge clouds are formed to tackle bigger and better problems. Feature-rich applications can be realized through edge cloud formation. However, there are constraints and challenges in constructing edge device clouds. Devices are heterogeneous and large in number. As widely accepted, multiplicity and heterogeneity lead to unfathomable complexity. Hence there is a clarion call for complexity-mitigation techniques and tools.

Edge Platforms

The above-mentioned infrastructure complexity is being smartly delegated to competent platform solutions. We have container runtimes for software portability, a state-of-the-art hybrid of microservices architecture and event-driven architecture, DevOps toolkits for frequent and speedy software deployments, and container orchestration platforms such as Kubernetes. Further on, there are shrunken versions of Kubernetes to be deployed on edge servers. Kubernetes is being positioned as the one-stop IT platform solution for forming edge device clouds. Devices expose their unique services to the outside world through service APIs. Messaging middleware solutions are enabling automated and event-driven device interactions. The large-scale adoption of Kubernetes is accelerating the formation and sustenance of edge device clouds across industrial, commercial, and official environments. Process industries get immense benefits out of containerized and Kubernetes-managed edge clouds. Industry 4.0 applications are being facilitated through the power of Kubernetes. Edge-native applications are built from the ground up with the edge in mind, and they intrinsically take advantage of the unique capabilities and characteristics of the edge. Kubernetes plays a vital role in shaping edge-native apps.

Edge Analytics

With path-breaking edge platforms and infrastructures in place, the idea of edge data analytics picks up fast. The noteworthy factor is that edge analytics fulfills the long-standing goal of producing real-time insights. Real-time intelligence is mandated to build next-generation real-time services and applications, which, in turn, contribute to the establishment of real-time intelligent enterprises. There are fast and streaming data analytics platforms, specifically prepared for deployment on edge servers, which cleanse and crunch edge device data to emit actionable insights in time. Edge devices generally collect and transmit edge data. There are sensors, CCTV cameras, robots, drones, consumer electronics, information appliances, medical instruments, defense equipment, etc., in various physical environments to minutely monitor, measure, and manage physical, informational, commercial, temporal, and spatial requirements proactively and unobtrusively. The growing number of IoT devices produces and streams a lot of data at high speed. Low-latency applications such as video surveillance, augmented reality, and autonomous vehicles demand real-time analysis to discover and disseminate real-time knowledge. There are research contributions such as real-time video stream analytics using edge-enhanced clouds.

Edge AI

Edge AI is gaining immense traction these days. There are AI-specific processing elements (graphics processing unit (GPU), tensor processing unit (TPU), and vision processing unit (VPU)) to accelerate and augment data processing at the edge. There are concerted efforts by many researchers to bring tiny machine learning (ML) processing to fruition at the edge. There are several lightweight frameworks and libraries to smoothly run machine learning and deep learning algorithms at the edge. Classification, regression, clustering, association, and prediction requirements are being handled at the edge. AI processing at the edge is the key differentiator for ushering in smarter applications such as anomaly/outlier detection, computer vision / face recognition, natural language processing (NLP) / speech recognition, image segmentation, etc. With all-around advancements in the fields of AI and edge computing, edge AI is turning out to be an inspiring domain of study and implementation.
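To give a feel for anomaly/outlier detection on a resource-constrained device, the sketch below flags a reading whose z-score against a small rolling window exceeds a threshold. This is a plain statistical stand-in, far simpler than the ML and deep learning models the section has in mind; the class name, window size, warm-up length, and threshold are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag readings that lie far from the mean of a recent window."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, warmup: int = 5):
        self.values = deque(maxlen=window)  # bounded memory, suits small devices
        self.z_threshold = z_threshold
        self.warmup = warmup

    def is_anomaly(self, value: float) -> bool:
        anomalous = False
        if len(self.values) >= self.warmup:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Only normal readings update the baseline, so outliers do not skew it.
            self.values.append(value)
        return anomalous


detector = RollingAnomalyDetector(window=10)
stream = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 25.0, 10.1]
flags = [detector.is_anomaly(v) for v in stream]
print(flags)
```

In this run only the reading of 25.0 is flagged. Because the window is bounded and the arithmetic is trivial, the same idea fits on hardware far smaller than a GPU-equipped edge server.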

Cloud-native Edge Computing

The cloud-native paradigm has brought deep and decisive automation to software engineering, including software deployment. Cloud-native applications are being designed, developed, delivered, and deployed across cloud environments (private, public, and hybrid). Highly modular and modern applications are being derived by applying cloud-native principles. Further on, cloud-native applications are highly available, scalable, extensible, and reliable. In particular, the non-functional requirements of applications are fulfilled through the unique cloud-native competencies. Now, this successful computing paradigm is being tried in building and releasing event-driven, service-oriented, and people-centric edge applications. The complexities of edge-native application engineering are being reduced through the skillful application of the proven cloud-native model. In short, edge computing is getting simplified through the power of cloud-native computing. Cloud-native applications aim to maximize resilience through predictable behaviors. A cloud-native microservices architecture allows for automated scalability, which reduces downtime due to errors, and for rapid recovery in the event of application or infrastructure failure. Automated updates help keep software secure and lower the risk of each release. Traditional embedded applications are OS-dependent, which makes migrating and scaling applications across new infrastructures complex and risky. There are lightweight Kubernetes implementations such as KubeEdge (https://kubeedge.io/en/), K3s (https://k3s.io/), and MicroK8s (https://microk8s.io/) for enabling edge device clouds.

Serverless Edge Computing

Serverless eliminates infrastructure maintenance tasks by shifting operational responsibilities to a cloud or edge vendor. Serverless has been popular in the traditional cloud world. Now, with Kubernetes, containers, microservices architecture (MSA), and event-driven architecture (EDA) at the edge, the pioneering serverless principles are being applied to bring the same benefits to the edge. Serverless in a centralized cloud suffers from slow cold starts, among a few other drawbacks. The edge cloud is being presented as a way to remedy these challenges. A serverless environment that is embedded within an edge cloud provides scale and reliability. Serverless edge delivers distributed compute power and processes data where it is created.

The Combination of 5G and Edge Computing

For ultra-low latency and ultra-high reliability, 5G communication comes to the rescue. On the other side, towards the last-mile connectivity, edge clouds help 5G communication. Their association surmounts the limitations of each other so that the real digital transformation empowers business and people transformation.

Conclusion

The fusion of edge computing and 5G communication is set to result in a series of versatile and resilient applications for businesses and consumers. The unique blend is to open up fresh possibilities and opportunities. There are plenty of product and platform providers and start-ups leveraging their combined capabilities to do justice to the ensuing digital era. There will be rich interactions between enterprise, embedded, and cloud systems to visualize and realize cognitive applications. The transition from business IT to people IT gets exemplified through this harmonious linkage.

- March 16, 2024

Perspective on 5G and Edge Computing

Applications are increasingly going to process more data and make decisions closer to the edge for better real-time user experience, compliance, etc. Gartner estimates that by 2025, 75% of enterprise-generated data will be outside of a central data center or cloud. Use cases across verticals need geo-distributed application architectures – autonomous vehicles, digital healthcare, smart retail, smart cities, and industrial automation are examples. 5G is an important enabler for edge computing. In addition, a confluence of factors is coming together that will enable edge (geo-distributed) computing.

- Network speeds – 5G offers 10x-20x higher speeds (multi-Gbps) and lower latencies when compared to 4G with improved reliability. This enables a better experience for distributed applications.

- Compute costs – Specialized hardware such as GPUs, TPUs have now become more affordable to be used at the edge. So one could envision offloading a compute-intensive task such as ML closer to where data sources are.

- Distributed cloud and datacenter footprint – The large cloud providers themselves are expanding their footprint. In addition, companies such as Equinix Metal, Vapor IO, Cox Edge are building micro data centers and services.

- Modern application architectures that lend well to distribution – Many enterprises have focused on adopting microservices-based application architecture, where components are loosely coupled but tightly connected. This framework will help in distributing applications.

What are the challenges for edge computing?

The key motivation of edge computing is to offload compute from the cloud to edge to process data closer to the source and make real-time decisions. So based on the use case and application, the edge could just have an ephemeral lambda function or in many cases a long-lasting footprint to run ML workloads, analytics, and even a local database, storage for compliance and efficiency purposes. The key challenges with edge computing include:

- Heterogeneity and scale – Unlike a cloud environment, edge environments exhibit heterogeneity w.r.t infrastructure capabilities, footprint, and providers, and there can be multiple locations. So applications need to be developed so they can be deployed in such heterogeneous environments.

- Connectivity and security – One of the consequences of heterogeneity is connectivity and security challenges. Edge locations can be connected either with wired or wireless networks or both. Depending on the provider and capabilities, network services such as VPN, firewall, load balancer, etc. could differ at each location. With the application footprint now spread across locations, the security exposure and attack vector increases too. Service chaining could be potentially used, but that increases the complexity with multiple locations – especially when it needs to be done reliably in an automated way.

- Data – There can be multiple data sources such as IoT sensors or servers and applications themselves. Paradigms for data collection, representation, and abstraction, secure streaming, and exchange between different services are required for distributed decision making and to have a feedback loop across the application span so the application can be adaptive.

- Observability and Resiliency – Fault isolation for such a geo-distributed application can be hard, especially if done manually. Rich real-time observability is needed from infrastructure to application across locations along with a feedback mechanism that can be automated to reduce error and build resiliency.

- Dynamism – The edge can sometimes be mobile or the data/users being serviced can be mobile. So optimization mechanisms need to be in place to dynamically move the workloads from one location to other based on cost, latency, bandwidth, proximity, etc.
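The "Dynamism" point can be pictured as a placement decision that is re-evaluated whenever conditions change. The following is a minimal sketch under stated assumptions: the `Site` fields, the example figures, and the policy (meet latency and bandwidth constraints first, then pick the cheapest site) are hypothetical and are only one of many reasonable policies.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    """Hypothetical description of a candidate location for a workload."""
    name: str
    latency_ms: float       # measured latency from users to this site
    cost_per_hour: float    # illustrative price for the required capacity
    bandwidth_mbps: float   # available bandwidth to the data source


def choose_site(
    sites: List[Site], max_latency_ms: float, min_bandwidth_mbps: float
) -> Optional[Site]:
    """Pick the cheapest site that satisfies the latency and bandwidth limits."""
    eligible = [
        s for s in sites
        if s.latency_ms <= max_latency_ms and s.bandwidth_mbps >= min_bandwidth_mbps
    ]
    if not eligible:
        return None  # caller decides whether to relax constraints or alert
    # Cheapest first; latency breaks ties between equally priced sites.
    return min(eligible, key=lambda s: (s.cost_per_hour, s.latency_ms))


sites = [
    Site("far-edge", latency_ms=5, cost_per_hour=0.9, bandwidth_mbps=200),
    Site("metro-edge", latency_ms=15, cost_per_hour=0.4, bandwidth_mbps=1000),
    Site("regional-cloud", latency_ms=80, cost_per_hour=0.1, bandwidth_mbps=10000),
]
print(choose_site(sites, max_latency_ms=20, min_bandwidth_mbps=100).name)  # metro-edge
```

Tightening the latency limit to 10 ms moves the same workload to the far edge, which is the kind of automated relocation the bullet describes. A production scheduler would also weigh migration cost and data gravity.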

The last decade has been about digital transformation and cloud adoption. If going to a single cloud was hard, distributing applications across multiple locations is harder – but inevitable to be on the cutting-edge.

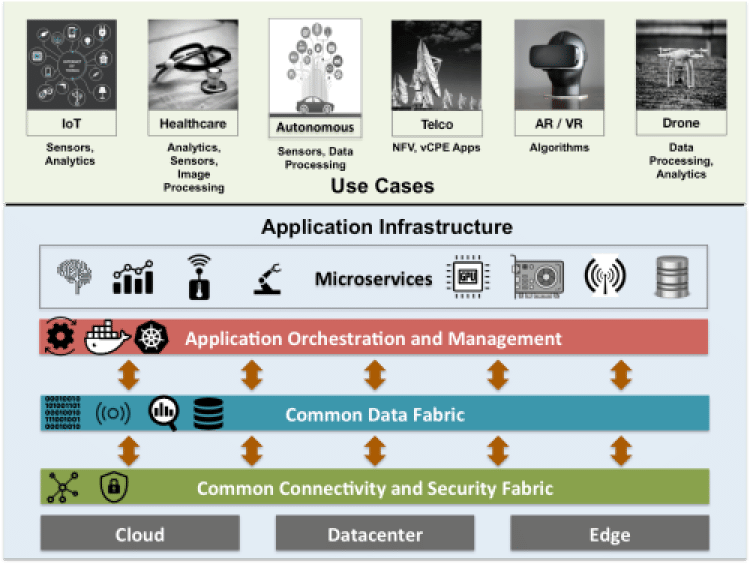

How to build the application environment?

It is important to build an application environment that is easily portable across locations, vendors, and providers and one that can scale well. See the picture in the 5G Magazine for a high-level approach. It has three key layers.

- Common connectivity and security fabric – This is the key layer that binds all the locations together. It has to abstract the underlying providers and vendors and provide a unified view for the geo-distributed application. It has to be able to make it simpler to discover and connect disparate entities such as VMs, docker containers, Kubernetes clusters, network and security services, etc.

- Common data fabric – This has to build on top of connectivity fabric and provide API-based access to application microservices for data collection, secure streaming, and exchange among different services that may or may not be co-located.

- Application orchestration and management – This has to enable application definition and deployment across locations. It has to provide a framework for observability, feedback, and policy-based mechanisms for resiliency, scalability, and lifecycle management.

Having such an application infrastructure framework will allow for the non-disruptive evolution of the application over time when new locations, providers, services, and components are added in due course.

Doesn’t open source offer the solution?

Open source has been a catalyst for innovation and democratized access. It offers multiple tools and technologies to build a distributed infrastructure. Open source also gives enterprises and their technical operations teams a sense of ownership and control (compared to dependence on a vendor’s solution). It becomes both a temptation and a necessity to weigh the merits of different choices for a given component before picking one. An example would be choosing a container networking or storage plugin. In both cases, multiple options exist, and it requires technical depth to make assessments. But as was evident in the edge computing challenges, a geo-distributed application infrastructure requires piecing together a complex puzzle that demands both breadth and depth of skills.

The complexity can be further appreciated in the context of security. Different components (containers, network, data message bus, application, etc.) have their own authentication and security frameworks. So managing a cohesive end-to-end security framework in such a distributed environment is quite complex. That’s not to say open source does not have the answer, but not every enterprise has the wherewithal for a DIY operation. Most modern businesses are digital businesses, and application delivery is central to their success. So making the right tradeoffs becomes important for successful business outcomes. A vendor-based solution that is open source/standards compliant may offer a practical approach for such enterprises. One of the reasons why things work well in a single cloud is that the cloud provider offers well-integrated core infrastructure (compute, storage, network, security, observability). The same cannot be said if an application straddles different cloud providers, and this becomes harder as one goes from cloud to the edge.

How about the cloud providers?

Cloud providers are building edge cloud services. For example, AWS offers Wavelength, Google has Anthos, and IBM has Edge Application Manager. Some have tie-ups with telcos to offer low-latency network connections between locations. This could work well for some customers and their applications and might be their preferred option, although the applications will be tethered to a particular cloud provider. The edge, however, offers more choices, with micro datacenter providers and vendors building smaller and specialized compute environments. Some customers may want to leverage best-of-breed environments that best meet their application and business needs, such as proximity to end-users, latency, capabilities, or cost. Such customers are better off exploring vendor-neutral solutions.

Does fledge.io offer a solution?

fledge.io has been built to address this challenge. fledge.io offers a single unified cloud experience across different clouds, data centers, and edges that is intuitive, easy to use, and based on open technologies. Our solution provides the core application infrastructure that includes geo-distributed application orchestration, consistent application connectivity with zero trust security, continuous observability, and telemetry-based data collection and streaming across cloud and edge. Essentially, fledge.io offers a public cloud-like experience for such geo-distributed applications and is a fully cloud provider / vendor-neutral solution. We partner with cloud and data center providers, and we will provide a seamless experience for customers as their applications straddle heterogeneous environments.

- March 16, 2024

As we move towards a society with ever-increasing connectivity and more data generated by things & people, organizations strive to find new ways to process, store and act on this acquired data. One of the essential elements of this ecosystem is cloud or on-premise networks, where most of the workload of any application is executed. One of the main reasons for the poor performance of any application is linked with the time taken by data packets moving back and forth between the user equipment and the compute area. From this point of view, the most significant difference in the experience of any application is created by latency. It is essential to define what we refer to when we say latency. Latency is a measurement of how much time it takes for a data packet to move from its point of origin to its destination point.
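To make the definition concrete, the following minimal sketch estimates just one component of latency, the propagation delay over fiber, as a function of distance. The constant of roughly 200,000 km/s for light in fiber is a common approximation, and the function names and distances are illustrative assumptions rather than figures from this article. Real latency also includes queuing, routing, and processing time.

```python
# Illustrative only: propagation delay is one component of end-to-end latency.
# Assumption: light travels through optical fiber at roughly 2.0e8 m/s
# (about two thirds of its speed in a vacuum).
SPEED_IN_FIBER_M_PER_S = 2.0e8


def one_way_propagation_ms(distance_km: float) -> float:
    """Return the one-way propagation delay in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    distance_m = distance_km * 1000.0
    return distance_m / SPEED_IN_FIBER_M_PER_S * 1000.0


def round_trip_ms(distance_km: float, processing_ms: float = 0.0) -> float:
    """Return the round-trip delay: out, back, plus time spent at the compute site."""
    return 2.0 * one_way_propagation_ms(distance_km) + processing_ms


if __name__ == "__main__":
    # Hypothetical distances: a far-away regional cloud versus a nearby edge site.
    for label, km in [("regional cloud", 1000.0), ("edge site", 10.0)]:
        print(f"{label}: {round_trip_ms(km):.2f} ms round trip (propagation only)")
```

Under these assumptions, moving the compute site from 1,000 km away to 10 km away cuts the propagation share of the round trip from about 10 ms to about 0.1 ms, which is why placement matters before any other optimization.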

The computing paradigms adopted by most public cloud providers are largely centralized. Cloud computing services that expand linearly cannot efficiently handle the massive data and computing tasks generated by an exponentially growing number of edge devices. They run into problems with real-time responsiveness, data accumulation, and bandwidth consumption. Edge computing can address this need by distributing workloads closer to digital interactions, allowing organizations to enhance customer experiences and harness growing data volumes for actionable insights. The increasing demand for edge computing has resulted in many new solutions from service providers.

However, these solutions often focus on a single component rather than the integrated technologies needed for edge computing. The value of edge computing comes from the data and technologies that adapt to evolving needs. Edge computing emerged as an application paradigm of the IoT to meet the needs of real-time operation, low latency, and high quality of service (QoS).

Edge computing relocates critical data processing functions from the center of a network to the edge, closer to where data is generated and delivered to end-users. While there are many reasons why this architecture makes sense for specific industries, the most apparent advantage of edge computing is its ability to combat latency. Effectively troubleshooting high latency can often mean the difference between losing customers and providing high-speed, responsive services that meet their needs.

In the new distributed computing paradigm, edge computing allows data to be stored and processed closer to edge devices and the edge cloud. Hence, edge computing helps shorten the response time of computing tasks, significantly reducing the pressure on network bandwidth and on cloud or on-premise locations, and improving service quality for users. Due to its superior performance in delay-sensitive applications, edge computing has become a crucial enabling technology in 5G.

An algorithm that can help offload and redistribute jobs between the edge cloud and the cloud center can create tremendous value in job execution. Such an algorithm could play a role similar to the one PageRank plays for Google search. The PageRank algorithm measures the importance of each node within a graph based on the number of incoming relationships and the importance of the corresponding source nodes. The underlying assumption, roughly speaking, is that a page is only as important as the pages that link to it.
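The PageRank idea described above can be sketched with a simple power iteration. This is a minimal illustration of PageRank itself, not of any vendor's workload-distribution algorithm; the article does not say how such an algorithm would be adapted to job offloading. The function name, the damping factor of 0.85, the iteration count, and the toy graph are all assumptions chosen for illustration.

```python
from typing import Dict, List


def pagerank(graph: Dict[str, List[str]], damping: float = 0.85,
             iterations: int = 50) -> Dict[str, float]:
    """Approximate PageRank scores for a directed graph by power iteration.

    `graph` maps each node to the list of nodes it links to.
    """
    # Collect every node, including ones that only appear as link targets.
    node_set = set(graph)
    for targets in graph.values():
        node_set.update(targets)
    nodes = sorted(node_set)
    n = len(nodes)
    if n == 0:
        return {}

    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes without outgoing links is spread evenly.
        dangling = sum(rank[node] for node in nodes if not graph.get(node))
        base = (1.0 - damping) / n + damping * dangling / n
        new_rank = {node: base for node in nodes}
        # Each node passes an equal share of its rank to the nodes it links to.
        for source in nodes:
            targets = graph.get(source, [])
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Toy graph: "a" and "b" both link to "c", and "c" links back to "a".
    toy = {"a": ["c"], "b": ["c"], "c": ["a"]}
    for node, score in sorted(pagerank(toy).items(), key=lambda kv: -kv[1]):
        print(f"{node}: {score:.3f}")
```

In the toy graph, "c" ranks highest because two nodes link to it, and "a" ranks above "b" because its single incoming link comes from the most important node. That is the "only as important as the pages that link to it" intuition in miniature.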

The growth of enterprise use cases is inevitably linked with the success of methods and techniques in the edge computing ecosystem. At one end, public cloud companies like AWS and GCP are slowly expanding their edge compute network through partnerships with telecom service providers.

Companies like 5GVector are building such algorithms which can help enterprises to distribute application workloads. Similarly, another innovative company in the Asia region is Nife. Nife is developing an application distribution platform that allows developers to seamlessly deploy applications near end-users directly via the Nife platform or any cloud service provider. Nife executes these applications close to end-users through a mesh of globally connected servers.

The ecosystem of Telco edge cloud is developing fast with various initiatives and collaborations announced by key players in the last 12 months in Asia. This development includes SK Telecom’s recent partnerships with VMware and Dell to offer edge computing in private 5G networking solutions. Singtel is leading the region in telco edge computing with its recent partnership with Microsoft to launch 5G MEC and its work with Ericsson to leverage MEC in its trial 5G SA network. Singtel and Globe (Philippines) are also part of the APAC-focused MEC Task Force launched in January. Other telcos are also collaborating with technology vendors and enterprises to explore and develop enterprise 5G solutions.

The success of such an ecosystem and its partnerships will depend on the adoption of their platforms by end-users. Hence, enterprises should include edge computing in their ICT roadmaps and be more open to collaborating with service providers to explore and co-create new solutions.

- March 18, 2024

From 1G to 5G

Since the 1G mobile network was launched, a new generation of mobile network technology has come out roughly every 10 years. 4G/LTE gave us a new generation of consumer-focused mobile applications such as Facetime, Uber, and Snapchat. Beyond making these applications popular, it has had a profound impact on our daily lives. With 5G, expectations are even higher, as 5G can be up to 100 times faster than 4G.

In the beginning, existing applications that are bandwidth hogs such as Netflix and YouTube will probably benefit the most from the transition to 5G. While many people are waiting for new applications that can consume the bandwidth from 5G, what really sets 5G apart from 4G/LTE is latency. Low latency is the prerequisite for real-time applications and edge devices are going to benefit the most from the low latency of the 5G networks. Please see the related diagram in 5G Magazine.

The Rise of Private 5G Networks

For many 5G applications that rely on public networks, full-scale 5G infrastructure has to be in place. For example, autonomous driving that relies on 5G might not work in rural areas if the infrastructure is not there. It will take time for wireless carriers to build out their public networks. While public 5G networks are being built, private (on-premise) 5G networks are gaining popularity.

For many companies, a private 5G network offers the control and reliability they will need for certain mission-critical activities, along with seamless integration across their existing systems. Since bandwidth does not need to be shared and additional encryption can be used, private 5G networks are more secure. Data inside a private 5G network can also be monitored closely. In some countries, businesses can either buy spectrum to build their own private 5G network or have telcos build and maintain it for them.

Industry 4.0 Plant Powered by a Private 5G Campus Network

One company that is leveraging private 5G networks for manufacturing is Bosch (see the related figure in the 5G Magazine). It plans to deploy 5G to 250 of its plants worldwide. What drove its decision to adopt a private 5G network over other technologies? Bosch needs a robust network that can transfer data reliably and ultra-fast, so that machines can communicate in real time. Reliability is important in manufacturing. A few dropped packets could mean a robot missing its cue or stopping work, resulting in a costly production shutdown. A private 5G wireless network can guarantee coverage. 5G includes several features that make it more reliable than existing wireless solutions, including protocols for recovering lost packets quickly and ways of routing data around network bottlenecks.

5G promises latencies as low as a millisecond, compared with around 30 milliseconds for current 4G networks. For smart machines to communicate in real time, low latency is important. Low latency means a wired connection is no longer needed to achieve it. A 5G wireless network increases the mobility of robots, which, in turn, can increase their utilization and efficiency. In addition, adding 5G wireless links to robots and other equipment allows them to be synchronized and calibrated more precisely, helps predict costly malfunctions and downtime, and allows sophisticated software, including artificial intelligence, to be piped in to make them more capable. Let’s look at another industry that is ripe for 5G transformation.

Ushering in The Next Generation of Healthcare With 5G

VA’s Palo Alto Health Care System is the first fully 5G-enabled hospital in VA (Veterans Affairs). It did not enable 5G so that people could get faster downloads on their phones. Its 5G infrastructure is meant to enable mobile connectivity between medical devices, and to support augmented reality and virtual reality tools for medical training, among other uses. 5G is well suited to these advanced tools, which require greater data transfer rates to support the large amounts of information transmitted across devices.

To turn the idea into reality, the team from VA Palo Alto Health Care System collaborated with Verizon, Microsoft, and Medivis: Verizon provided the 5G network backbone, Microsoft offered the HoloLens headset and information delivery platform, and Medivis supplied the imaging software. Physicians at VA’s Palo Alto Health Care System can now transform complex health data into interactive 3D holograms, models, and overlays. Let’s examine another application that is moving computing power to the edge of the 5G network.

Why 5G is Critical for Road Safety

What does 5G have to do with self-driving cars and safety? 5G’s increased throughput, reliability, availability, and lower latency will enable new safety-sensitive applications which are known as V2X or Vehicle-to-Everything. The low latency is important for real-time decision-making scenarios. Self-driving cars can generate terabytes of data daily. See Figure 2 in the 5G Magazine. Anything over a hundred milliseconds of latency is going to disrupt the operation of self-driving cars.
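A quick calculation shows why a hundred milliseconds matters on the road. The sketch below computes how far a vehicle travels while a message is still in flight. The speeds and latency values are illustrative assumptions, not figures from Honda, Verizon, or this article, and the function name is hypothetical.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Return the distance in meters a vehicle covers during a given latency."""
    if speed_kmh < 0 or latency_ms < 0:
        raise ValueError("speed_kmh and latency_ms must be non-negative")
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


if __name__ == "__main__":
    # Hypothetical scenarios: urban and highway speeds at several latencies.
    for speed_kmh in (50.0, 100.0):
        for latency_ms in (1.0, 30.0, 100.0):
            meters = distance_travelled_m(speed_kmh, latency_ms)
            print(f"{speed_kmh:.0f} km/h, {latency_ms:.0f} ms -> {meters:.2f} m")
```

At 100 km/h, a vehicle covers about 2.8 m during 100 ms of latency but only about 3 cm during 1 ms, which illustrates why safety-sensitive V2X scenarios push processing toward the network edge.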

Recognizing the importance of the lower latency and reliability offered by 5G wireless networks, Honda and Verizon have teamed up to explore how 5G and MEC (Mobile Edge Computing) can improve road safety. Using Verizon 5G Ultra-Wideband and 5G Mobile Edge Compute, they aim to ensure fast, reliable communication between road infrastructure, vehicles, and pedestrians.

Decentralized Edge Computing

The applications named above all share the need to process a large volume of data quickly and in real time. Some 5G applications will require an on-premise edge to process the data. As the demand for real-time processing and low-latency connectivity increases, edge computing will drive the growth of more 5G applications. Businesses are already building products that could be supercharged by 5G and edge computing. 5G applications are not a dream anymore. They are here.

- March 20, 2024

Private networks come with the promise of enterprise-level control over a required level of quality of service. Mission-critical applications, such as remotely coordinated control over robots and automated guided vehicles in intelligent factories, require very low latencies and high bandwidth. MEC (Multi-access Edge Computing) will be the handmaiden to tie the loose ends in integrating communication and computing, complemented by spectral efficiencies, to achieve service quality goals. The entry of cloud computing companies is accelerating the convergence of private networks, local network intelligence, computing services, and MEC that will bolster the adoption of enterprise applications at the edge.

Mission-critical enterprise applications advance

Applications migrating from pilots to commercialization include 3D applications, XReality, 3D simulations of digital twins, and computer vision-based enterprise facilities monitoring. Unity, a company specializing in photorealistic 3D visualizations valuable for educational AR applications and robots running digital twin simulations, is collaborating with Verizon and benefiting from its 5G Edge MEC services, which run on its 5G Ultra-Wideband network. Individual enterprise clients run the applications on their private clouds and securely interconnect with public networks for wide-area uses.